TL;DR:

- Advances in neural networks have made content generation effortless in the digital world.

- AI models have blurred the line between human-generated and AI-generated content.

- MidJourney’s latest release excels at generating realistic human photos.

- Virtual models are now being used by agencies to advertise products.

- 2D generative models struggle with disentangling facial attributes and altering fine details.

- The entertainment industry demands 3D content for immersive virtual worlds.

- The limited availability of diverse and high-quality 3D training data hinders the generalization of generative models.

- AlbedoGAN is a 3D generative model that captures high-resolution textures and geometric details.

- It leverages a pre-trained StyleGAN model for generating 2D faces and albedo from the latent space.

- AlbedoGAN uses image blending and Spherical Harmonics lighting to produce high-quality albedo.

- The FLAME model and StyleGAN’s latent space enhance shape generation in AlbedoGAN.

- AlbedoGAN enables face editing directly in the 3D domain using latent codes or text.

Main AI News:

Generating digital content has become far easier thanks to advances in neural networks. From text generation with GPT models to image synthesis with diffusion models, AI has transformed generative technology. Today, the line between human-created and AI-generated content is increasingly blurred.

The impact of AI-generated content is particularly evident in the realm of image generation. Take, for instance, the latest iteration of MidJourney, an AI platform renowned for its remarkable ability to generate lifelike human photographs.

The level of proficiency attained by these models has led to the emergence of agencies employing virtual models to endorse various products and apparel. The true beauty of utilizing generative models lies in their unparalleled versatility, allowing users to customize the output according to their preferences while still achieving visually captivating results.

Although 2D generative models excel at producing high-quality facial images, many applications demand more than they can offer, including facial animation, expression transfer, and virtual avatars. Using existing 2D models for these purposes is difficult because they struggle to disentangle facial attributes such as pose, expression, and illumination.

The ability to manipulate the intricate details of the generated faces using these models is inherently limited. Moreover, the entertainment industry, encompassing games, animation, and visual effects, necessitates the incorporation of 3D representations of shape and texture on a grand scale to construct immersive virtual worlds.

Efforts have been made to design generative models capable of generating 3D faces; however, the scarcity of diverse and high-quality 3D training data has impeded the widespread application of these algorithms in real-world scenarios. Some attempts have been made to overcome these limitations by utilizing parametric models and derived techniques to approximate the 3D geometry and texture of 2D facial images. Nevertheless, these 3D face reconstruction methods often fail to capture high-frequency details.

It is evident that a reliable tool for generating realistic 3D faces is imperative. Settling for a purely 2D approach would disregard the plethora of potential applications that could benefit from further advancements. Imagine the possibilities if an AI model capable of generating authentic 3D faces existed. Well, the wait is over as we introduce you to AlbedoGAN.

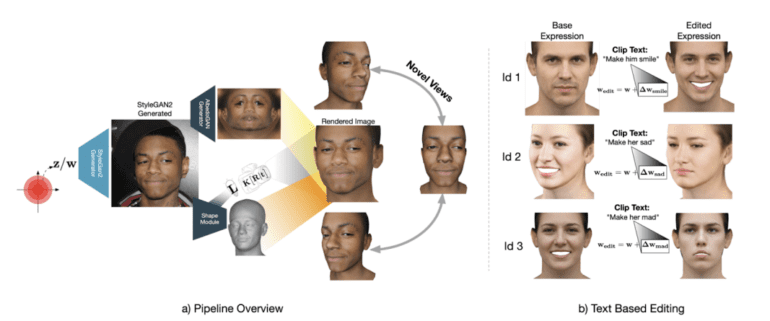

AlbedoGAN represents a groundbreaking 3D generative model for faces, utilizing a self-supervised approach to generate high-resolution textures and capture intricate geometric details. Leveraging the power of a pre-trained StyleGAN model, AlbedoGAN can generate exceptional 2D facial representations while simultaneously producing light-independent albedo directly from the latent space.

Albedo, the intrinsic surface color of the face independent of lighting, plays a crucial role in determining the appearance of a 3D face model. However, generating high-quality 3D models with albedo that generalizes across different poses, ages, and ethnicities requires an extensive database of 3D scans, which is costly and time-consuming to collect.

To address this challenge, AlbedoGAN adopts a novel approach, employing a combination of image blending and Spherical Harmonics lighting. This innovative technology enables the capture of high-quality 1024 × 1024 resolution albedo, ensuring generalizability across varying poses and effectively handling shading variations.
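To make the role of Spherical Harmonics lighting more concrete, here is a minimal sketch of the general idea: shading at a surface point is modeled as a weighted sum of nine low-order spherical-harmonics basis functions of the surface normal, and the observed color is albedo times shading. This is the standard second-order SH shading model, not AlbedoGAN's actual pipeline. The function names, the single-channel pixel, and the lighting coefficients are illustrative assumptions.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def sh_basis(normal):
    """Evaluate the 9 real spherical-harmonics basis functions (bands 0-2)
    at a unit surface normal (x, y, z)."""
    x, y, z = normal
    return [
        0.282095,                        # band 0: constant (ambient) term
        0.488603 * y,                    # band 1: linear terms
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,                # band 2: quadratic terms
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]

def shading(normal, light_coeffs):
    """Shading = dot product of the SH basis with 9 lighting coefficients."""
    return sum(b * c for b, c in zip(sh_basis(normal), light_coeffs))

def render(albedo, normal, light_coeffs):
    """Observed intensity = light-independent albedo * lighting-dependent shading."""
    return albedo * shading(normal, light_coeffs)

def recover_albedo(pixel, normal, light_coeffs, eps=1e-6):
    """Divide out the estimated shading to get back a light-independent albedo.
    eps guards against division by a near-zero shading value."""
    return pixel / max(shading(normal, light_coeffs), eps)

# Hypothetical example values (assumptions, not taken from the article).
normal = normalize((0.0, 0.0, 2.0))           # surface facing the camera
light = [1.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
pixel = render(0.8, normal, light)            # shaded observation
estimated = recover_albedo(pixel, normal, light)
print(round(estimated, 6))
```

In practice the albedo and lighting are estimated per pixel and per color channel over a whole texture map; the scalar version above only shows why knowing the lighting lets shading be separated from albedo.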

For the shape component, AlbedoGAN combines the FLAME model with per-vertex displacement maps guided by StyleGAN’s latent space, resulting in a higher-resolution mesh. Two networks, one responsible for albedo and one for shape, are trained in an alternating descent fashion. This approach lets AlbedoGAN generate 3D faces from StyleGAN’s latent space and perform face editing directly in the 3D domain using latent codes or text.
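As a rough illustration of what training in an alternating descent fashion means, the sketch below updates one set of parameters while the other is held fixed, then swaps. It is a toy, not AlbedoGAN's training code: the two scalars `theta_albedo` and `theta_shape` stand in for the weights of the two networks, and the quadratic `loss` is a made-up objective chosen only because its minimum is easy to verify.

```python
def loss(theta_albedo, theta_shape):
    """Toy joint objective (an assumption for illustration).
    Its minimum is at theta_albedo = 7/3, theta_shape = 4/3."""
    r1 = theta_albedo + 2.0 * theta_shape - 5.0
    r2 = theta_albedo - theta_shape - 1.0
    return r1 * r1 + r2 * r2

def grad_albedo(a, s):
    """Partial derivative of the loss with respect to theta_albedo."""
    return 2.0 * (a + 2.0 * s - 5.0) + 2.0 * (a - s - 1.0)

def grad_shape(a, s):
    """Partial derivative of the loss with respect to theta_shape."""
    return 4.0 * (a + 2.0 * s - 5.0) - 2.0 * (a - s - 1.0)

def alternating_descent(rounds=500, lr=0.05):
    """Alternate gradient steps: each step changes one parameter
    while the other is kept frozen."""
    a, s = 0.0, 0.0
    for _ in range(rounds):
        a -= lr * grad_albedo(a, s)  # update "albedo" parameter, shape frozen
        s -= lr * grad_shape(a, s)   # update "shape" parameter, albedo frozen
    return a, s

theta_albedo, theta_shape = alternating_descent()
print(round(theta_albedo, 4), round(theta_shape, 4), loss(theta_albedo, theta_shape))
```

The appeal of this scheme is that each network always sees a stable partner while it is being updated, which tends to be easier to optimize than changing both at once when the two outputs depend on each other.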

Conclusion:

The emergence of advanced generative AI models such as AlbedoGAN marks a notable breakthrough in the market. The ability to generate high-quality 3D faces with realistic textures and intricate details opens up a host of opportunities across various industries.

This advancement not only revolutionizes the advertising and entertainment sectors but also offers immense potential for applications in facial animation, expression transfer, and virtual avatars. With AlbedoGAN’s capability to bridge the gap between 2D and 3D content generation, businesses can harness its power to create visually captivating experiences and immersive virtual worlds, thereby gaining a competitive edge in the market.