TL;DR:

- Researchers have introduced DragGAN, an innovative point-based image manipulation system powered by generative AI technology.

- DragGAN allows precise control over objects’ pose, shape, expression, and layout, surpassing traditional image editing tools.

- As with U Point technology, users can drop a point on an image and modify the relevant pixels, but DragGAN goes beyond color and brightness adjustments to change the shape and layout of the content.

- The system employs AI algorithms to generate new pixels in response to user input, resulting in seamless transformations.

- DragGAN enables interactive control by letting users drag points in an image toward target positions, using feature-based motion supervision and point tracking.

- The technology enables users to manipulate a wide range of subjects with utmost precision, including animals, vehicles, landscapes, and people.

- DragGAN offers fine-grained control over where pixels end up, operating within the GAN's learned image manifold rather than simply warping existing pixels.

- The user-friendly interface enhances accessibility and practical appeal, even for inexperienced users.

- DragGAN consistently produces realistic outputs, even in challenging cases such as generating occluded content and deforming shapes.

- The research paper delves into DragGAN’s inner workings, showcasing its efficacy through experiments and results.

- The masking function in DragGAN lets users mark the region that is allowed to move, enabling more localized adjustments.

- The system’s capability is exemplified by altering the orientation of a dog’s face and generating realistic teeth for a lion’s open mouth.

- The approach combines latent code optimization and point tracking, leveraging discriminative GAN features for precise pixel-level deformations.

- DragGAN surpasses previous methods in GAN-based manipulation, with the potential for powerful image editing using generative priors.

- Future work aims to expand point-based editing to 3D generative models, further enhancing DragGAN’s capabilities.

Main AI News:

Researchers have unveiled DragGAN, a point-based image manipulation system powered by generative artificial intelligence (AI) that gives users precise control over the pose, shape, expression, and layout of objects in an image. DragGAN harnesses generative adversarial networks (GANs) through an intuitive graphical interface: users drag points on an image, and the GAN regenerates the content to match their specifications.

Like DxO's U Point technology, which lets users drop a point on an image and modify the relevant pixels, DragGAN is point-based, but it goes further: instead of adjusting brightness and color, it reshapes and rearranges the image content itself. Rather than warping existing pixels, DragGAN uses its GAN to generate new pixels in response to user input, resulting in seamless transformations.

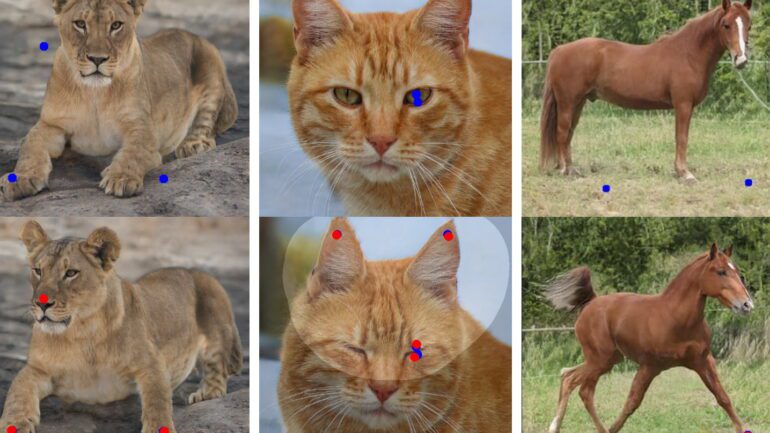

The researchers behind this groundbreaking work explain, “In this study, we delve into a powerful yet relatively unexplored method of controlling GANs, which involves ‘dragging’ any points within an image to precisely attain target points in an interactive and user-friendly manner, as illustrated in Fig.1. To achieve this, we introduce DragGAN, which comprises two key components: 1) feature-based motion supervision that directs the handle point towards the desired position, and 2) a novel point tracking approach that utilizes discriminative GAN features to continuously track the handle points’ positions.”
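For readers who want the math, the two components in the quote can be summarized as follows, written as we understand them from the DragGAN paper. Here w is the latent code being optimized, F is the feature map of an intermediate generator layer resized to image resolution, F_0 holds the features of the original image, p_i and t_i are the handle and target points (p_i^0 being a handle's starting position), Omega_1 and Omega_2 are neighborhoods of radius r_1 and r_2 around p_i, M is the optional binary mask of the movable region, and lambda is a weight. The operator sg(.) is our notation for a stop-gradient, reflecting the paper's remark that gradients are not back-propagated through F(q); F(q + d_i) is read off by bilinear interpolation because q + d_i generally falls between pixels.

```latex
\begin{aligned}
d_i &= \frac{t_i - p_i}{\lVert t_i - p_i \rVert_2} \\[4pt]
\mathcal{L}(w) &= \sum_{i=1}^{n} \sum_{q \in \Omega_1(p_i,\, r_1)}
  \big\lVert \operatorname{sg}\!\big(F(q)\big) - F(q + d_i) \big\rVert_1
  + \lambda \,\big\lVert (F - F_0) \odot (1 - M) \big\rVert_1 \\[4pt]
p_i &\leftarrow \operatorname*{arg\,min}_{q \in \Omega_2(p_i,\, r_2)} \lVert F'(q) - f_i \rVert_1,
  \qquad f_i = F_0\big(p_i^{0}\big)
\end{aligned}
```

Each drag iteration takes one optimization step on the loss, which produces new features F', and then re-locates every handle with the nearest-neighbor search in the last line.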

This remarkable technology allows users to manipulate images depicting a wide range of subjects, including animals, vehicles, landscapes, and even people. With DragGAN, users gain the ability to deform images with utmost precision, exerting control over the movement, form, expression, and arrangement of pixels. The Verge aptly describes DragGAN as “similar to Photoshop’s Warp tool but significantly more potent,” as it employs AI to regenerate the fundamental elements within an image.

While GANs have become increasingly capable of generating realistic images, DragGAN adds precise control over where pixels end up. Because edits are made within the GAN's learned image manifold, users can manipulate a two-dimensional image in ways that look three-dimensional, such as turning an animal's head, while the result stays realistic. Examples show users changing a dog's pose, adjusting the height and reflection of a mountain beside a lake, and making extensive changes to a lion's appearance and expression.

DragGAN's appeal extends beyond its raw capabilities. Its user interface is simple and intuitive, so almost any user can take advantage of the technology without an in-depth understanding of the underlying AI. Unlike many AI tools that can overwhelm newcomers, DragGAN's accessible interface broadens its commercial and practical appeal.

The researchers elaborate, “Since these manipulations occur within the learned generative image manifold of a GAN, they consistently produce realistic outputs, even for challenging scenarios such as generating occluded content and deforming shapes while preserving the object’s rigidity. Both qualitative and quantitative comparisons demonstrate DragGAN’s superiority over previous methods in the domains of image manipulation and point tracking.”

In their paper, the research team, consisting of Xingang Pan, Thomas Leimkühler, Lingjie Liu, and Christian Theobalt from the Max Planck Institute for Informatics, Ayush Tewari from MIT CSAIL, and Abhimitra Meka from Google AR/VR, provides a detailed exploration of DragGAN. The paper explains how DragGAN works, including its underlying mathematical formulation, and demonstrates its effectiveness through a range of experiments and results.

One notable feature highlighted in the paper is DragGAN's masking function, which lets users mark the region of an image that is allowed to move. An illustrative example in the paper involves changing the orientation of a dog's face. Without a mask, dragging a point can rotate the dog's entire body. With a mask over the face, only the face moves while the rest of the image stays almost unchanged, enabling more nuanced adjustments.
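In the paper's formulation, as we read it, the mask enters the optimization as a penalty on feature changes outside the movable region. The NumPy sketch below computes that term on toy arrays; the function and argument names (`mask_penalty`, `feat`, `feat0`, `lam`) are our own, and real feature maps would come from the GAN rather than being hand-built.

```python
import numpy as np

def mask_penalty(feat, feat0, mask, lam):
    """lam * || (feat - feat0) * (1 - mask) ||_1

    feat, feat0: current and original feature maps, shape (C, H, W)
    mask:        shape (H, W); 1 marks the region allowed to move, 0 elsewhere
    lam:         penalty weight (a tuning knob; no particular value is implied)
    """
    keep_fixed = 1.0 - mask                    # 1 where content should not change
    diff = (feat - feat0) * keep_fixed[None]   # broadcast the mask over channels
    return lam * np.abs(diff).sum()

# Toy check: 2 channels on a 4x4 grid, top two rows marked as movable.
feat0 = np.zeros((2, 4, 4))
mask = np.zeros((4, 4))
mask[:2, :] = 1.0

inside = feat0.copy()
inside[:, 0, 0] = 1.0    # change inside the mask: not penalized
outside = feat0.copy()
outside[:, 3, 3] = 1.0   # change outside the mask: penalized

print(mask_penalty(inside, feat0, mask, lam=10.0))   # 0.0
print(mask_penalty(outside, feat0, mask, lam=10.0))  # 20.0
```

Minimizing this term alongside the motion loss discourages edits outside the mask, which is why the dog's body stays put when only the face is marked as movable.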

Another compelling demonstration involves a lion. The initial image shows a lion with its mouth closed. By placing points on the upper and lower parts of the lion's muzzle and dragging them apart, users can open the lion's mouth. DragGAN generates new pixels for the interior of the mouth, producing realistic teeth while preserving the visual integrity of the rest of the image.
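The lion edit uses two handle/target pairs at once. The plain-Python sketch below shows only the geometric bookkeeping behind such a multi-point drag: each handle's unit step direction toward its target and a stop test once every handle is close enough. The paper stops when all handles are within a small pixel distance of their targets; the `tol=1.0` here is an assumed value, the coordinates are hypothetical, and the toy loop assumes each optimization-plus-tracking step moves a handle by exactly one pixel along its direction, which a real GAN would only approximate.

```python
import math

def step_directions(handles, targets):
    """Unit vectors d_i = (t_i - p_i) / ||t_i - p_i|| for each handle/target pair."""
    dirs = []
    for (py, px), (ty, tx) in zip(handles, targets):
        dy, dx = ty - py, tx - px
        norm = math.hypot(dy, dx)
        # A handle already sitting on its target needs no further motion.
        dirs.append((0.0, 0.0) if norm == 0 else (dy / norm, dx / norm))
    return dirs

def all_reached(handles, targets, tol=1.0):
    """True once every handle is within `tol` pixels of its target."""
    return all(math.hypot(ty - py, tx - px) <= tol
               for (py, px), (ty, tx) in zip(handles, targets))

# Toy run: hypothetical upper and lower muzzle points (row, col) dragged apart.
handles = [(40.0, 50.0), (60.0, 50.0)]
targets = [(30.0, 50.0), (75.0, 50.0)]
steps = 0
while not all_reached(handles, targets):
    dirs = step_directions(handles, targets)
    handles = [(py + dy, px + dx) for (py, px), (dy, dx) in zip(handles, dirs)]
    steps += 1
print(steps)  # 14
```

Normalizing each direction keeps every handle moving at a similar per-step rate regardless of how far it has to go, so the nearer point simply arrives first and waits.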

The researchers attribute these results to DragGAN's two key components. First, motion supervision optimizes the GAN's latent code so that multiple handle points move, step by step, toward their target locations. Second, a point-tracking procedure updates the handle points' positions after each step so they stay on the intended parts of the object. Both components rely on the discriminative qualities of the GAN's intermediate feature maps, which yields precise pixel-level image deformations and interactive performance.
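The point-tracking step can be sketched as a nearest-neighbor search: after each optimization step, look in a small window around the current handle position for the feature that best matches the handle's feature in the original image. The NumPy version below is a minimal sketch under our own assumptions: a square search window, L1 distance, integer pixel positions, and a random array standing in for real GAN features. The name `track_point` is hypothetical.

```python
import numpy as np

def track_point(feat, f_init, p, r):
    """Nearest-neighbor point tracking in the style described for DragGAN.

    feat:   current feature map, shape (C, H, W)
    f_init: handle's feature vector in the original image, shape (C,)
    p:      current handle position as integer (row, col)
    r:      search radius in pixels (a square window here, our simplification)
    Returns the position in the window whose feature is closest in L1 distance.
    """
    _, H, W = feat.shape
    y, x = p
    # Clip the search window to the image bounds.
    y0, y1 = max(0, y - r), min(H, y + r + 1)
    x0, x1 = max(0, x - r), min(W, x + r + 1)
    window = feat[:, y0:y1, x0:x1]
    # L1 distance from every candidate feature to the original handle feature.
    dist = np.abs(window - f_init[:, None, None]).sum(axis=0)
    dy, dx = np.unravel_index(np.argmin(dist), dist.shape)
    return (y0 + int(dy), x0 + int(dx))

rng = np.random.default_rng(0)
feat0 = rng.standard_normal((8, 32, 32))   # stand-in for a GAN feature map
f_init = feat0[:, 10, 10]                  # handle feature in the original image

# Simulate the content under the handle shifting by (2, 3) pixels after an edit.
feat1 = np.roll(feat0, shift=(2, 3), axis=(1, 2))
print(track_point(feat1, f_init, (10, 10), r=4))  # (12, 13)
```

Searching only a small window keeps tracking cheap and stops the handle from jumping to a similar-looking feature elsewhere in the image.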

The research team shows that their approach outperforms the state of the art in GAN-based manipulation, opening new avenues for powerful image editing with generative priors. Looking ahead, the team plans to extend point-based editing to 3D generative models.

Conclusion:

The introduction of DragGAN, an innovative point-based image manipulation system powered by generative AI technology, has significant implications for the market. Its revolutionary capabilities to precisely control and manipulate images, coupled with intuitive graphical control, set it apart from traditional image editing tools. DragGAN opens up new possibilities for creative expression and empowers users to transform images with unparalleled precision and realism.

This technology is poised to have a profound impact on various industries, particularly advertising, design, and digital content creation, where the ability to manipulate images with such control and ease will enhance creativity and deliver impactful visual experiences. The market can expect an increased demand for DragGAN and its integration into various software applications, leading to a transformative shift in image editing practices and the way we interact with visual content.