TL;DR:

- Turnitin’s AI writing-detection tool has a higher false positive rate than initially claimed.

- The company has not disclosed the new document-level false positive rate, leading to concerns within the education community.

- Turnitin attributes the discrepancy to differences between lab testing and real-world user experiences.

- When the tool indicates a piece of writing has less than a 20 percent chance of being machine-generated, it has a higher incidence of false positives.

- Turnitin will now include an asterisk and a cautionary message to address these results.

- The sentence-level false positive rate is approximately 4 percent, according to Turnitin.

- The tool struggles with text that combines AI-generated and human-written content, with a significant number of false positive sentences located in close proximity to AI-generated sentences.

- Turnitin plans to conduct further experimentation and testing in the future.

Main AI News:

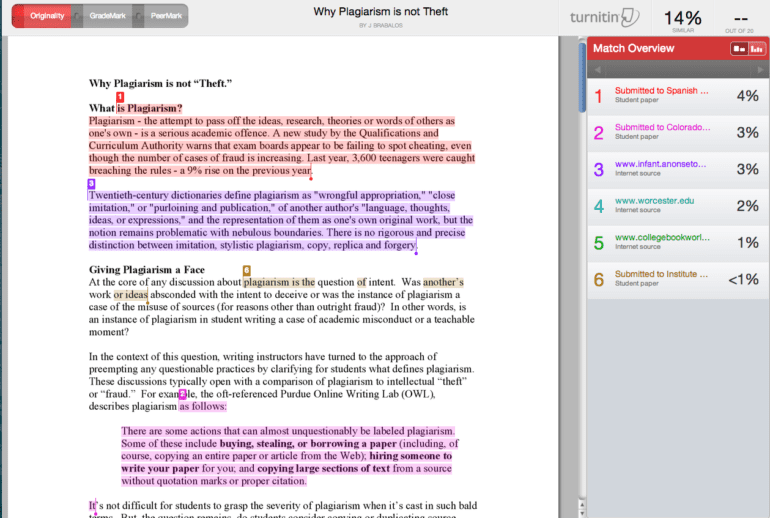

Turnitin, the academic technology company, is facing criticism after acknowledging that its AI writing-detection tool has a higher false positive rate than initially claimed. In a recent announcement, Annie Chechitelli, Turnitin’s chief product officer, disclosed that the tool’s false positive rate exceeds the previously touted figure of less than 1 percent. The company has not disclosed the updated document-level false positive rate, leaving the education community uncertain about the extent of the discrepancy.

In an effort to maintain transparency, Chechitelli conveyed the company’s commitment to sharing its findings and progress with the education community. According to Turnitin, the discrepancy stems from differences between lab testing and real-world user experiences. The AI-detection tool exhibits a higher incidence of false positives when it indicates that a piece of writing has less than a 20 percent chance of being machine-generated. In response, the company will append an asterisk to these results, accompanied by a cautionary message.

Turnitin’s AI-detection tool reports two distinct statistics: one at the document level and another at the sentence level. Chechitelli says the sentence-level false positive rate stands at approximately 4 percent. However, the tool struggles with text that mixes AI-generated and human-written prose. According to the statement, 54 percent of false positive sentences (human-written sentences erroneously flagged as AI-generated) are located in close proximity to AI-generated sentences. A further 26 percent of false positive sentences are positioned just two sentences away from an AI-generated sentence.
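To make the sentence-level figure concrete, here is a minimal Python sketch of how a false positive rate is conventionally computed and what a rate of about 4 percent would imply for a single essay. The function names, the counts, and the 50-sentence essay are hypothetical illustrations, not Turnitin’s data or method, and the sketch assumes every sentence is equally likely to be misflagged, which the proximity figures above suggest is not true in practice.

```python
def false_positive_rate(false_positives: int, true_negatives: int) -> float:
    """Share of human-written items that were wrongly flagged as AI-generated."""
    human_total = false_positives + true_negatives
    if human_total == 0:
        raise ValueError("need at least one human-written item")
    return false_positives / human_total


def expected_false_flags(human_sentences: int, rate: float) -> float:
    """Expected number of human-written sentences wrongly flagged at a given rate."""
    return human_sentences * rate


# Hypothetical counts: 4 of 100 human-written sentences flagged as AI.
rate = false_positive_rate(false_positives=4, true_negatives=96)
print(f"sentence-level false positive rate: {rate:.0%}")

# Hypothetical 50-sentence essay written entirely by a student.
print(f"expected wrongly flagged sentences: {expected_false_flags(50, rate):.1f}")
```

Under these assumptions, a fully human-written essay of 50 sentences would be expected to have about two sentences flagged as AI-generated, which helps explain why a low sentence-level rate can still matter to an individual student.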

In response to these revelations, Turnitin has outlined its plans to embark on extensive experimentation and testing in the coming months. Acknowledging the rapidly evolving landscape of large language models and AI writing, Chechitelli emphasizes that the company’s metrics may undergo further transformations. As the education community ventures into uncharted territory, Turnitin strives for continual improvement and endeavors to meet the evolving demands of the field.

Turnitin’s pursuit of accuracy and transparency in the AI writing-detection realm serves as a reminder that advancements in technology necessitate ongoing scrutiny. While challenges persist, it is through thorough analysis, experimentation, and collaboration that the education community can navigate these uncharted waters and ensure the integrity of academic work remains uncompromised.

Conclusion:

The higher-than-claimed false positive rate of Turnitin’s AI detector has significant implications for the market. The credibility and reliability of AI writing-detection tools are essential for the education community. Turnitin’s commitment to transparency and ongoing improvement is commendable, as it demonstrates a willingness to address shortcomings and improve the tool’s accuracy.

However, it also underscores the need for thorough evaluation and continued advancements in the field to ensure the integrity of academic work and prevent unwarranted accusations of plagiarism. This development serves as a reminder that technological advancements require careful scrutiny and adaptation to meet evolving market demands.