TL;DR:

- FitMe is an AI model developed by researchers from Imperial College London that transforms arbitrary facial images into relightable facial avatars.

- It addresses the challenges of 3D facial reconstruction from single unconstrained images, offering applications in virtual and augmented reality, social media, gaming, synthetic dataset generation, and health.

- The model utilizes 3D Morphable Models (3DMM) and deep generative models to capture face shape, appearance, and texture, enabling high-fidelity reconstructions.

- FitMe introduces precise differentiable renderings based on high-resolution face reflectance texture maps, allowing for realistic rendering in common gaming and rendering engines.

- The model achieves remarkable identity similarity and produces fully renderable avatars that can be readily used in off-the-shelf rendering programs.

- FitMe represents a significant advancement in the field, providing lifelike shading, photorealistic depictions, and efficient iterative fitting, all vital for immersive experiences.

Main AI News:

The field of 3D facial reconstruction has witnessed remarkable advancements in the past decade, yet the challenge of reconstructing a detailed face from a single unconstrained image remains a focal point of research in computer vision. With applications ranging from virtual and augmented reality to social media and gaming, the demand for accurate and realistic facial digitization has soared. However, existing methods often fall short of precisely capturing the identities of individuals when it comes to producing components for photorealistic rendering.

One popular approach for obtaining facial form and appearance from “in-the-wild” images is through 3D Morphable Models (3DMM). These models leverage Principal Component Analysis (PCA) to learn the shape and appearance variations from a dataset of over 200 participants. While initial studies achieved promising results, more complex models like LSFM, Basel Face Model, and Facescape have emerged, encompassing thousands of individuals. Furthermore, recent advancements have extended 3DMMs to encompass entire human heads and various facial features such as ears and tongue. However, these models still struggle to generate textures that exhibit photorealistic realism.

Deep generative models, especially Progressive GAN architectures, have made significant strides in learning the distributions of high-resolution 2D facial photographs. By employing Generative Adversarial Networks (GANs), these models excel at generating realistic facial samples. In recent developments, researchers have successfully learned meaningful latent regions that enable reconstruction and control of different aspects of the generated samples using style-based progressive generative networks. Techniques like UV mapping have also proven effective in acquiring 2D representations of 3D facial features. Additionally, rendering functions can utilize 3D facial models generated by 3DMMs to produce 2D facial images. The rendering process itself requires iterative optimization, which has been made possible by advancements in differentiable rendering, photorealistic face shading, and rendering libraries.

Despite these advances, the conventional Lambertian shading model employed by 3DMM falls short of accurately representing the complexity of face reflectance. Achieving lifelike facial representation necessitates the incorporation of multiple RGB textures that account for various facial reflectance factors. While attempts have been made to simplify such settings, acquiring datasets that meet these requirements remains a challenge. Although deep models can effectively capture facial appearances, they struggle with single or multiple picture reconstructions.

In a contemporary alternative paradigm that revolves around learned neural rendering, implicit representations have proven successful in capturing avatar appearance and shape. However, standard renderers struggle to work with such implicit representations and lack the ability to relight them. One notable advancement in this direction is the Albedo Morphable Model (AlbedoMM), which employs a linear PCA model to record facial reflectance and shape. Nonetheless, the resulting per-vertex color and normal reconstructions fall short of achieving photorealistic depiction due to their low resolution. AvatarMe++, on the other hand, can reconstruct high-resolution texture maps of facial reflectance from a single “in-the-wild” photograph. However, the reconstruction, upsampling, and reflectance steps cannot be directly optimized using the input image.

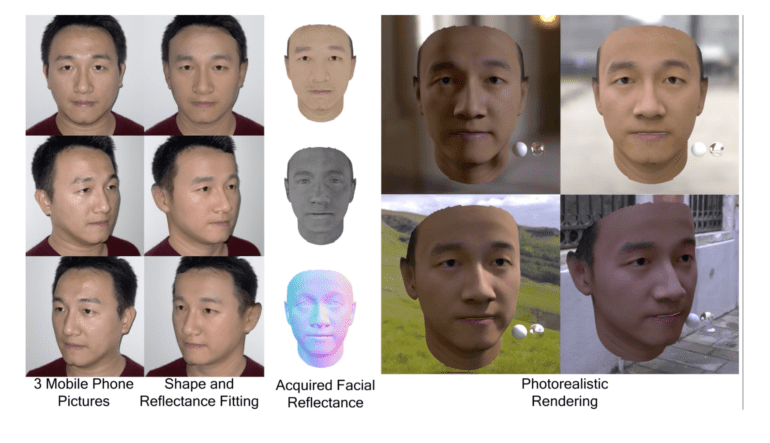

Addressing these challenges, researchers from Imperial College London introduce FitMe, a fully renderable 3DMM that can be fitted on free facial pictures using precise differentiable renderings based on high-resolution face reflectance texture maps. FitMe achieves identity similarity and produces highly realistic and fully renderable reconstructions that can be seamlessly integrated into off-the-shelf rendering programs. The texture model of FitMe encompasses a multimodal style-based progressive generator that simultaneously generates surface normals, specular albedo, and diffuse albedo for the face. To ensure effective training with various statistical modalities, a meticulously crafted branching discriminator is employed.

To build a high-quality face reflectance dataset, the researchers optimize AvatarMe++ using the publicly available MimicMe dataset, consisting of 5,000 individuals. This dataset is further modified to ensure a balanced representation of different skin tones. For face and head geometry, interchangeable models based on sizable geometry datasets are utilized. FitMe incorporates a style-based generator projection and a 3DMM fitting-based approach for single- or multi-image fitting. To facilitate effective iterative fitting in under one minute, the rendering function must be differentiable and efficient, rendering models like path tracing ineffective. Previous research has relied on slower optimization techniques or simpler shading models like Lambertian.

A key improvement over previous work is the inclusion of shading that closely resembles lifelike appearances, featuring convincing diffuse and specular rendering. FitMe excels in capturing the form and reflectance necessary for photorealistic rendering in common rendering engines. By leveraging the expanded latent space of the generator and employing photorealistic fitting techniques, FitMe achieves high-fidelity facial reflectance reconstruction with remarkable identity similarity while precisely capturing features in diffuse albedo, specular albedo, and normals.

Conclusion:

FitMe’s development and introduction mark a significant breakthrough in the market for facial avatars in gaming and rendering. By addressing the limitations of existing methods and providing a comprehensive solution for high-fidelity reconstructions, FitMe opens up new possibilities for developers, content creators, and businesses operating in virtual and augmented reality, social media, gaming, and other related industries. The ability to generate relightable facial avatars with remarkable realism and seamless integration into rendering programs will enhance the quality of visual experiences and contribute to the growth and advancement of these markets.