TL;DR:

- Douwe Kiela and Amanpreet Singh co-founded Contextual AI to address the limitations of current large language models (LLMs) in enterprise settings.

- Contextual AI employs the retrieval augmented generation (RAG) technique to enhance LLMs by incorporating external data sources.

- RAG enables LLMs to provide context-aware responses and overcome issues related to attribution and customization.

- The integration of RAG into LLMs offers improved accuracy, reliability, and traceability in generative AI for enterprises.

- Contextual AI competes in the emerging market of enterprise-focused language models, alongside other players like OpenAI and Microsoft.

- The company aims to provide an integrated solution tailored specifically for enterprise use cases, setting itself apart from other generative AI companies.

- With $20 million in seed funding, Contextual AI plans to invest in product development and expand its workforce to drive innovation.

Main AI News:

In the realm of artificial intelligence, large language models (LLMs) have emerged as game-changers with the potential to revolutionize entire industries. However, these models face limitations that make them less appealing to enterprise organizations that prioritize compliance and governance. One major drawback is the tendency of LLMs to present generated information with unwarranted confidence, whether or not it is correct. Another is that their knowledge base is difficult to modify or revise.

To address these obstacles and more, Douwe Kiela co-founded Contextual AI, a company that recently emerged from stealth mode with an impressive $20 million in seed funding. Supported by prominent investors, including Bain Capital Ventures, Lightspeed, Greycroft, and SV Angel, Contextual AI aims to pioneer the “next generation” of enterprise-focused LLMs.

“We established this company to cater to the needs of enterprises operating in the expanding field of generative AI, which has predominantly served consumers thus far,” Kiela shared with TechCrunch via email. “Contextual AI is actively tackling multiple hurdles that currently hinder the adoption of generative AI in the enterprise domain.”

Kiela, along with his co-founder Amanpreet Singh, previously worked together at AI startup Hugging Face and Meta before embarking on their own venture in early February. While at Meta, Kiela spearheaded research on a technique called retrieval augmented generation (RAG), which forms the foundation of Contextual AI’s text-generating AI technology.

So, what exactly is RAG? In a nutshell, RAG augments LLMs with external sources such as files and web pages. When presented with a prompt, RAG searches these sources for relevant data, combines that data with the original prompt, and feeds the enriched prompt into an LLM. The result is a context-aware response that draws on the retrieved information, providing a more accurate and informed answer.

In contrast, when posed a question like “What’s Nepal’s GDP by year?”, a typical LLM such as ChatGPT might only provide GDP data for Nepal up to a certain date, without citing the source of the information.
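To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It is an illustration only, not Contextual AI's implementation: the document store, the file names, the word-overlap scoring, and the `generate` stub are all assumptions made for this example. A production system would typically use a learned retriever and call a real LLM.

```python
import re
from collections import Counter
from math import sqrt

# Hypothetical document store: (source, text) pairs invented for illustration.
DOCUMENTS = [
    ("econ/nepal.txt", "Placeholder passage about Nepal GDP by year."),
    ("hr/leave.txt", "Employees accrue vacation leave every month."),
    ("it/vpn.txt", "Connect to the VPN before opening internal dashboards."),
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return up to k (source, text) pairs that share words with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), source, text)
              for source, text in documents]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, text) for score, source, text in scored[:k] if score > 0]

def build_prompt(query, passages):
    """Combine the retrieved passages with the original prompt."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return ("Answer using only the context below and cite your sources.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

def generate(prompt):
    """Stand-in for an LLM call (assumption: swap in a real model here)."""
    return f"(model output for a {len(prompt)}-character prompt)"

def answer(query, documents=DOCUMENTS, k=1):
    """Retrieve, enrich the prompt, generate, and report which sources were used."""
    passages = retrieve(query, documents, k)
    reply = generate(build_prompt(query, passages))
    return reply, [source for source, _ in passages]
```

Calling `answer("What's Nepal's GDP by year?")` returns the stubbed reply together with the list of sources that were placed in the prompt. Because the sources travel with the answer, the response can be traced back to specific documents, and updating what the system knows only requires editing the document store rather than retraining the model.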

Kiela asserts that RAG also addresses other prevailing issues associated with current LLMs, such as attribution and customization. Traditional LLMs often offer little transparency about why they responded the way they did, and integrating additional data sources typically requires extensive retraining or fine-tuning, a challenge that RAG circumvents.

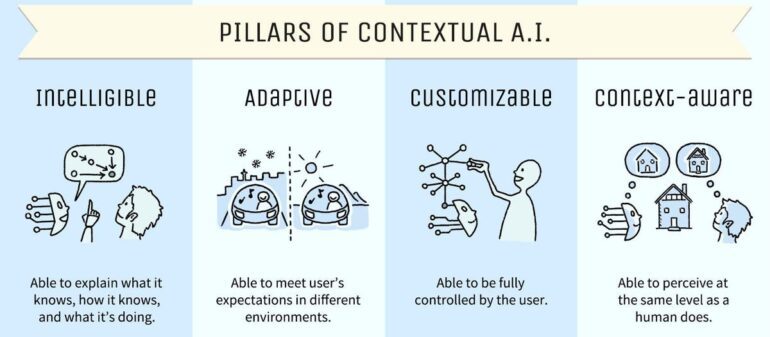

“RAG language models can achieve comparable performance to equivalent language models while being smaller in size. This enhances speed, resulting in reduced latency and cost,” explained Kiela. “Our solution tackles the limitations and inherent problems of existing approaches. We firmly believe that integrating and optimizing modules for data integration, reasoning, speech, and even vision and audio will unlock the true potential of language models for enterprise applications.”

My colleague Ron Miller has previously speculated about the future of generative AI in the enterprise, suggesting that smaller, more focused language models could play a significant role. While I don’t dispute this notion, it’s plausible that a combination of “smaller” models and existing LLMs supplemented by vast repositories of company-specific documents may be the winning formula.

Contextual AI isn’t the first player to explore this concept. OpenAI and its close collaborator, Microsoft, recently introduced a plug-in framework enabling third parties to incorporate additional sources of information into LLMs like GPT-4. Similarly, other startups like LlamaIndex are experimenting with methods to inject personal or private data, including enterprise data, into LLMs.

Nonetheless, Contextual AI claims to possess a competitive edge in the enterprise sector. Although the company is currently pre-revenue, Kiela states that Contextual AI is engaged in discussions with Fortune 500 companies to pilot its groundbreaking technology.

“Enterprises must have confidence in the accuracy, reliability, and traceability of the answers generated by generative AI,” emphasized Kiela. “Contextual AI will facilitate employers and their valuable knowledge workers in harnessing the efficiency benefits offered by generative AI, while ensuring safety and accuracy. While numerous generative AI companies have expressed intentions to target the enterprise market, Contextual AI will differentiate itself by developing a highly integrated solution explicitly designed for enterprise use cases.”

Conclusion:

The emergence of Contextual AI, with its focus on building enterprise-focused language models around retrieval augmented generation, is a notable development in the market. By addressing limitations around accuracy, reliability, and traceability, Contextual AI aims to provide enterprises with a more efficient and trustworthy generative AI solution. Its arrival also intensifies competition with companies like OpenAI and Microsoft, which are targeting the enterprise market with their own language models. The market is shifting toward more specialized and integrated solutions, reflecting growing demand for AI technologies that meet the stringent compliance and governance requirements of enterprises.