TL;DR:

- Large Language Models (LLMs) have revolutionized various applications, but compressing them for memory-limited devices is challenging.

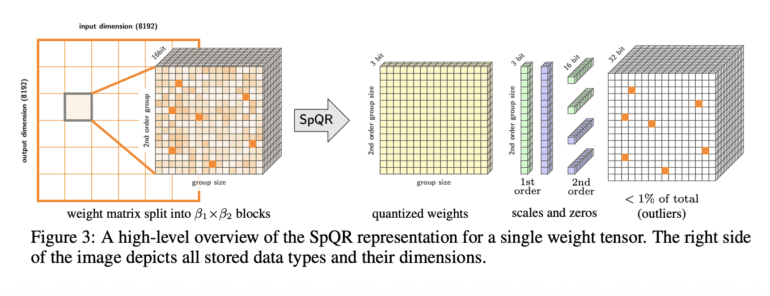

- Sparse-Quantized Representation (SpQR) is a compressed format and quantization technique that enables near-lossless compression of LLMs.

- SpQR identifies outlier weights for high-precision storage and uses grouped quantization with small group sizes.

- Models are converted to the SpQR format with an extended version of the post-training quantization (PTQ) approach.

- SpQR allows running 33 billion parameter LLMs on a single 24 GB GPU without performance degradation and provides a 15% speedup at 4.75 bits.

- SpQR provides efficient encoding/decoding algorithms and achieves memory compression of more than 4x.

- This breakthrough in LLM compression opens up possibilities for deploying powerful models on memory-limited devices.

Main AI News:

The power and potential of Large Language Models (LLMs) have been nothing short of extraordinary. These models, capable of learning from vast amounts of data, have revolutionized various applications, ranging from generating human-like text to question-answering, code completion, text summarization, and even the creation of highly skilled virtual assistants. While LLMs have undoubtedly made their mark, there is now a shift towards developing smaller models trained on even larger datasets.

Smaller models require fewer computational resources than their larger counterparts. A prime example is LLaMA: its 7-billion-parameter version, trained on 1 trillion tokens, is 25 times smaller than the 175-billion-parameter GPT-3, yet it delivers results competitive with GPT-3 on many benchmarks. This has sparked interest in compressing LLMs to fit into memory-limited devices such as laptops and mobile phones.

However, compressing LLMs poses its own set of challenges. Maintaining generative quality becomes increasingly difficult, and accuracy often degrades when 3- to 4-bit quantization is applied to models with 1 to 10 billion parameters. These limitations stem from the sequential nature of LLM generation: each new token is conditioned on the tokens generated before it, so even slight errors can accumulate and seriously compromise the output. Overcoming these hurdles requires low-bit-width quantization methods that do not sacrifice predictive performance relative to the original 16-bit model.
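Why does dropping from 4 to 3 bits hurt so much? With simple round-to-nearest (RTN) quantization, the step between representable levels is (max − min) / (2^bits − 1), so every bit removed roughly doubles the step and, with it, the per-weight error. The sketch below is a generic illustration on synthetic Gaussian "weights" (an assumption, not real LLM weights), with one shared range for the whole vector; the function names are hypothetical.

```python
import random

def rtn_dequantize(values, bits):
    """Asymmetric min-max round-to-nearest quantization, returned already dequantized."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / (2 ** bits - 1) or 1e-12  # step between adjacent levels
    return [lo + round((v - lo) / scale) * scale for v in values]

def mean_abs_error(values, bits):
    """Average absolute difference between the original and quantized values."""
    approx = rtn_dequantize(values, bits)
    return sum(abs(a - b) for a, b in zip(values, approx)) / len(values)

random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(4096)]  # synthetic stand-in weights
for bits in (8, 4, 3):
    print(f"{bits}-bit mean |error|: {mean_abs_error(weights, bits):.6f}")
```

The error grows sharply as the bit width shrinks; in a real model these per-layer errors feed into every subsequent token.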

In response to these accuracy limitations, researchers have introduced Sparse-Quantized Representation (SpQR), a compressed format and quantization technique. This hybrid approach enables near-lossless compression of pretrained LLMs down to 3 to 4 bits per parameter. According to the authors, SpQR is the first weight quantization technique to reach such compression ratios while keeping the end-to-end accuracy loss below 1% relative to the dense baseline, as measured by perplexity.
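To make the "less than 1% error in perplexity" criterion concrete: perplexity is the exponential of the mean per-token negative log-likelihood, and the comparison is the relative increase of the compressed model's perplexity over the dense model's. The perplexity values below are made-up placeholders for illustration, not results reported for SpQR, and the helper names are hypothetical.

```python
import math

def perplexity(token_nlls):
    """Perplexity = exp(mean negative log-likelihood per token, in nats)."""
    return math.exp(sum(token_nlls) / len(token_nlls))

def relative_gap(ppl_compressed, ppl_dense):
    """Relative perplexity increase of a compressed model over the dense baseline."""
    return (ppl_compressed - ppl_dense) / ppl_dense

# Hypothetical numbers, for illustration only.
dense_ppl, compressed_ppl = 10.00, 10.08
gap = relative_gap(compressed_ppl, dense_ppl)
print(f"relative gap: {gap:.2%}")  # relative gap: 0.80%
print("near-lossless (< 1%):", gap < 0.01)
```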

SpQR rests on two principles. First, it identifies outlier weights whose quantization would cause disproportionately high errors. These weights are stored in high precision, while the remaining weights are stored in much lower precision, typically 3 bits. Second, SpQR uses a variant of grouped quantization with very small groups, such as 16 contiguous elements, so that each group's quantization parameters are fitted to only a handful of weights. The quantization scales themselves can also be stored in a compact 3-bit format, further improving compression.
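The two principles can be illustrated with a toy encoder: small groups of 16 weights quantized to 3 bits, a sparse set of outliers kept in full precision, and per-group scales that are themselves quantized to 3 bits. This is a simplified sketch under stated assumptions, not the authors' algorithm. In particular, the outlier rule here is a swapped-in greedy heuristic (pull out the weight farthest from the group mean while doing so cuts the group's squared error by more than a hypothetical `gain` factor); the paper selects outliers by their sensitivity measured with calibration data. Zero points stay in full precision for brevity, and all function names are hypothetical.

```python
import random

def minmax_params(values, bits):
    """Asymmetric min-max (scale, zero) for a `bits`-bit grid."""
    lo, hi = min(values), max(values)
    return (hi - lo) / (2 ** bits - 1) or 1e-12, lo  # guard against constant input

def quantize(values, scale, zero, bits):
    """Round-to-nearest codes, clamped to [0, 2^bits - 1]."""
    top = 2 ** bits - 1
    return [min(top, max(0, round((v - zero) / scale))) for v in values]

def sq_error(values, bits):
    """Squared error of quantizing `values` on their own min-max grid."""
    scale, zero = minmax_params(values, bits)
    codes = quantize(values, scale, zero, bits)
    return sum((v - (zero + c * scale)) ** 2 for v, c in zip(values, codes))

def encode(weights, group_size=16, bits=3, stat_bits=3, gain=4.0):
    """Toy dense-low-bit + sparse-outlier encoder (illustrative only)."""
    outliers, groups = {}, []  # outliers: index -> full-precision value
    for start in range(0, len(weights), group_size):
        idx = list(range(start, min(start + group_size, len(weights))))
        kept = list(idx)
        while len(kept) > 2:  # assumed greedy outlier rule, see lead-in
            mean = sum(weights[i] for i in kept) / len(kept)
            cand = max(kept, key=lambda i: abs(weights[i] - mean))
            rest = [i for i in kept if i != cand]
            err_kept = sq_error([weights[i] for i in kept], bits)
            err_rest = sq_error([weights[i] for i in rest], bits)
            if err_rest * gain >= err_kept:
                break
            outliers[cand] = weights[cand]
            kept = rest
        scale, zero = minmax_params([weights[i] for i in kept], bits)
        groups.append((idx, scale, zero))
    # Second level: the per-group scales are themselves quantized to `stat_bits` bits.
    s_scale, s_zero = minmax_params([s for _, s, _ in groups], stat_bits)
    dense = []
    for idx, scale, zero in groups:
        (s_code,) = quantize([scale], s_scale, s_zero, stat_bits)
        q_scale = s_zero + s_code * s_scale  # scale as reconstructed from its 3-bit code
        dense.append((idx, s_code, zero, quantize([weights[i] for i in idx], q_scale, zero, bits)))
    return dense, (s_scale, s_zero), outliers

def decode(dense, stats, outliers, n):
    """Dequantize the dense 3-bit part, then overlay the sparse outliers."""
    s_scale, s_zero = stats
    out = [0.0] * n
    for idx, s_code, zero, codes in dense:
        scale = s_zero + s_code * s_scale
        for i, c in zip(idx, codes):
            out[i] = zero + c * scale
    for i, v in outliers.items():
        out[i] = v  # high-precision values replace their dense approximations
    return out

random.seed(0)
w = [random.gauss(0.0, 0.02) for _ in range(256)]  # synthetic weights
w[37], w[200] = 0.5, -0.4                           # plant two large outliers
dense, stats, outliers = encode(w)
w_hat = decode(dense, stats, outliers, len(w))
print("outlier indices:", sorted(outliers))
print("max |error|:", max(abs(a - b) for a, b in zip(w, w_hat)))
```

Without the outlier split, a single weight like 0.5 would stretch its group's 3-bit range and crush the other 15 weights onto one or two levels; keeping it aside lets the remaining weights use a much finer grid.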

To convert a pretrained LLM into the SpQR format, the researchers use an extended version of the post-training quantization (PTQ) approach. Inspired by GPTQ, the method passes calibration data through the uncompressed model. With SpQR, an LLM with 33 billion parameters can run on a single 24 GB consumer GPU without degrading model quality, and at 4.75 bits per parameter SpQR even provides a 15% speedup. This makes powerful LLMs accessible on consumer hardware without a quality penalty.
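A quick back-of-the-envelope check shows why 4.75 bits per parameter matters for a 24 GB card. The sketch counts weight storage only (in decimal gigabytes) and ignores activations, the KV cache, and framework overhead, which also need GPU memory; the function name is hypothetical.

```python
def weight_gb(n_params, bits_per_param):
    """Weight storage in decimal GB (1e9 bytes); ignores activations and KV cache."""
    return n_params * bits_per_param / 8 / 1e9

n_params = 33e9  # 33 billion parameters
for bits in (16, 4.75):
    print(f"{bits:>5} bits/param -> {weight_gb(n_params, bits):.1f} GB")
```

At 16 bits the weights alone need about 66 GB, far beyond a single consumer GPU; at 4.75 bits they shrink to about 19.6 GB, leaving a few gigabytes of headroom on a 24 GB card.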

SpQR also comes with efficient algorithms for encoding weights into its format and decoding them at runtime, designed to preserve as much of its memory savings as possible. In addition, a dedicated GPU inference algorithm for SpQR enables faster inference than 16-bit baselines at comparable accuracy. As a result, SpQR achieves memory compression of more than 4x, making it well suited for devices with limited memory.
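Where does "more than 4x" come from? A simplified bit budget for a dense-plus-sparse format adds the per-weight bits, the per-group statistics spread over the group, and the cost of each outlier. Every default below is an assumption for illustration: 3-bit weights, groups of 16 with a 3-bit scale and a 3-bit zero point each, 1% outliers, and 32 bits per outlier (for example a 16-bit value plus a 16-bit index). Second-level statistics are ignored, so this is not the paper's exact accounting.

```python
def avg_bits_per_param(w_bits=3, group_size=16, stat_bits=3,
                       outlier_fraction=0.01, bits_per_outlier=32):
    """Simplified average storage per weight for dense low-bit + sparse outliers."""
    dense = w_bits + 2 * stat_bits / group_size     # weight code + amortized scale and zero
    sparse = outlier_fraction * bits_per_outlier    # amortized outlier storage
    return dense + sparse

bits = avg_bits_per_param()
print(f"{bits:.3f} bits/param, {16 / bits:.2f}x smaller than 16-bit")
```

Under these assumptions the budget is about 3.7 bits per parameter, a ratio of roughly 4.3x versus 16-bit weights. The ratio depends on the configuration: a 4.75-bit setting corresponds to 16 / 4.75, or about 3.4x.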

Conclusion:

The introduction of Sparse-Quantized Representation (SpQR) represents a significant advancement in the compression of Large Language Models (LLMs). The technique addresses the challenge of preserving accuracy and generative quality while compressing LLMs for memory-limited devices. By achieving near-lossless compression and running large models on constrained hardware, SpQR makes it practical to deploy powerful language models in a wider range of applications, improving user experiences and driving innovation in industries that rely on language processing and generation.