TL;DR:

- Google is launching a virtual try-on tool for clothes on real-life models as part of its Google Shopping updates.

- The tool uses a diffusion-based model to predict how garments will drape, fold, and fit on different models in various poses.

- Initially available for women’s tops, the feature will expand to include men’s tops later.

- Google aims to address online shoppers’ concerns about representation and dissatisfaction with clothing purchases.

- Virtual try-on tech is not new, with companies like Amazon, Adobe, and Walmart also exploring this area.

- Models have raised concerns about the potential impact of AI-generated models on traditional photo shoots.

- Google emphasizes its use of diverse models and is also launching AI-powered filtering options for clothing searches.

- The introduction of virtual try-on and AI-powered filters marks a significant step forward in online clothes shopping.

Main AI News:

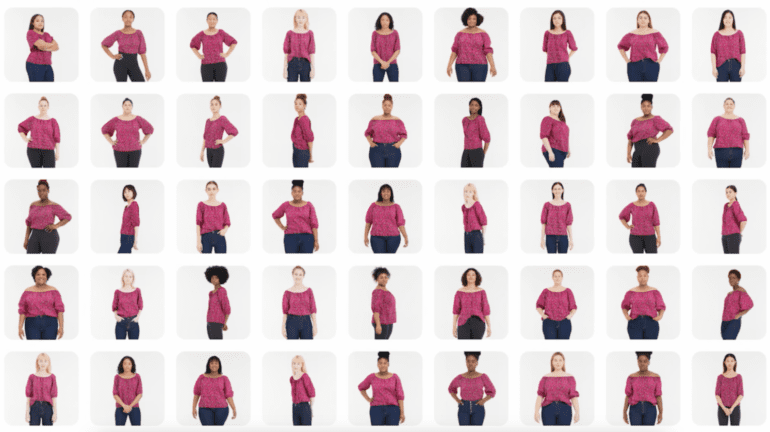

In its ongoing push into generative AI, Google is introducing a shopping feature that shows clothes on a lineup of real-life fashion models. Part of a broader series of updates to Google Shopping, the new virtual try-on tool for apparel uses generative AI to predict how garments would fit and look on a diverse set of models in various poses.

The virtual try-on capability is built on a diffusion-based model that Google developed in-house. Diffusion models, the same family that includes Stable Diffusion and DALL-E 2, learn to gradually remove noise from a starting image made entirely of random noise, refining it step by step until it approaches the target image. Google's model applies this approach to clothing, predicting how a garment drapes, folds, clings, and stretches, and where it forms wrinkles and shadows.
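The step-by-step denoising described above can be sketched in a few lines. This is a minimal, illustrative DDPM-style sampler on a toy three-value "image", not Google's model: the trained noise-prediction network is replaced by a hypothetical `oracle_noise` helper that already knows the target, and the names `linear_beta_schedule` and `sample`, along with the schedule values, are assumptions chosen for illustration. It shows only the mechanics of moving from pure noise toward a target through many small denoising steps.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.05):
    """Per-step noise variances beta_t, spaced linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def oracle_noise(x_t, target, alpha_bar_t):
    """Hypothetical stand-in for a trained network that predicts the added noise.

    Assumes x_t = sqrt(alpha_bar_t) * target + sqrt(1 - alpha_bar_t) * noise
    and solves for the noise exactly, because it is handed the target.
    """
    s, n = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(xt - s * x0) / n for xt, x0 in zip(x_t, target)]


def sample(target, num_steps=200, seed=0):
    """Run a DDPM-style reverse process from pure noise; return the result and per-step errors."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:  # cumulative product of the alphas
        prod *= a
        alpha_bars.append(prod)

    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    errors = []
    for t in reversed(range(num_steps)):
        eps_hat = oracle_noise(x, target, alpha_bars[t])
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # DDPM mean update: subtract the scaled predicted noise, then rescale.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]  # re-inject a little noise
        else:
            x = mean  # the final step adds no noise
        errors.append(max(abs(xi - x0) for xi, x0 in zip(x, target)))
    return x, errors


final, errors = sample([0.2, -0.5, 0.9])
print(f"max error after first step: {errors[0]:.3f}, after last step: {errors[-1]:.1e}")
```

In a real system, `oracle_noise` would be a trained network, and for virtual try-on it would presumably be conditioned on the person and garment images; that learned prediction, not an exact reconstruction, is what would capture drape and fold behavior.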

To train the model, Google used many pairs of images, each showing a person wearing a specific garment in two different poses, for example standing sideways in one image and facing forward in the other. Seeing how the same clothing behaves across poses makes the model more robust. Google then repeated the process with random pairings of images spanning different garments and people, aiming to reduce visual defects such as misshapen or unnatural folds and make the try-on results more realistic.
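The two kinds of training pairs described above can be illustrated with a small data-preparation sketch. Everything here is hypothetical: the `Photo` record and the helpers `same_outfit_pose_pairs` and `random_pairs` are assumptions for illustration, and the article does not say how Google stores its images or exactly how the random pairs are used during training.

```python
import itertools
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    # Hypothetical record: one photo of one person wearing one garment in one pose.
    person_id: str
    garment_id: str
    pose: str


def same_outfit_pose_pairs(photos):
    """Pair photos of the same person in the same garment, but in different poses."""
    groups = defaultdict(list)
    for p in photos:
        groups[(p.person_id, p.garment_id)].append(p)
    pairs = []
    for group in groups.values():
        for a, b in itertools.combinations(group, 2):
            if a.pose != b.pose:
                pairs.append((a, b))
    return pairs


def random_pairs(photos, count, seed=0):
    """Draw pairs of distinct, randomly chosen photos, mixing garments and people."""
    rng = random.Random(seed)
    return [tuple(rng.sample(photos, 2)) for _ in range(count)]


photos = [
    Photo("ana", "tee-01", "front"),
    Photo("ana", "tee-01", "side"),
    Photo("ben", "shirt-07", "front"),
    Photo("ben", "shirt-07", "back"),
]
print(len(same_outfit_pose_pairs(photos)))  # 2
print(len(random_pairs(photos, 3)))  # 3
```

Grouping by (person, garment) before pairing guarantees that each same-outfit pair differs only in pose, which is the property the paired training data relies on.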

Starting today, shoppers in the United States can use Google Shopping to virtually try on women’s tops from brands including Anthropologie, Everlane, H&M, and LOFT. Products that support the feature carry a new “Try On” badge in Google Search. Virtual try-on for men’s tops is expected to follow later this year.

In a blog post shared with TechCrunch, Lilian Rincon, Senior Director of Consumer Shopping Products at Google, explained why the feature matters. Shopping for clothes in a physical store lets people see right away whether something suits them, she noted, but online shoppers lack that option. According to a survey, 42% of online shoppers feel underrepresented by model images, and 59% are dissatisfied when an item they bought looks different on them than they expected. Rincon said virtual try-on is meant to give shoppers more confidence when buying clothes online.

It is worth noting that virtual try-on technology is not entirely novel. Companies such as Amazon, Adobe, and Walmart have long been experimenting with generative apparel modeling. Moreover, AI startup AIMIRR has taken this concept a step further by utilizing real-time garment rendering technology, overlaying clothing images on live videos. Google itself has previously explored virtual try-on through collaborations with industry giants like L’Oréal, Estée Lauder, MAC Cosmetics, Black Opal, and Charlotte Tilbury. These collaborations allowed users to try on makeup shades across a diverse range of models, featuring various skin tones.

However, as generative AI continues to penetrate the fashion industry, it faces resistance from models who argue that it exacerbates existing inequalities. Models often operate as independent contractors, grappling with high agency commission fees, business expenses, and the need for promotional materials to secure job opportunities. Furthermore, the lack of diversity in the modeling industry remains a pressing concern. A survey conducted in 2016 revealed that a staggering 78% of models featured in fashion advertisements were white.

Levi’s, among others, has explored using AI to create customized AI-generated models. Defending the move, Levi’s said the technology would increase the representation of diverse models wearing its products. Critics, however, asked why the brand didn’t simply hire models with the characteristics it wanted to showcase. Rincon highlighted Google’s commitment to using real, diverse models spanning a wide range of sizes, ethnicities, skin tones, body shapes, and hair types, but she did not directly address how the feature might affect photo shoot opportunities for models.

Alongside virtual try-on, Google is launching filtering options for clothing searches, powered by AI and visual matching algorithms. The filters, available within product listings on Google Shopping, let users narrow their searches by color, style, and pattern. Rincon said they are meant to replicate the help of a store associate who suggests alternatives based on what a shopper has already tried on, giving online shoppers an extra helping hand.

Google’s new AI-powered try-on feature, which taps generative AI to adapt clothing to different models. Source: Yahoo

Conclusion:

Google’s launch of a virtual try-on tool for apparel is a notable step for online fashion retail. By using generative AI and diffusion models to show clothes on real-life models, Google aims to address shoppers’ concerns about representation and their dissatisfaction with purchases that look different than expected. The feature could improve the customer experience, and it reflects AI’s growing role in the fashion industry.

While it opens up new possibilities, debate continues over its implications for professional models and the need to ensure diversity and inclusivity. Even so, this advancement, together with AI-powered filtering options, marks a significant step forward for online clothing shopping, giving shoppers greater confidence and convenience.