TL;DR:

- “Blackout Diffusion” is a new generative AI framework for image generation.

- It generates images without requiring a “random seed” of input noise to get started, which sets it apart from other diffusion models.

- Its output quality is comparable to that of existing diffusion models such as DALL-E and Midjourney, while it requires fewer computational resources.

- Generative modeling can assist with tasks across industries, including generating software code, legal documents, and art.

- Because it operates in discrete spaces, it opens up possibilities for text and scientific applications.

- The team tested Blackout Diffusion on standard datasets: MNIST, CIFAR-10, and CelebA.

- It dispels misconceptions about diffusion models and lays the groundwork for scientific applications.

- Generative diffusion modeling could speed up scientific simulations on supercomputers, reducing the carbon footprint of computational science.

Main AI News:

“Blackout Diffusion,” a new artificial intelligence framework presented at the International Conference on Machine Learning, takes a different approach to generative modeling. Unlike earlier diffusion models, this machine-learning algorithm does not need a “random seed” to start the image generation process.

Blackout Diffusion is comparable in output quality to existing diffusion models such as DALL-E and Midjourney, but it requires fewer computational resources to achieve similar results. The implications extend beyond image generation, with potential uses in fields ranging from software development and legal documentation to art.

Javier Santos, an AI researcher at Los Alamos National Laboratory and co-author of Blackout Diffusion, commented, “Generative modeling is bringing in the next industrial revolution with its capability to assist many tasks, such as generation of software code, legal documents, and even art.” Mr. Santos continued, emphasizing the vast potential of generative modeling for scientific discoveries and practical applications across domains.

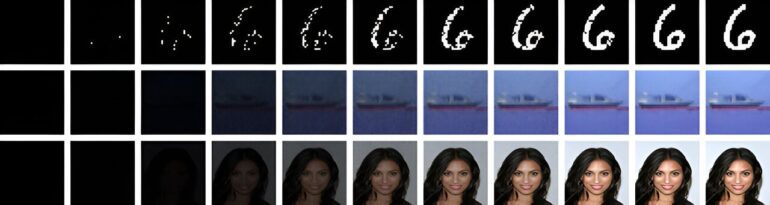

How does it work? During training, diffusion models, including Blackout Diffusion, begin with an image and iteratively degrade it until the original becomes unrecognizable. Throughout this transformation, the model learns how to reverse the process. Conventional diffusion models then generate a new image by starting from a sample of random noise and running the learned reversal. The key differentiator is that Blackout Diffusion doesn’t rely on input noise: generation starts from an empty, fully black image, so no random starting sample is needed.
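To make the forward (image-destroying) half of that description concrete, here is a minimal sketch in Python. It rests on an assumption the article does not spell out: that each unit of pixel intensity decays independently, surviving to time `t` with probability `exp(-t)`, so every pixel drifts toward 0 (black). The function name `blackout_forward` and the toy four-pixel image are hypothetical, chosen only for illustration, and this is not the authors' implementation.

```python
import math
import random


def blackout_forward(pixels, t, rng):
    """Corrupt integer pixel intensities by independent decay toward 0 (black).

    Assumption (not stated in the article): each unit of intensity survives
    to time t with probability exp(-t), so a pixel with value x0 becomes a
    Binomial(x0, exp(-t)) draw.
    """
    keep_prob = math.exp(-t)
    return [
        # Count how many of the x0 intensity units survive.
        sum(1 for _ in range(x0) if rng.random() < keep_prob)
        for x0 in pixels
    ]


rng = random.Random(0)
image = [0, 64, 128, 255]  # toy 4-pixel "image" with 8-bit intensities
for t in (0.0, 0.5, 2.0, 8.0):
    print(t, blackout_forward(image, t, rng))
```

At `t = 0` the image comes back unchanged, and for large `t` essentially every pixel reaches 0. The generative model's job is the reverse direction: starting from the all-black image and learning how to restore intensity step by step.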

Yen-Ting Lin, the lead physicist behind the Blackout Diffusion collaboration at Los Alamos, pointed out, “We showed that the quality of samples generated by Blackout Diffusion is comparable to current models using a smaller computational space.” This means that Blackout Diffusion offers efficiency without compromising on quality, a promising proposition for industries seeking streamlined AI-powered solutions.

A fundamental difference between Blackout Diffusion and its counterparts lies in the space in which they operate. Traditional generative diffusion models function in continuous spaces, which, while versatile, have limitations in scientific applications. Lin explained, “In order to run existing generative diffusion models, mathematically speaking, diffusion has to be living on a continuous domain; it cannot be discrete.”

Blackout Diffusion, on the other hand, operates in discrete spaces, opening up exciting possibilities in various applications, including text and scientific research. The ability to work with discrete data can have a transformative impact on fields like computational science and data analysis.
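The continuous-versus-discrete distinction can be illustrated with a small Python sketch. It contrasts one step of Gaussian noising, which pushes an integer pixel value into the real numbers, with one step of a discrete corruption that keeps the value an integer count. The thinning rule used for the discrete step is an assumption made for illustration, and both function names are hypothetical.

```python
import random


def gaussian_step(x, sigma, rng):
    """Continuous-space corruption: add Gaussian noise; the result is a real number."""
    return x + rng.gauss(0.0, sigma)


def thinning_step(x, keep_prob, rng):
    """Discrete-space corruption (assumed rule): keep each intensity unit
    with probability keep_prob; the result is an integer in [0, x]."""
    return sum(1 for _ in range(x) if rng.random() < keep_prob)


rng = random.Random(0)
pixel = 128  # an 8-bit intensity
print(gaussian_step(pixel, 10.0, rng))  # a float, generally not a valid pixel value
print(thinning_step(pixel, 0.5, rng))   # an int between 0 and 128
```

Data that is inherently a count or a category (pixel levels, tokens, molecule counts) has no natural meaning for the in-between values the Gaussian step produces, which is why a diffusion process defined directly on discrete states is attractive for such data.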

To validate Blackout Diffusion, the research team tested it on standard benchmark datasets, including the Modified National Institute of Standards and Technology (MNIST) database, CIFAR-10, and the CelebFaces Attributes (CelebA) dataset. These tests not only demonstrated its capabilities but also debunked misconceptions about how diffusion models function internally.

In addition, the team laid the groundwork for future scientific applications by providing essential design principles. Lin noted, “This demonstrates the first foundational study on discrete-state diffusion modeling and points the way toward future scientific applications with discrete data.”

One of the most promising aspects of generative diffusion modeling is its potential to accelerate scientific simulations on supercomputers, ultimately contributing to scientific progress while reducing the carbon footprint of computational science. Fields like subsurface reservoir dynamics, drug discovery, and gene expression analysis are poised to benefit immensely from this innovation.

Conclusion:

The emergence of “Blackout Diffusion” marks a significant advancement in the field of AI image generation. Its efficiency, ability to operate in discrete spaces, and potential applications across industries, including scientific research, position it as a transformative force. This innovation has the potential to reshape markets by streamlining AI-powered solutions, reducing resource requirements, and accelerating scientific progress. Businesses and researchers should closely monitor and leverage the capabilities of Blackout Diffusion to unlock new opportunities for innovation and efficiency.