TL;DR:

- A majority of US adults (58%) believe AI tools will amplify misinformation in the 2024 presidential election.

- Only 30% of American adults have used AI chatbots or image generators, and fewer than half (46%) have encountered information about AI tools.

- Strong bipartisan consensus against political candidates using AI for deceptive media in campaigns.

- Concerns were raised after AI’s use in the Republican presidential primary, including AI-generated ads and deepfake imagery.

- Americans are more likely to turn to news media, friends and family, or social media for election information than to AI chatbots.

- Skepticism is high: 61% have little or no confidence in the accuracy and reliability of information provided by AI chatbots.

- Support across political lines for regulations on AI-generated content, including bans and labeling requirements.

- President Biden’s executive order sets safety and security standards for AI and directs the Commerce Department to issue guidelines for labeling and watermarking AI-generated content.

- Americans see the fight against AI-generated misinformation as a responsibility shared by technology companies, the news media, social media companies, and the federal government.

Main AI News:

As the 2024 presidential election approaches, a majority of American adults worry that artificial intelligence (AI) tools will amplify misinformation on an unprecedented scale. That concern emerges from a recent poll by The Associated Press-NORC Center for Public Affairs Research and the University of Chicago Harris School of Public Policy.

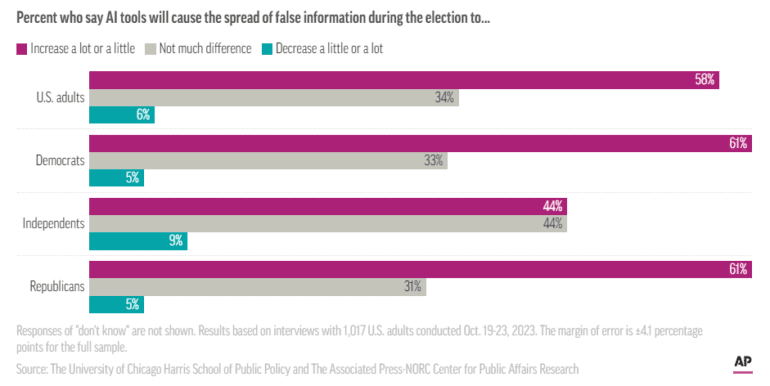

According to the poll, 58% of adults believe that AI tools, which can micro-target political audiences, churn out persuasive messages en masse, and generate realistic fake images and videos in seconds, will increase the spread of false and misleading information during the upcoming election. By contrast, only 6% think AI will help reduce misinformation, while one-third say it will make little difference.

As Rosa Rangel, 66, of Fort Worth, Texas, puts it, “Look what happened in 2020 — and that was just social media.” Rangel, a Democrat who saw a flood of falsehoods on social media in 2020, expects AI to make things worse in 2024, like a pot on the verge of boiling over.

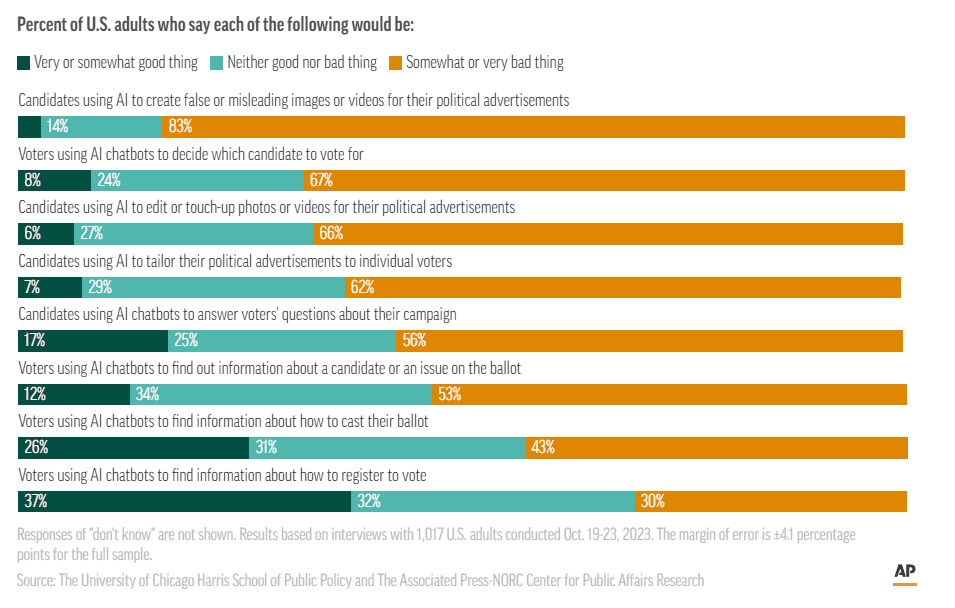

Surprisingly, only 30% of American adults have used AI chatbots or image generators, and fewer than half (46%) have encountered information about AI tools. Even so, a consensus spanning political affiliations emerges against candidates using AI. Majorities of respondents disapprove of candidates using AI to create deceptive media for political advertisements (83%), manipulate photos or videos for political campaigns (66%), tailor political ads to individual voters (62%), or answer voters’ questions via chatbots (56%).

This bipartisan aversion comes after AI had already been deployed in the Republican presidential primary. In April, the Republican National Committee released an entirely AI-generated advertisement depicting a dystopian future if President Joe Biden were re-elected. The campaign of Florida’s Republican governor, Ron DeSantis, similarly used AI-generated images of former President Donald Trump with Dr. Anthony Fauci. And Never Back Down, a super PAC supporting DeSantis, used AI voice-cloning technology to imitate Trump’s voice in a social media post.

Andie Near, a 42-year-old from Holland, Michigan, who typically supports Democrats, wants campaigns to focus on candidates’ merits rather than on their ability to frighten voters. She acknowledges AI’s creative potential but argues that misusing it can make conventional attack ads even more damaging.

Thomas Besgen, a 21-year-old Republican and mechanical engineering student at the University of Dayton in Ohio, strongly condemns campaigns resorting to deepfake audio or imagery to deceive voters. He advocates for a ban on deepfake advertisements or, failing that, stringent labeling requirements identifying them as AI-generated content. The Federal Election Commission is presently deliberating a petition urging it to regulate AI-generated deepfakes in political advertising ahead of the 2024 election.

Despite their skepticism about AI’s role in politics, respondents like Besgen are enthusiastic about AI’s broader potential for the economy and society. They use AI tools for a range of purposes, from exploring historical topics to generating imaginative images of the future. Still, the poll shows that Americans are more likely to turn to established sources such as news media (46%), friends and family (29%), or social media (25%) for information about the presidential election than to AI chatbots.

When it comes to the trustworthiness of information provided by AI chatbots, most respondents are skeptical. Only 5% are extremely or very confident that AI-generated information is factual, while 33% are moderately confident. By contrast, 61% have little or no confidence in the reliability of such information.

This skepticism matches warnings from AI experts against using chatbots to look up information. These models are built to predict the most plausible next word in a sentence, which lets them mimic writing styles convincingly but also makes them prone to fabricating information.

Respondents from both major political parties generally support regulating AI. They are more likely to view measures to ban or label AI-generated content positively than negatively, whether those measures come from tech companies, the federal government, social media firms, or news outlets. About two-thirds favor government bans on AI-generated content containing false or misleading images in political advertisements, and a similar share want technology companies to label all AI-generated content on their platforms.

President Biden has taken a significant step by signing an executive order to guide the development of AI technology, setting safety and security standards and directing the Commerce Department to issue guidelines for labeling and watermarking AI-generated content.

Most Americans also see combating AI-generated false or misleading information during the 2024 presidential election as a shared responsibility. About 63% say technology companies bear a great deal of that responsibility, while about half say the same of the news media (53%), social media companies (52%), and the federal government (49%).

Although Democrats are somewhat more likely to emphasize the responsibility of social media companies, there is consensus across the political spectrum on the responsibilities of technology firms, the news media, and the federal government. The prevailing sentiment underscores the need for strong measures to safeguard the integrity of democratic processes amid AI’s growing influence on electoral campaigns.

Conclusion:

The public’s growing apprehension about AI’s potential to exacerbate misinformation in the 2024 presidential election signals a need for caution and regulation in the AI-driven political advertising landscape. The strong bipartisan consensus against deceptive AI media in campaigns underscores the importance of transparency and ethical use of AI in political contexts. For the market, this suggests a growing demand for AI tools and services that focus on ensuring accuracy, transparency, and responsible use, particularly in political and election-related applications. Companies operating in this space should prioritize building trust and adhering to regulatory guidelines to address these concerns.