- Abacus.AI unveils Smaug-Llama-3-70B-Instruct, an open-source conversational model positioned as a rival to GPT-4 Turbo.

- The model targets multi-turn conversation handling, aiming to give more contextually relevant responses than its predecessors.

- It uses a tailored training approach and new datasets, and it works with popular frameworks such as the transformers library.

- Reported results on MT-Bench and Arena Hard show gains in context preservation and on complex tasks.

Main AI News:

The integration of artificial intelligence (AI) has transformed numerous sectors by introducing sophisticated models for natural language processing (NLP). NLP enables computers to comprehend, interpret, and respond to human language in meaningful ways. Its applications span text generation, translation, and sentiment analysis, with a marked impact on domains like healthcare, finance, and customer service. The evolution of NLP models continues to push the boundaries of what AI can accomplish in understanding and generating human language.

However, despite these strides, the development of models adept at handling intricate multi-turn conversations poses an ongoing challenge. Existing models often struggle to maintain context and coherence during prolonged interactions, resulting in subpar performance in real-world scenarios. Sustaining a coherent conversation across multiple turns holds paramount importance for applications such as customer service bots, virtual assistants, and interactive learning platforms.

Current methodologies for enhancing AI conversation models involve fine-tuning on diverse datasets and integrating reinforcement learning techniques. Popular models like GPT-4 Turbo and Claude-3-Opus have set the performance benchmarks, yet they still face hurdles in managing complex dialogues and ensuring consistency. These models typically rely on extensive datasets and sophisticated algorithms to augment their conversational capabilities. Even so, maintaining context over extended conversations remains a significant obstacle, which leaves room for further improvement in handling dynamic and contextually rich interactions.

Abacus.AI researchers have unveiled the Smaug-Llama-3-70B-Instruct model, a promising addition to the open-source landscape that is positioned to rival GPT-4 Turbo. The model aims to improve performance in multi-turn conversations through a new training approach. Abacus.AI’s strategy centers on enhancing the model’s capacity to comprehend and generate contextually relevant responses, surpassing previous models in the same category. Smaug-Llama-3-70B-Instruct is built on Meta-Llama-3-70B-Instruct and incorporates advancements intended to let it outperform that base model.

The Smaug-Llama-3-70B-Instruct model leverages advanced techniques and novel datasets to improve on its base model. Researchers have employed a tailored training protocol that prioritizes real-world conversational data, so that the model can handle diverse and complex interactions. The model works with popular frameworks such as the transformers library, which means it can be loaded with standard tooling and deployed across various text-generation tasks. The transformer architecture underlying the model is well suited to training on large datasets, which supports its ability to comprehend and produce detailed, nuanced conversational exchanges.
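As a rough illustration of what using the model through transformers for a multi-turn exchange might look like, here is a minimal sketch. The repository id `abacusai/Smaug-Llama-3-70B-Instruct`, the helper names (`start_conversation`, `add_turn`, `generate_reply`), and the example dialogue are assumptions made for illustration and do not come from the announcement. The sketch also assumes the tokenizer ships a chat template, as the Llama 3 instruct models do.

```python
from typing import Dict, List

# Assumed Hugging Face Hub repository id; verify it before use.
MODEL_ID = "abacusai/Smaug-Llama-3-70B-Instruct"

Message = Dict[str, str]


def start_conversation(system_prompt: str) -> List[Message]:
    """Begin a chat history with a single system message."""
    return [{"role": "system", "content": system_prompt}]


def add_turn(history: List[Message], role: str, content: str) -> List[Message]:
    """Return a new history with one user or assistant turn appended."""
    if role not in {"user", "assistant"}:
        raise ValueError(f"unsupported role: {role!r}")
    return history + [{"role": role, "content": content}]


def generate_reply(history: List[Message], max_new_tokens: int = 256) -> str:
    """Generate the next assistant turn (this loads the full 70B model)."""
    # Imported lazily so the helpers above work without these packages.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=MODEL_ID,
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )
    # Render the whole history with the tokenizer's chat template so that
    # earlier turns remain visible to the model.
    prompt = pipe.tokenizer.apply_chat_template(
        history, tokenize=False, add_generation_prompt=True
    )
    outputs = pipe(prompt, max_new_tokens=max_new_tokens, return_full_text=False)
    return outputs[0]["generated_text"]


# Build a short multi-turn history; no model is loaded at this point.
history = start_conversation("You are a concise support assistant.")
history = add_turn(history, "user", "My order arrived damaged.")
history = add_turn(history, "assistant", "Sorry about that. Could you share the order number?")
history = add_turn(history, "user", "It is the one I placed last Tuesday.")
print(len(history), "messages ready for generation")
```

Calling `generate_reply(history)` would then produce the next assistant turn, although a 70B-parameter model needs substantial GPU memory. A real deployment would also create the pipeline once and reuse it instead of rebuilding it on every call.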

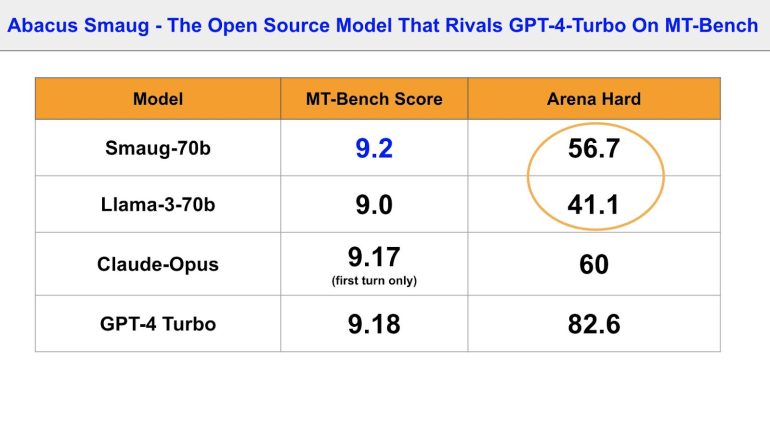

The efficacy of the Smaug-Llama-3-70B-Instruct model is measured on benchmarks such as MT-Bench and Arena Hard. On MT-Bench, the model scores 9.4 in the first turn and 9.0 in the second, for an average of 9.2, compared with the 9.2 and 9.18 cited for Llama-3 70B and GPT-4 Turbo, respectively. At this rounding, Smaug's average edges out GPT-4 Turbo and is level with the figure given for Llama-3 70B. These scores speak to the model’s ability to preserve context and deliver coherent responses across turns. MT-Bench results are reported to correlate well with human evaluations, and they indicate that Smaug handles straightforward prompts well.
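The MT-Bench arithmetic can be checked with a few lines of Python. This is only a sketch that uses the scores quoted in this article. The variable names are illustrative, and the comparison figures are taken as reported here, not independently verified.

```python
# MT-Bench scores as quoted in the article (judge scores on a 1-10 scale).
smaug_turns = [9.4, 9.0]
reported_averages = {"Llama-3 70B": 9.2, "GPT-4 Turbo": 9.18}

# The MT-Bench average is the mean of the per-turn scores.
smaug_average = sum(smaug_turns) / len(smaug_turns)

# Margin of Smaug's average over each reported competitor average.
margins = {
    name: round(smaug_average - score, 2)
    for name, score in reported_averages.items()
}

print(f"Smaug average: {smaug_average:.2f}")
for name, margin in margins.items():
    print(f"Margin over {name}: {margin:+.2f}")
```

The margins are small. At the precision quoted, the gap to GPT-4 Turbo is a few hundredths of a point, and the gap to the Llama-3 70B figure disappears.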

Nevertheless, real-world tasks demand intricate reasoning and planning, which MT-Bench does not fully address. On Arena Hard, a newer benchmark that gauges an LLM’s ability to tackle complex tasks, Smaug shows a significant gain over Llama-3, scoring 56.7 compared to Llama-3’s 41.1. This improvement points to the model’s aptitude for more sophisticated and agentic tasks.
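To put the Arena Hard gap in perspective, the short sketch below computes the absolute and relative improvement from the two scores quoted above. The variable names are illustrative only.

```python
# Arena Hard scores as quoted in the article.
smaug_score = 56.7
llama3_score = 41.1

# Absolute gain in points and gain relative to the Llama-3 baseline.
absolute_gain = smaug_score - llama3_score
relative_gain_pct = absolute_gain / llama3_score * 100

print(f"Absolute gain: {absolute_gain:.1f} points")
print(f"Relative gain: {relative_gain_pct:.1f}%")
```

That works out to a gain of roughly 15.6 points, or about 38 percent over the Llama-3 score.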

Conclusion:

The introduction of Smaug-Llama-3-70B-Instruct by Abacus.AI marks a notable step forward in open-source conversational AI and a challenge to established models like GPT-4 Turbo. With its improved handling of multi-turn conversations and its reported gains in context preservation and complex tasks, the model raises the bar for open-source offerings and could reshape the landscape of conversational AI solutions. Companies invested in AI development should take note of these advancements and consider the implications for their own product offerings and strategic directions.