- Accenture has launched its AI Refinery framework, built on Nvidia’s AI Foundry service.

- The framework enables enterprises to create and personalize large language models (LLMs) using Llama 3.1.

- Nvidia AI Foundry provides tools and infrastructure for custom AI model development, including foundation models, accelerated computing, expert support, and a partner ecosystem.

- Key components of the AI Refinery framework include domain model customization, the Switchboard Platform for model selection, the Enterprise Cognitive Brain for data indexing, and Agentic Architecture for autonomous AI operation.

- Companies like Amdocs, Capital One, and ServiceNow are already using Nvidia AI Foundry to integrate custom models into their workflows.

- Nvidia NIM inference microservices support Llama 3.1 models in several sizes, offering flexibility for different AI applications.

Main AI News:

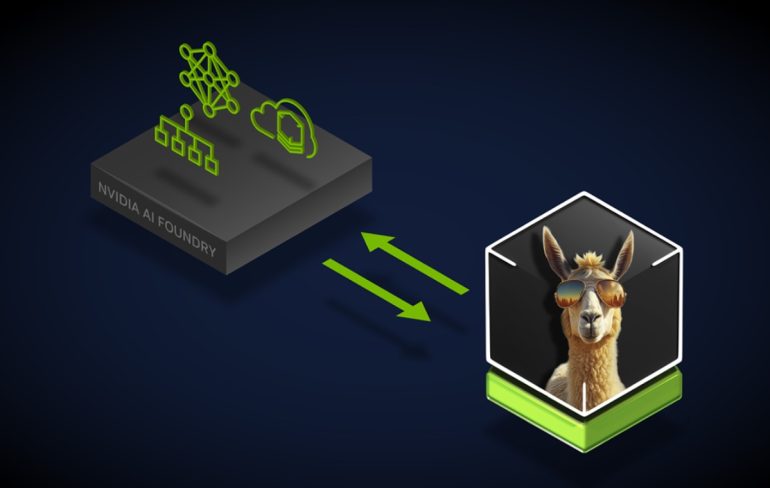

On Tuesday, Accenture Plc unveiled its AI Refinery framework, developed in partnership with Nvidia Corp. Built on Nvidia’s new AI Foundry service, the framework is designed to let enterprises build custom large language models (LLMs) based on Llama 3.1. The initiative focuses on refining and personalizing these models with each company’s proprietary data and processes, so that businesses can create domain-specific generative AI solutions.

The Evolution of Generative AI

Kari Briski, Vice President of AI Software at Nvidia, addressed the growing interest in generative AI during a recent briefing. She explained that although enterprises have already invested heavily in generative AI, its appeal lies in raising productivity by automating repetitive tasks such as summarization and identifying best practices.

“Nvidia AI Foundry is a comprehensive service designed to provide enterprises with the tools and expertise required to develop and deploy custom AI models,” Briski stated. The service combines Nvidia’s accelerated computing with its software tools to provide infrastructure suited to large-scale AI projects. Key features of AI Foundry include:

- Foundation Models: A selection of Nvidia and community models, including Llama 3.1.

- Accelerated Computing: DGX Cloud offers scalable computing resources necessary for extensive AI initiatives.

- Expert Support: Assistance from Nvidia AI Enterprise experts in model development and deployment.

- Partner Ecosystem: Collaborations with industry leaders like Accenture for AI-driven transformation.

Briski noted that evaluating customized models remains a challenge for many customers. To address this, Nvidia offers several evaluation methods, including academic benchmarks, custom evaluation benchmarks, third-party human evaluators, and LLM-based assessments.
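To make the idea of a custom evaluation benchmark concrete, here is a minimal sketch that scores a customized model's answers against reference answers using normalized exact match. It is only an illustration of the general technique, not Nvidia's evaluation tooling; the functions `normalize` and `exact_match_accuracy` and the sample questions are hypothetical.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace so that
    trivially different answers compare as equal."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return re.sub(r"\s+", " ", text).strip()


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that match their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(predictions)


# Hypothetical domain-specific benchmark: reference answers and model outputs.
references = ["Net 30", "Tier 2 support", "Quarterly"]
predictions = ["net 30.", "Tier 3 support", "quarterly"]
print(exact_match_accuracy(predictions, references))  # two of the three answers match
```

Exact match is strict; for open-ended outputs, the human or LLM-based assessments mentioned above are usually a better fit.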

Industry Adoption and Advantages

Several companies, including Amdocs, Capital One, and ServiceNow, are already integrating Nvidia AI Foundry into their operations. These organizations are building custom models tailored to their industries, aiming to gain a competitive edge by embedding their specialized domain knowledge in those models.

Another notable part of Nvidia’s offering is Nvidia NIM, a set of inference microservices that package optimized models in containers accessible through standard APIs. NIM supports the Llama 3.1 8B, 70B, and 405B models, covering needs that range from running on a single GPU to high-accuracy tasks and synthetic data generation.
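Because NIM is reached through a standard API, a client request looks like an ordinary HTTP call. The sketch below builds (without sending) a chat completion request, assuming a Llama 3.1 8B NIM container running locally on port 8000 and exposing an OpenAI-style `/v1/chat/completions` route; the URL, port, and model ID `meta/llama-3.1-8b-instruct` are assumptions to check against the NIM documentation for your deployment.

```python
import json
import urllib.request

# Assumptions (not from the article): a local Llama 3.1 8B NIM container on
# port 8000 serving an OpenAI-style chat completions route.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_ID = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text.
    Requires a running endpoint, so it is not called in this sketch."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]


req = build_chat_request("Summarize our Q3 support tickets in three bullet points.")
print(req.full_url, req.get_method())
```

Under the same assumptions, moving to the 70B or 405B variant would mainly mean pointing at that container and changing the `model` field, which is the flexibility a standard API is meant to provide.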

Accenture’s AI Refinery Framework

Accenture’s AI Refinery framework, which operates on Nvidia AI Foundry, promises to advance enterprise generative AI capabilities. The framework incorporates four key components:

- Domain Model Customization and Training: Allows enterprises to refine LLMs with their data and processes, enhancing model relevance.

- Switchboard Platform: Facilitates model selection and combination based on business context, cost, and accuracy.

- Enterprise Cognitive Brain: Scans and vectorizes corporate data, creating an index that boosts generative AI capabilities.

- Agentic Architecture: Supports autonomous AI operations with minimal human oversight, promoting responsible AI behavior.
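The Enterprise Cognitive Brain is described only as scanning and vectorizing corporate data into an index. The following toy sketch shows that general technique: each document becomes a vector, and queries are matched by cosine similarity. It is not Accenture’s implementation. The term-frequency "embedding", the `VectorIndex` class, and the sample documents are all hypothetical, and a real system would use a learned embedding model and a dedicated vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy vectorizer: a sparse term-frequency vector over lowercase word tokens.
    A production system would use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """Minimal in-memory index: stores (doc_id, vector) pairs and returns
    the documents most similar to a query."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        self._entries.append((doc_id, embed(text)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        q = embed(query)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self._entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Hypothetical corporate documents.
index = VectorIndex()
index.add("hr-001", "Employees accrue vacation days monthly")
index.add("fin-014", "Invoices are paid within thirty days of receipt")
index.add("it-007", "Reset your password through the self-service portal")

top = index.search("how do I reset my password", k=1)
print(top)
```

One common way such an index supports generative AI is retrieval-augmented generation, where the top-ranked documents are passed to the LLM as context for its answer.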

Julie Sweet, Accenture’s Chair and CEO, emphasized the framework’s potential to transform enterprise functions, particularly marketing, as well as its broader applications. Accenture is using the framework internally before deploying it for clients.

Jensen Huang, Nvidia’s Founder and CEO, echoed the sentiment, highlighting that Accenture’s AI Refinery framework will provide businesses with essential resources and expertise to develop custom Llama LLMs.

Conclusion:

The collaboration between Accenture and Nvidia marks a significant step in the generative AI market, giving enterprises tools to build highly specialized LLMs on their own data and processes. Such tailored models could improve operational efficiency and create competitive advantages across industries. As businesses adopt these frameworks, the benefits of deeper model personalization and automation will depend on how well they customize, evaluate, and deploy the resulting models.