- Researchers at Amii have identified a critical issue in machine learning called “loss of plasticity,” where AI systems lose the ability to learn new information over time.

- The issue is particularly problematic for AI systems that must adapt continuously, because it leads to significant performance degradation.

- Current deep learning models, such as ChatGPT, rely on periodic retraining, which is costly and inefficient. This highlights the need for solutions that support continual learning.

- The research team developed a method called “continual backpropagation,” which reinitializes inactive units in the neural network to maintain its learning ability over time.

- The study significantly contributes to the field, urging further exploration of solutions to enable continuous, adaptive learning in AI systems.

Main AI News:

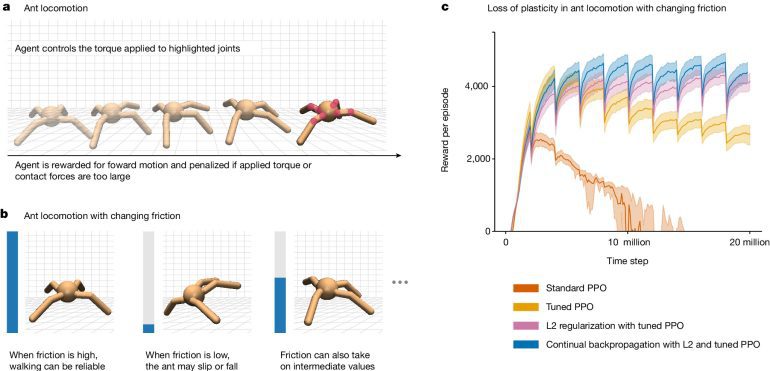

Researchers at the Alberta Machine Intelligence Institute (Amii) have uncovered a critical issue in machine learning, and addressing it could change how AI adapts to real-world challenges. In their study, “Loss of Plasticity in Deep Continual Learning,” published in Nature, the team, including Shibhansh Dohare, J. Fernando Hernandez-Garcia, Qingfeng Lan, Parash Rahman, and Amii Fellows A. Rupam Mahmood and Richard S. Sutton, explored a problem called “loss of plasticity.” This phenomenon occurs when AI systems gradually lose their ability to learn new information, leading to significant performance degradation.

Mahmood emphasizes that this issue is a major obstacle for any AI that needs to adapt continually. Once these systems lose plasticity, they struggle to learn new tasks and even fail to relearn previously acquired knowledge. This challenge is particularly pressing because most current deep learning models, such as ChatGPT, do not engage in continual learning and rely instead on periodic retraining, which is costly and inefficient.

The research team designed experiments to confirm the widespread nature of this problem. They discovered that as deep learning networks lose plasticity, their performance deteriorates with each new task. Recognizing the urgency of this issue, the researchers proposed a solution: “continual backpropagation.” This method periodically reinitializes inactive units in the neural network, allowing it to maintain its learning ability over time.
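To make the idea of reinitializing inactive units concrete, here is a minimal NumPy sketch. It is not the team's published algorithm. It assumes a single hidden layer of ReLU units, treats a unit as "inactive" when its mean activation on a batch is at or below a small threshold, and resets that unit's incoming weights with a He-style random draw while zeroing its outgoing weights. The function names, the threshold, and the inactivity criterion are all illustrative assumptions; the article does not say how the actual method selects units.

```python
import numpy as np


def forward(w_in, b, w_out, x):
    """One hidden ReLU layer followed by a linear output layer."""
    return np.maximum(0.0, x @ w_in + b) @ w_out


def reinit_inactive_units(w_in, b, w_out, x_batch, rng, threshold=1e-8):
    """Reset hidden units that are inactive on x_batch (illustrative only).

    w_in:  (n_inputs, n_hidden) incoming weights
    b:     (n_hidden,) hidden biases
    w_out: (n_hidden, n_outputs) outgoing weights
    Arrays are modified in place; returns the indices of the reset units.
    """
    hidden = np.maximum(0.0, x_batch @ w_in + b)
    # Assumed criterion: a unit is inactive if it (almost) never fires.
    inactive = np.flatnonzero(hidden.mean(axis=0) <= threshold)

    n_inputs = w_in.shape[0]
    scale = np.sqrt(2.0 / n_inputs)  # He-style scale, an assumption
    # Fresh incoming weights give the unit a chance to become useful again.
    w_in[:, inactive] = rng.normal(0.0, scale, size=(n_inputs, inactive.size))
    b[inactive] = 0.0
    # Zero outgoing weights so the reset does not disturb the current output.
    w_out[inactive, :] = 0.0
    return inactive


# Small demonstration with two units forced to be dead via a huge negative bias.
rng = np.random.default_rng(0)
w_in = rng.normal(size=(4, 6))
b = np.zeros(6)
b[[1, 4]] = -1e6
w_out = rng.normal(size=(6, 2))
x = rng.normal(size=(32, 4))

out_before = forward(w_in, b, w_out, x)
reset = reinit_inactive_units(w_in, b, w_out, x, rng)
out_after = forward(w_in, b, w_out, x)
```

In this sketch the dead units contributed nothing before the reset, and their outgoing weights are zero afterwards, so the network's output on the batch is unchanged while the reset units regain the capacity to learn. In a continual learning loop, a check like this would run periodically between ordinary gradient updates.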

While the method is a promising step forward, Sutton acknowledges that future research may uncover even better solutions. The team’s work is a significant contribution, highlighting a fundamental challenge in deep learning and urging the AI community to explore new approaches to enable continuous, adaptive learning in AI systems.

Conclusion:

The discovery of AI’s “loss of plasticity” problem and the proposed solution mark a pivotal moment for the AI market. As industries increasingly rely on AI in dynamic and evolving environments, the ability of AI systems to continually learn and adapt will become a critical competitive advantage. This advancement could lead to more efficient AI models, reducing the need for costly retraining and enabling quicker adaptation to market changes. Companies that invest in developing or adopting such technologies will likely gain a significant edge in innovation and operational efficiency.