TL;DR:

- DMV3D, a collaborative effort by Adobe Research and Stanford, introduces a single-stage category-agnostic diffusion model for 3D generation.

- It utilizes a Large Reconstruction Model (LRM) and multi-view denoiser for efficient noise reduction and high-quality 3D rendering.

- DMV3D can generate 3D Neural Radiance Fields (NeRFs) from text or single-image input, significantly reducing 3D object creation time.

- By integrating 3D NeRF reconstruction and rendering into its denoiser, it streamlines the optimization process and minimizes self-occlusions.

- The model leverages large transformer models to address sparse-view 3D reconstruction, achieving state-of-the-art results in single-image 3D reconstruction.

- DMV3D demonstrates the ability to generate 3D models in just 30 seconds on a single A100 GPU.

- Its innovative approach bridges the gap between 2D and 3D generative models, promising broad applications in 3D vision and graphics.

Main AI News:

In the realm of Augmented Reality (AR), Virtual Reality (VR), robotics, and gaming, the quest for streamlined 3D asset creation has intensified. While the adoption of 3D diffusion models has simplified this intricate process, a significant hurdle remains – the necessity for ground-truth 3D models or point clouds for training, particularly when dealing with real-world images. Additionally, the latent 3D diffusion approach often results in a convoluted and challenging-to-denoise latent space, posing a significant barrier to achieving high-quality rendering.

Existing solutions to this problem often involve time-consuming manual efforts and optimization processes. Enter the collaborative efforts of Adobe Research and Stanford University researchers, aimed at revolutionizing the 3D generation process by making it faster, more realistic, and universally applicable. Their recent breakthrough, DMV3D, introduces a single-stage category-agnostic diffusion model, promising to reshape the landscape of 3D object creation.

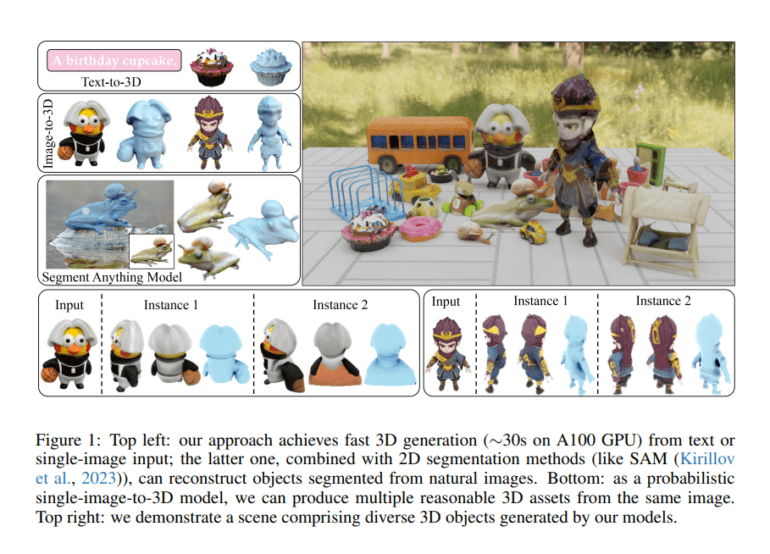

DMV3D’s groundbreaking contributions encompass a pioneering single-stage diffusion framework employing a multi-view 2D image diffusion model for 3D generation. Additionally, it introduces a Large Reconstruction Model (LRM), a multi-view denoiser capable of reconstructing pristine triplane NeRFs from noisy multi-view images. This model presents a versatile probabilistic approach to high-quality text-to-3D generation and single-image reconstruction, with the added benefit of rapid direct model inference, requiring a mere 30 seconds on a single A100 GPU.

One of DMV3D’s remarkable achievements lies in seamlessly integrating 3D NeRF reconstruction and rendering into its denoiser, all without the need for separately training 3D NeRF encoders for latent-space diffusion. This innovation streamlines the per-asset optimization process and relies on a strategically chosen sparse set of four multi-view images encircling an object to effectively capture its 3D structure, minimizing self-occlusions.

Leveraging the power of large transformer models, the researchers tackle the complex task of sparse-view 3D reconstruction. Building upon the foundation of the 3D Large Reconstruction Model (LRM), they introduce a novel joint reconstruction and denoising model capable of handling various noise levels encountered during the diffusion process. This model serves as the multi-view image denoiser within a comprehensive multi-view image diffusion framework.

With training conducted on extensive datasets encompassing synthetic renderings and real-world captures, DMV3D showcases its ability to generate single-stage 3D models in approximately 30 seconds on a single A100 GPU. Moreover, it achieves state-of-the-art results in single-image 3D reconstruction. This pioneering work transcends immediate applications, ushering in a new era of 3D generation by bridging the domains of 2D and 3D generative models, harmonizing 3D reconstruction and generation. The implications of DMV3D extend far beyond its initial applications, offering a promising foundation for addressing diverse challenges in 3D vision and graphics.

Conclusion:

DMV3D represents a groundbreaking advancement in 3D generation, offering a faster, more efficient, and versatile solution for various industries, including AR, VR, robotics, and gaming. Its potential to simplify complex 3D asset creation processes and reduce manual work signifies a significant market opportunity for improved efficiency and creativity in 3D content development.