- Advancements in AI perception are driving the need for machines to comprehend their environment with human-like precision.

- Semantic segmentation, assigning a semantic label to every pixel in an image, is crucial for AI’s perception capabilities.

- Conventional segmentation techniques face challenges in suboptimal conditions, emphasizing the demand for more robust methodologies.

- Multi-modal semantic segmentation combines traditional visual data with additional sources like thermal imaging and depth sensing for a nuanced understanding.

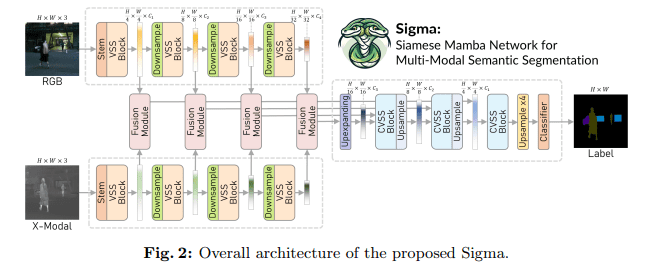

- Sigma, leveraging a Siamese Mamba network architecture, strikes a balance between global contextual understanding and computational efficiency.

- Sigma outperforms existing models in RGB-Thermal and RGB-Depth segmentation tasks, demonstrating superior accuracy with fewer parameters and lower computational demands.

- The Siamese encoder and Mamba fusion mechanism ensure crucial information preservation and seamless integration across different data modalities, leading to remarkably accurate segmentations.

Main AI News:

AI research continues to pursue machines that can understand their surroundings with human-like precision. Central to this effort is semantic segmentation, a task vital to AI’s perception capabilities. It involves assigning a semantic label to every pixel in an image, yielding a pixel-level understanding of the whole scene. However, conventional segmentation techniques often stumble in less-than-optimal conditions, such as poor lighting or occlusion, underscoring the need for more robust methods.
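To make "a label for every pixel" concrete, here is a minimal sketch in plain Python. It assumes a model has already produced a score per class for each pixel; the class names and the numbers are invented for illustration and do not come from Sigma.

```python
# Hypothetical class names, chosen only for illustration.
CLASSES = ["background", "person", "car"]

def label_pixels(scores):
    """Turn per-pixel class scores (H x W x C nested lists) into an H x W label map.

    Each pixel receives the index of its highest-scoring class.
    """
    return [
        [max(range(len(pixel)), key=lambda c: pixel[c]) for pixel in row]
        for row in scores
    ]

# A toy 2 x 2 "image" with made-up scores for the three classes.
scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1]],
    [[0.1, 0.1, 0.8], [0.6, 0.3, 0.1]],
]

label_map = label_pixels(scores)                        # class index per pixel
named = [[CLASSES[i] for i in row] for row in label_map]  # readable names
print(named)
```

The output of a segmentation model is exactly this kind of map, only at full image resolution and with many more classes.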

Enter multi-modal semantic segmentation, an emerging approach that combines traditional visual data with supplementary sources such as thermal imaging and depth sensing. The combination provides a more nuanced view of the environment and improves performance where a single modality falls short. RGB data provides detailed color information, thermal imaging can identify objects by their heat signatures, and depth sensing adds a 3D perspective on the scene.

Despite the potential of multi-modal segmentation, the prevailing approaches, chiefly convolutional neural networks (CNNs) and Vision Transformers (ViTs), have notable limitations. CNNs are constrained by their local receptive field, which limits their ability to capture the broader image context. ViTs can capture global context, but the cost of self-attention grows quadratically with the number of image tokens, which makes them less feasible for real-time applications. These challenges call for a new approach that can exploit multi-modal data efficiently.
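A rough back-of-the-envelope sketch shows why global context is expensive for attention-based models. Self-attention compares every token with every other token, so the work grows with the square of the token count, whereas a model that takes one step per token grows only linearly. The patch size of 16 and the image sizes below are illustrative assumptions, and the counts ignore constant factors.

```python
def token_count(height, width, patch=16):
    """Number of tokens when the image is cut into patch x patch tiles.

    The patch size of 16 is an illustrative assumption.
    """
    return (height // patch) * (width // patch)

def attention_pairs(n_tokens):
    """Self-attention compares every token with every token: n * n pairs."""
    return n_tokens * n_tokens

def scan_steps(n_tokens):
    """A linear-time sequence model takes one step per token."""
    return n_tokens

small = token_count(480, 640)    # 30 * 40 tokens
large = token_count(960, 1280)   # 60 * 80 tokens

# How much more work does doubling the resolution cost?
attention_growth = attention_pairs(large) / attention_pairs(small)
scan_growth = scan_steps(large) / scan_steps(small)
print(attention_growth, scan_growth)
```

Doubling the image side length quadruples the token count, which multiplies the pairwise attention work by sixteen but the per-token work by only four.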

In response, researchers from the Robotics Institute at Carnegie Mellon University and the School of Future Technology at Dalian University of Technology introduced Sigma. Sigma uses a Siamese Mamba network architecture built on Mamba, a selective structured state space model, to balance global contextual understanding against computational efficiency. Unlike CNNs and ViTs, this design offers global receptive field coverage with linear complexity, enabling faster and more accurate segmentation across diverse conditions.
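The idea behind "global coverage with linear complexity" can be illustrated with the simplest possible state space recurrence. The sketch below is not Mamba itself: Mamba makes its parameters depend on the input (the "selective" part) and uses an optimized scan, while this toy version uses fixed scalar values `a`, `b`, and `c` that are assumptions chosen for illustration.

```python
def ssm_scan(xs, a=0.9, b=1.0, c=1.0):
    """Run a scalar linear state space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)

    The loop does one constant-cost step per element, so the total
    cost is linear in the sequence length.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys

# An impulse at the first position still influences later outputs,
# because the state h carries information forward along the sequence.
outputs = ssm_scan([1.0, 0.0, 0.0], a=0.5)
print(outputs)
```

Each output depends on every earlier input through the running state, yet the sequence is processed in a single pass. That combination is what the article contrasts with the local view of CNNs and the quadratic cost of attention.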

In evaluations on RGB-Thermal (RGB-T) and RGB-Depth segmentation tasks, Sigma consistently outperformed existing state-of-the-art models. Notably, in experiments on the MFNet and PST900 datasets for RGB-T segmentation, Sigma achieved higher accuracy, with mean Intersection over Union (mIoU) scores surpassing those of comparable methods. It achieved these results with substantially fewer parameters and lower computational demands, underscoring its potential for real-time applications and devices with limited processing power.
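For readers unfamiliar with the metric, mIoU averages, over all classes, the overlap between predicted and ground-truth pixels divided by their union. The sketch below is a minimal plain-Python version over flattened label lists with made-up labels; benchmark code typically accumulates a confusion matrix over a whole dataset instead, and the choice to skip classes absent from both lists is an assumption of this example.

```python
def mean_iou(pred, target, num_classes):
    """Mean Intersection over Union across classes for flat label lists.

    Classes absent from both prediction and ground truth are skipped.
    """
    ious = []
    for cls in range(num_classes):
        # Pixels where both agree on this class.
        intersection = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
        # Pixels where either one says this class.
        union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0

# Six pixels, three classes; the labels are invented for illustration.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]

score = mean_iou(pred, target, num_classes=3)
print(round(score, 3))
```

Here the per-class IoUs are 1/3, 2/3, and 1/2, which average to 0.5. A perfect prediction scores 1.0.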

The Siamese encoder extracts features from each data modality, which are then fused by a novel Mamba fusion mechanism. This ensures that crucial information from each modality is preserved and integrated. The decoding phase then employs a channel-aware Mamba decoder, which refines the segmentation output by prioritizing the most relevant features in the fused data. This staged design allows Sigma to deliver accurate segmentations even in scenarios where traditional methods falter.
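The encode, fuse, decode flow described above can be sketched for a single pixel in a few lines. This is only a structural illustration under stated assumptions: "Siamese" is taken in its usual sense of two branches sharing one set of weights, the fusion step is a plain average standing in for the Mamba fusion mechanism, and the decoder is a simple argmax rather than a channel-aware Mamba decoder. All function names and numbers are hypothetical.

```python
def encode(features, weights):
    """Toy encoder: scale each channel by a weight."""
    return [f * w for f, w in zip(features, weights)]

def fuse(feat_a, feat_b):
    """Stand-in fusion: element-wise average (NOT the Mamba fusion mechanism)."""
    return [(a + b) / 2 for a, b in zip(feat_a, feat_b)]

def decode(fused):
    """Toy decoder: pick the index of the strongest fused channel as the label."""
    return max(range(len(fused)), key=lambda i: fused[i])

# One set of weights is reused by both branches (the Siamese assumption).
shared_weights = [1.0, 2.0, 0.5]

# Made-up per-pixel inputs for two modalities.
rgb_pixel = [0.2, 0.1, 0.4]
thermal_pixel = [0.0, 0.6, 0.2]

rgb_feat = encode(rgb_pixel, shared_weights)
thermal_feat = encode(thermal_pixel, shared_weights)

label = decode(fuse(rgb_feat, thermal_feat))
print(label)
```

In this toy case the RGB features alone are ambiguous, and the thermal branch tips the decision toward class 1, which mirrors the article's point that a second modality helps where one falls short.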

Source: Marktechpost Media Inc.

Conclusion:

The introduction of Sigma marks a notable step forward in AI perception, particularly in environments where traditional methods falter. Its multi-modal semantic segmentation approach improves accuracy while reducing computational demands, making it well suited to real-time applications and devices with constrained processing power. This advancement opens up opportunities in industries such as autonomous vehicles, surveillance systems, and medical imaging, where precise environmental understanding is paramount. Organizations investing in AI technologies should monitor developments like Sigma closely to stay competitive.