TL;DR:

- AI systems fall broadly into Predictive AI and Generative AI, the latter exemplified by Large Language Models (LLMs).

- Trustworthy AI requires safety, reliability, and resilience, as highlighted by NIST’s AI Risk Management Framework.

- NIST’s Trustworthy and Responsible AI team aims to advance the field of Adversarial Machine Learning (AML) through a comprehensive taxonomy.

- The taxonomy covers ML techniques, attack phases, attacker motives, and attacker knowledge, and it outlines strategies for mitigating AML attacks.

- AML challenges are dynamic and require ongoing consideration throughout AI system development.

- The research aligns with current AML literature and aims to establish a common language and understanding within the AML domain.

Main AI News:

In the fast-evolving field of artificial intelligence (AI), the distinction between Predictive AI and Generative AI has become increasingly clear. Generative AI, most prominently exemplified by Large Language Models (LLMs), creates original content, whereas Predictive AI uses data to forecast outcomes.

As AI systems become integral to a wide range of industries, ensuring their safety, reliability, and resilience is paramount. The NIST AI Risk Management Framework and its AI trustworthiness taxonomy identify these operational attributes as prerequisites for trustworthy AI.

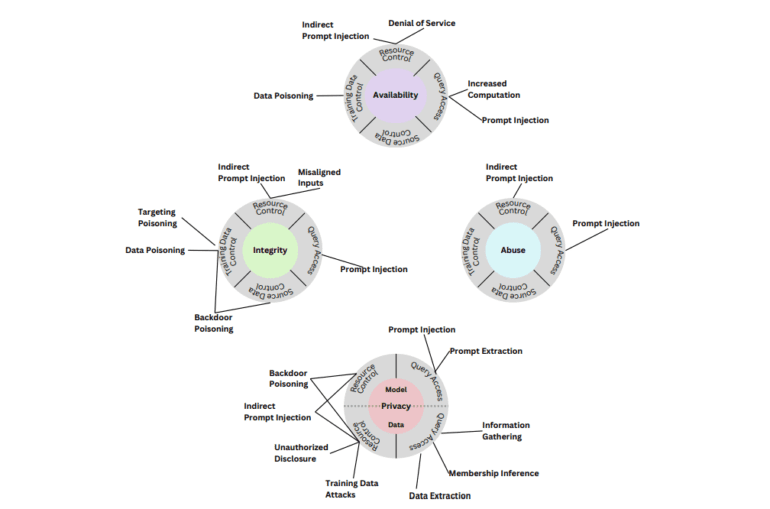

A recent initiative by NIST’s Trustworthy and Responsible AI research team aims to advance the field of Adversarial Machine Learning (AML). Its primary objective is to build a comprehensive taxonomy of AML concepts and to define the associated terminology, drawing on an extensive review of the existing AML literature.

The taxonomy covers the main categories of Machine Learning (ML) techniques, the phases of the attack lifecycle, attackers’ motives and goals, and the knowledge attackers have of the learning process. Beyond the taxonomy itself, the research offers guidance on managing and mitigating the impact of AML attacks.

AML challenges evolve continually, so they must be considered at every stage of AI system development. The ultimate goal is to provide a resource that informs future standards and guidelines for assessing and governing the security of AI systems.

The terminology used in the paper is consistent with the existing AML literature and is accompanied by a glossary of key terms related to AI system security. The team’s aim is to establish a shared vocabulary and understanding within the AML community that can inform future norms and standards. In turn, this should support a coordinated, well-informed response to the security concerns raised by the rapidly evolving AML landscape.

Conclusion:

The development of a comprehensive AML taxonomy and terminology by NIST’s Trustworthy and Responsible AI team is an important milestone toward trustworthy AI systems. The initiative supports efforts to secure AI technologies and lays the groundwork for standardized practices and guidelines in the evolving AML landscape, giving businesses a solid foundation for navigating this dynamic market.