- Eagle (RWKV-5) and Finch (RWKV-6) introduce innovative architectures replacing the Transformer’s attention mechanism.

- Eagle implements multi-headed matrix-valued states and additional gating, while Finch makes its time-mixing and token-shift functions data-dependent.

- Eagle learns a fixed time-mixing weight per channel, while Finch lets these weights vary with the input, so each channel can adapt its memory dynamics to context.

- The RWKV World Tokenizer and RWKV World v2 dataset are introduced to bolster performance across diverse data.

- Eagle and Finch outperform comparably-sized models on multilingual benchmarks, excel in associative recall and long context modeling, and demonstrate efficiency gains in inference and memory usage.

- They showcase proficiency beyond language tasks, with Eagle excelling in music modeling and VisualRWKV performing impressively in visual comprehension benchmarks.

- Despite limitations in text embedding tasks, Eagle and Finch mark a significant advancement in efficient and high-performing language modeling.

Main AI News:

In Natural Language Processing, Large Language Models (LLMs) have ushered in a paradigm shift, yet the prevailing Transformer architecture carries a cost: the compute and memory required by self-attention grow quadratically with sequence length. While sparse attention techniques have sought to mitigate this issue, a new wave of models is making remarkable strides by rethinking the core architecture.

This article covers the research introducing Eagle (RWKV-5) and Finch (RWKV-6), two architectures that replace the conventional Transformer’s attention mechanism with streamlined recurrence modules. Building on RWKV-4, Eagle introduces multi-headed matrix-valued states, a reformulated receptance, and an additional gating mechanism. Finch goes a step further and makes the time-mixing and token-shift functions data-dependent, which allows more dynamic and adaptable modeling.
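To make the idea of a matrix-valued state concrete, here is a minimal sketch of one recurrent step for a single head, written in plain Python. It is an illustration under simplifying assumptions, not the authors' implementation: token shift, gating, and normalization are left out, and the names `eagle_head_step`, `state`, `w`, and `u` are chosen here for readability.

```python
def eagle_head_step(state, r, k, v, w, u):
    """One simplified recurrent step for a single head (illustrative only).

    state : D x D list of lists; rows follow key channels, columns value channels
    r, k, v : receptance, key, and value vectors of length D for the current token
    w : per-channel decay weights in (0, 1), fixed after training in this sketch
    u : per-channel bonus applied to the current token only
    """
    d = len(k)
    out = [0.0] * d
    new_state = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(d):
            kv = k[i] * v[j]  # outer product of key and value
            # Read out: past information in the state plus the boosted current token.
            out[j] += r[i] * (state[i][j] + u[i] * kv)
            # Update: decay the old state per key channel, then add the new outer product.
            new_state[i][j] = w[i] * state[i][j] + kv
    return out, new_state


# Usage: process a short sequence while carrying a fixed-size D x D state.
D = 2
state = [[0.0] * D for _ in range(D)]
for _ in range(3):
    out, state = eagle_head_step(
        state, r=[1.0, 1.0], k=[1.0, 2.0], v=[3.0, 4.0], w=[0.5, 0.5], u=[1.0, 1.0]
    )
```

The state stays D x D no matter how many tokens have been processed, which is where the memory savings over attention come from.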

The two models differ chiefly in how their recurrence weights are set. In Eagle, the time-mixing weights are static: each channel learns its own fixed weight, which governs how information accumulates and fades over time. Finch instead makes these weights time-varying and data-dependent, so each channel can tailor its memory dynamics to the input context. Finch computes these adjustments with low-rank functions modeled on Low Rank Adaptation (LoRA), which add context-dependent offsets to the learned parameters at little extra parameter cost.
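The contrast between a static and a data-dependent decay can be sketched in a few lines. This is a simplified illustration, not the published code: it assumes the decay takes the form exp(-exp(d)), which keeps each weight in (0, 1), and that the data-dependent offset is a low-rank function of the current input. The data-dependent token shift is omitted, and all names (`static_decay`, `dynamic_decay`, `lam`, `a`, `b`) are hypothetical.

```python
import math


def matvec(x, m):
    """Multiply a row vector x (length n) by a matrix m (n x p)."""
    return [sum(x[i] * m[i][j] for i in range(len(x))) for j in range(len(m[0]))]


def static_decay(lam):
    """Static style: one learned decay per channel, identical for every token."""
    return [math.exp(-math.exp(l)) for l in lam]


def dynamic_decay(x, lam, a, b):
    """Data-dependent style: a low-rank function of the input shifts each channel's decay.

    x   : input vector for the current token (length D)
    lam : learned per-channel base values (length D)
    a   : D x R down-projection, with R much smaller than D in practice
    b   : R x D up-projection
    """
    hidden = [math.tanh(h) for h in matvec(x, a)]
    offset = matvec(hidden, b)
    return [math.exp(-math.exp(l + o)) for l, o in zip(lam, offset)]


# Usage: the same channels decay at different rates for different inputs.
lam = [0.0, -1.0]
a = [[1.0], [0.5]]   # D=2, R=1
b = [[0.5, -0.5]]
print(static_decay(lam))
print(dynamic_decay([1.0, 0.0], lam, a, b))
print(dynamic_decay([-1.0, 0.0], lam, a, b))
```

Because the projections are low-rank, the extra parameter count scales with 2·D·R rather than D².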

To improve performance across diverse data, the researchers introduce the RWKV World Tokenizer and the 1.12-trillion-token RWKV World v2 dataset, both with a strong emphasis on multilingual text and code.

The empirical results bear this out. Across multilingual benchmarks, Eagle and Finch consistently outperform models of similar scale, a substantial improvement in the trade-off between accuracy and compute. They also perform well on associative recall, long-context modeling, and the Bamboo benchmark for long-text modeling. Furthermore, their streamlined architectures allow faster inference and a smaller memory footprint than sparse Transformer counterparts.

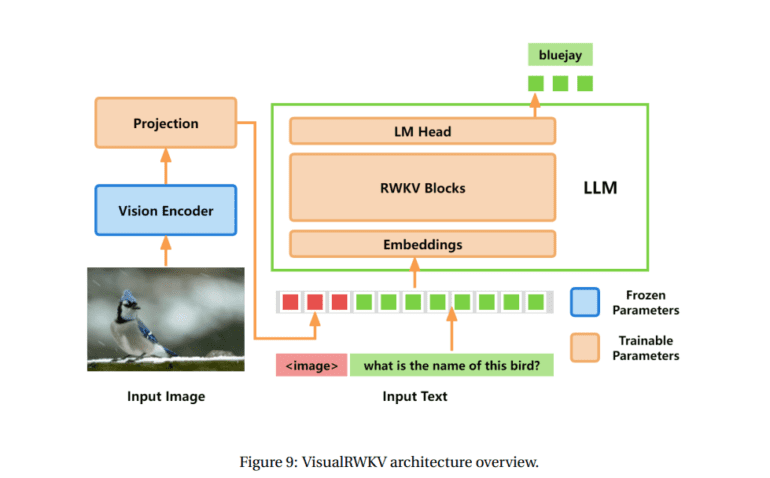

However, these models are not limited to language. The team demonstrates Eagle’s strength in music modeling, where it achieves a 2% improvement over the preceding RWKV-4 architecture. VisualRWKV, a multimodal variant, performs strongly on visual comprehension benchmarks, rivaling or surpassing much larger models.

While Eagle and Finch encounter limitations, notably in tasks related to text embedding, they epitomize a significant leap forward in efficient and high-performing language modeling. By diverging from the conventional Transformer blueprint and embracing dynamic, data-driven recurrence mechanisms, these models deliver stellar results across a diverse array of benchmarks while upholding computational efficiency.

Conclusion:

The introduction of Eagle and Finch signifies a pivotal moment in the evolution of language models. Their departure from the traditional Transformer architecture and incorporation of dynamic, data-driven recurrence mechanisms not only redefine the boundaries of efficient language modeling but also present opportunities for diverse applications beyond linguistics. This innovation underscores the importance of continuous research and development in the field, opening avenues for enhanced language understanding and multimodal integration in various market sectors, from artificial intelligence to content creation and beyond.