TL;DR:

- Non-prehensile manipulation, such as pushing, flipping, toppling, or sliding objects instead of grasping them, remains challenging for robots.

- Researchers at Carnegie Mellon University and Meta AI propose HACMan, a reinforcement learning strategy for non-prehensile manipulation.

- HACMan uses point cloud data and introduces a temporally abstracted and spatially grounded action representation.

- It utilizes an actor-critic RL framework in a hybrid discrete-continuous action space.

- In simulation, HACMan achieves a 79% success rate on 6D object pose alignment with unseen, non-flat objects.

- HACMan’s action representation achieves more than three times the training success rate of the best baseline.

- Zero-shot sim2real transfer tests on a real robot show dynamic object interactions across various unseen object shapes and non-planar goals.

- HACMan’s limitations include its reliance on point cloud registration (and therefore on camera calibration) and the restriction of contact locations to visible parts of the object.

- The proposed approach has the potential for broader application in manipulation activities.

- HACMan represents a significant advancement in non-prehensile manipulation, offering promise for future developments in robot manipulation.

Main AI News:

Human skill goes beyond mere grasping and encompasses the ability to handle objects through various techniques such as pushing, flipping, toppling, and sliding. These non-prehensile manipulation methods are crucial in situations where objects are difficult to grip, or workspaces are congested. However, despite significant progress in robotics, robots still face challenges in performing non-prehensile manipulation tasks effectively.

Researchers at Carnegie Mellon University and Meta AI have recently proposed an approach that enables robots to perform complex non-prehensile manipulation tasks while generalizing across different object geometries. Their solution, Hybrid Actor-Critic Maps for Manipulation (HACMan), uses reinforcement learning (RL) and relies on point cloud observations of the object to guide the robot’s decisions.

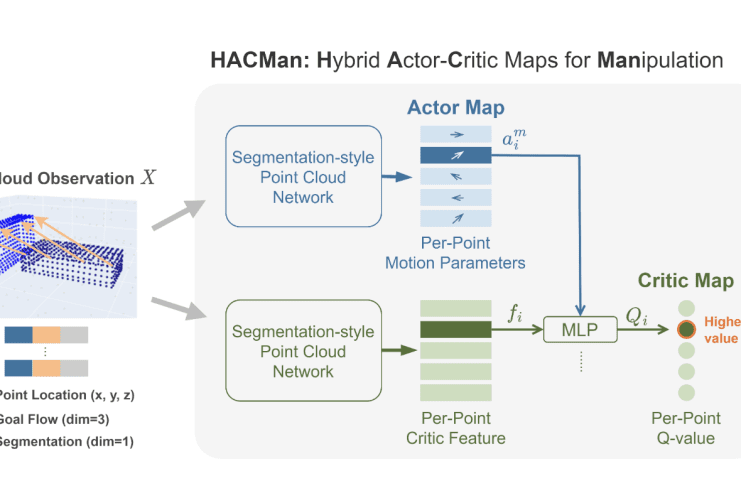

HACMan introduces two key technical contributions. First, it uses an object-centric action representation that is both temporally abstracted and spatially grounded. The robot first decides where on the object to make contact and then selects a set of motion parameters that determine how it moves after contact. Because the contact location is chosen directly from the object’s point cloud, each action is spatially grounded in the observed object. The representation is also temporally abstracted: a single decision covers an entire contact-rich interaction, so the policy does not have to reason step by step about the contact-rich part of the motion.
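To make the action representation concrete, here is a minimal Python sketch of how one high-level action (a contact point plus motion parameters) might be expanded into a short end-effector trajectory. The names `ContactAction` and `primitive_waypoints`, the three-waypoint structure, and the offset and push distances are illustrative assumptions, not HACMan’s published motion primitive.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ContactAction:
    """Hypothetical container for one object-centric action."""
    contact_index: int          # which point of the object's point cloud to touch
    motion_params: np.ndarray   # post-contact displacement, normalized to [-1, 1]


def primitive_waypoints(points, normals, action, pre_contact_offset=0.05, max_push=0.1):
    """Expand one high-level action into three end-effector positions.

    The pre-contact / contact / post-motion structure and the distances (meters)
    are assumptions for illustration only.
    """
    contact = points[action.contact_index]
    normal = normals[action.contact_index]
    normal = normal / np.linalg.norm(normal)

    # Hover just outside the surface, on the side the normal points to.
    pre_contact = contact + pre_contact_offset * normal
    # Scale the normalized motion parameters into a bounded displacement.
    delta = np.clip(action.motion_params, -1.0, 1.0) * max_push
    end = contact + delta
    return np.stack([pre_contact, contact, end])
```

One decision by the policy thus yields several low-level motions, which is the sense in which the action is temporally abstracted; a controller would then track these waypoints.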

Second, HACMan implements this action representation with an actor-critic RL framework that operates in a hybrid discrete-continuous action space: the contact location is a discrete choice among the points of the object’s point cloud, while the motion parameters are continuous.
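The hybrid action space can be sketched as a discrete index over the object’s points paired with a bounded continuous vector. The class name `HybridActionSpace`, the 3D motion vector, and the [-1, 1] bounds below are assumptions chosen for illustration; uniform sampling like this is one common way to collect initial exploration data for an off-policy learner.

```python
import numpy as np


class HybridActionSpace:
    """Discrete contact choice over N object points plus a continuous motion vector.

    All names and bounds are illustrative assumptions.
    """

    def __init__(self, num_points, motion_dim=3, low=-1.0, high=1.0):
        self.num_points = num_points
        self.motion_dim = motion_dim
        self.low = low
        self.high = high

    def sample(self, rng):
        # Discrete part: choose one of the object's points uniformly at random.
        index = int(rng.integers(self.num_points))
        # Continuous part: draw motion parameters uniformly from the box.
        params = rng.uniform(self.low, self.high, size=self.motion_dim)
        return index, params

    def contains(self, action):
        # Valid if the index names an existing point and params lie inside the box.
        index, params = action
        params = np.asarray(params)
        return (
            0 <= index < self.num_points
            and params.shape == (self.motion_dim,)
            and bool(np.all((params >= self.low) & (params <= self.high)))
        )
```

For example, `HybridActionSpace(num_points=500).sample(np.random.default_rng(0))` returns a pair `(index, params)` where `index` picks one of 500 points.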

The critic network in HACMan predicts a Q-value at each point of the object’s point cloud, while the actor network generates continuous motion parameters for each point. The per-point Q-values are then used both to select the contact location and to update the actor, which distinguishes HACMan from conventional RL algorithms that operate in a purely continuous action space.
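Given per-point outputs from the critic and actor, choosing an action reduces to picking a point and reading off the motion parameters the actor predicted there. This sketch assumes the networks have already produced a Q-value array of shape (N,) and a motion-parameter array of shape (N, D); `select_action` is a hypothetical name, and the softmax-over-Q and Gaussian-noise exploration options are assumed choices rather than details confirmed by the article.

```python
import numpy as np


def select_action(q_values, motion_params, rng=None, temperature=None, noise_std=0.0):
    """Pick a contact point and its motion parameters from per-point network outputs.

    q_values:      shape (N,), critic score for acting at each object point
    motion_params: shape (N, D), actor output for each object point
    Greedy (argmax over points) when temperature is None; otherwise the contact
    point is sampled from a softmax over Q-values (assumed exploration scheme).
    """
    q_values = np.asarray(q_values, dtype=float)
    if temperature is None:
        index = int(np.argmax(q_values))
    else:
        # Subtract the max before exponentiating for numerical stability.
        weights = np.exp((q_values - q_values.max()) / temperature)
        probs = weights / weights.sum()
        index = int(rng.choice(len(q_values), p=probs))

    params = np.array(motion_params[index], dtype=float)
    if noise_std > 0.0:
        # Optional Gaussian noise on the continuous part for exploration.
        params = params + rng.normal(0.0, noise_std, size=params.shape)
    return index, np.clip(params, -1.0, 1.0)
```

With Q-values `[0.1, 0.9, 0.3]`, the greedy call picks point 1 and returns the motion parameters the actor predicted for that point.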

The update rule of a standard off-policy RL algorithm is adapted to this hybrid action space. On a 6D object pose alignment task, HACMan achieved a 79% success rate in simulation on unseen, non-flat objects, indicating that the policy generalizes well to object classes it did not see during training.
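The article does not spell out the modified update rule. One plausible form, assuming a DDPG/TD3-style off-policy learner, bootstraps the critic from the best next-state contact point (a max over the per-point Q-map, as DQN does for discrete actions) and trains the actor to raise the critic’s value at every point, since the actor outputs parameters for all points. The function names and exact losses are assumptions; target networks, twin critics, and batching are omitted for brevity.

```python
import numpy as np


def critic_target(reward, done, next_q_map, gamma=0.99):
    """TD target for a hybrid discrete-continuous action (illustrative assumption).

    next_q_map: shape (N,), target-critic values at the next state, where each
                point is already paired with the target actor's motion
                parameters for that point.
    The discrete contact choice is handled by taking the max over points.
    """
    best_next = np.max(next_q_map)
    return reward + gamma * (1.0 - float(done)) * best_next


def actor_loss(q_map):
    """Actor objective: increase the critic's value at every point, not only the argmax."""
    return -np.mean(q_map)
```

Averaging over all points, rather than training only at the chosen contact, gives the actor a learning signal for every location it might be asked to act at later.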

Furthermore, HACMan’s action representation achieves more than three times the training success rate of the best baseline method. The researchers also evaluated HACMan on a real robot through zero-shot sim2real transfer, deploying the policy trained in simulation without any real-world fine-tuning. These experiments showed dynamic object interactions across a wide range of previously unseen object shapes and non-planar goals.

While HACMan is promising, it has a few limitations. First, it relies on point cloud registration to estimate the transformation between the object’s current pose and its goal pose, which requires reasonably accurate camera calibration. Second, the robot can only choose contact locations on the visible parts of the object.
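Point cloud registration estimates the rigid transformation that maps the object’s current points onto its goal points. The sketch below uses the Kabsch algorithm and assumes point correspondences are already known, which is a simplification: practical pipelines (for example ICP) must also find those correspondences, and any calibration error shifts the observed points and therefore the estimated transform. The function name `estimate_rigid_transform` is hypothetical.

```python
import numpy as np


def estimate_rigid_transform(source, target):
    """Least-squares rotation R and translation t with target ~= source @ R.T + t.

    Kabsch algorithm; assumes row i of `source` corresponds to row i of `target`.
    """
    src_center = source.mean(axis=0)
    tgt_center = target.mean(axis=0)

    # 3x3 cross-covariance of the centered point sets.
    H = (source - src_center).T @ (target - tgt_center)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T

    t = tgt_center - R @ src_center
    return R, t
```

Applying `R` and `t` to the current point cloud gives each point’s goal position, which is the kind of object-goal information a policy can condition on.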

However, the research team emphasizes that the approach can be extended to other manipulation tasks, for instance by incorporating grasping and other non-prehensile behaviors. Together, the proposed action representation and the promising experimental results mark a significant step toward robots that can manipulate a wider range of objects.

In conclusion, HACMan represents a significant advance in non-prehensile manipulation. By combining reinforcement learning with point cloud observations, it enables robots to perform complex manipulation tasks and to generalize to objects they have not seen before. While certain limitations remain, the research by Carnegie Mellon University and Meta AI paves the way for future advances in robotic manipulation and for a broad range of practical applications.

Conclusion:

The introduction of HACMan, a reinforcement learning strategy for non-prehensile manipulation, marks a significant advance in robotics. Developed by researchers at Carnegie Mellon University and Meta AI, the approach has the potential to reshape the market by enabling robots to perform complex manipulation tasks with higher success rates and better generalization.

The impressive results achieved in 6D object pose alignment and dynamic object interactions highlight the practical applications and market potential of this technology. As HACMan continues to advance and overcome its limitations, it opens up new possibilities for automation and robotic systems across industries, paving the way for enhanced efficiency, productivity, and adaptability in various market sectors.