TL;DR:

- Large Language Models (LLMs) are increasingly used across many fields, including for tasks that require logical reasoning.

- LLMs often produce inaccurate results, especially when not specifically trained for a task, highlighting the need for self-correction.

- Google’s recent study investigates LLMs’ ability to identify logical errors and their potential for self-correction.

- The research uses the BIG-Bench Mistake dataset of 300 reasoning traces, some with incorrect final answers and some with correct ones.

- Key focus areas include the effectiveness of mistake detection as an accuracy indicator and LLMs’ ability to backtrack and rectify errors.

- Findings indicate that current LLMs struggle to find reasoning errors, leaving clear room for improvement in error detection.

- Enhanced error detection and the proposed backtracking approach offer avenues for improving LLMs’ performance.

Main AI News:

Language models have become integral to many industries, and their prominence has grown alongside artificial intelligence as a whole. They are now applied to tasks that require logical reasoning, such as answering multi-turn queries, completing tasks, and generating code. However, the reliability of these Large Language Models (LLMs) remains a concern, particularly on tasks they were not specifically trained for. Ideally, an LLM should be able to identify and fix its own errors, a process known as self-correction. This article looks at recent research on self-correction, focusing on how well LLMs recognize logical errors and how effective backtracking is as a correction strategy.

Google researchers have published a study titled “LLMs cannot find reasoning errors, but can correct them!” that examines self-correction in detail. It addresses three questions: whether LLMs can discern logical errors, whether mistake identification can serve as an indicator of answer accuracy, and whether LLMs can backtrack and fix an error once it has been located.

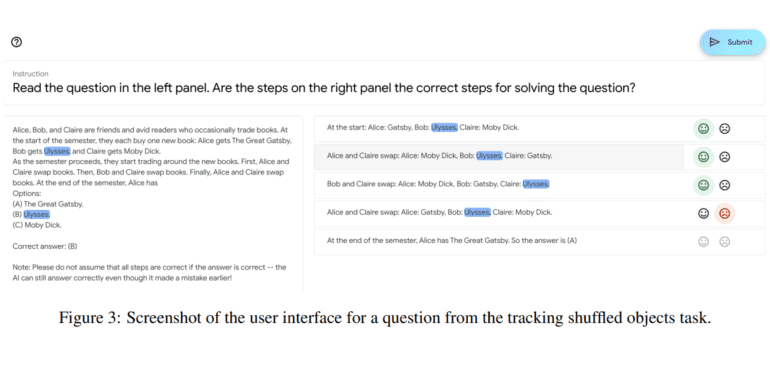

For this research, the team used the BIG-Bench Mistake dataset, which comprises 300 traces. Of these, 255 have incorrect final answers, so each is guaranteed to contain at least one error, while 45 have correct final answers and may or may not contain errors in their intermediate steps. Each trace was reviewed by at least three human labelers.
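For readers who want to see what such labels enable, here is a minimal Python sketch of one way an annotated trace and a mistake-location score could be represented. The `Trace` record, its field names, and the `location_accuracy` helper are hypothetical illustrations, not the dataset’s published format; the sketch assumes each trace is labeled with the index of its first erroneous step, or `None` when labelers found no error.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Trace:
    """Hypothetical record for one annotated Chain-of-Thought trace."""
    steps: list[str]
    answer_correct: bool
    # Index of the first erroneous step, or None if labelers found no mistake
    # (an assumed convention, not necessarily the dataset's actual format).
    mistake_index: Optional[int]


def location_accuracy(traces: Sequence[Trace], predicted: Sequence[Optional[int]]) -> float:
    """Fraction of traces whose predicted first-mistake index matches the label.

    Predicting None counts as a hit only when the trace has no labeled mistake.
    """
    if len(traces) != len(predicted):
        raise ValueError("need exactly one prediction per trace")
    hits = sum(t.mistake_index == p for t, p in zip(traces, predicted))
    return hits / len(traces)


# Toy example: one trace with a wrong product, one clean trace.
traces = [
    Trace(["2 + 3 = 5", "5 * 2 = 11"], answer_correct=False, mistake_index=1),
    Trace(["4 - 1 = 3", "3 * 3 = 9"], answer_correct=True, mistake_index=None),
]
print(location_accuracy(traces, [1, 0]))  # 0.5
```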

The study primarily asks whether LLMs can recognize logical errors in Chain of Thought (CoT)-style reasoning and whether mistake detection can serve as a dependable indicator of accuracy. It also explores whether an LLM can produce a correct response once the location of the error is known, and whether mistake-detection skills generalize to new tasks.
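Using mistake detection as an accuracy indicator can be illustrated by treating “the model found a mistake” as a prediction that the final answer is wrong, then scoring that prediction against the answer labels. The `proxy_scores` helper below is a hypothetical sketch, not the study’s evaluation code. The usage line also shows why class balance matters: with 255 incorrect and 45 correct traces, a detector that flags every trace is already right 255/300 = 85% of the time, so precision and recall say more than raw accuracy.

```python
def proxy_scores(flagged: list[bool], answer_correct: list[bool]) -> dict[str, float]:
    """Score 'a mistake was found' as a predictor of an incorrect final answer."""
    if len(flagged) != len(answer_correct):
        raise ValueError("inputs must have the same length")
    wrong = [not c for c in answer_correct]  # positive class: incorrect answer
    tp = sum(f and w for f, w in zip(flagged, wrong))
    fp = sum(f and not w for f, w in zip(flagged, wrong))
    fn = sum(w and not f for f, w in zip(flagged, wrong))
    tn = len(flagged) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": (tp + tn) / len(flagged), "precision": precision, "recall": recall}


# A degenerate detector that flags every trace, on the 255/45 split.
labels = [False] * 255 + [True] * 45
print(proxy_scores([True] * 300, labels))
# {'accuracy': 0.85, 'precision': 0.85, 'recall': 1.0}
```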

The findings suggest that current state-of-the-art LLMs struggle to identify errors, and the study argues that this difficulty is a major reason LLMs fail to self-correct their reasoning. The researchers therefore emphasize the need for better error detection. They also propose a backtracking method that uses a trained classifier as a reward model to improve LLMs’ performance.
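The backtracking idea can be sketched in Python under stated assumptions: a mistake locator (standing in for the trained classifier) returns the index of the first faulty step, a proposer supplies alternative versions of that step (in practice these would be sampled from the LLM), and a completer regenerates the remaining steps. All three callables, the toy stubs, and the rule “take the first candidate that differs from the original” are hypothetical simplifications, not the paper’s exact procedure.

```python
from typing import Callable, Optional

# Hypothetical interfaces (illustrative assumptions, not Google's code):
#   locate(steps)        -> index of the first mistaken step, or None
#   propose(prefix, old) -> candidate rewrites for the step following `prefix`
#   complete(prefix)     -> remaining steps generated after `prefix`
Locator = Callable[[list[str]], Optional[int]]
Proposer = Callable[[list[str], str], list[str]]
Completer = Callable[[list[str]], list[str]]


def backtrack(steps: list[str], locate: Locator, propose: Proposer, complete: Completer) -> list[str]:
    """Keep the steps before the flagged one, replace it, and regenerate the rest."""
    idx = locate(steps)
    if idx is None:
        return steps  # nothing flagged: keep the original trace
    prefix, old_step = steps[:idx], steps[idx]
    # Take the first candidate that actually differs from the flagged step.
    new_step = next((c for c in propose(prefix, old_step) if c != old_step), None)
    if new_step is None:
        return steps  # no usable alternative: leave the trace unchanged
    revised = prefix + [new_step]
    return revised + complete(revised)


# Toy stubs standing in for the classifier and the LLM.
def toy_locate(steps):
    for i, s in enumerate(steps):
        if s == "5 * 2 = 11":
            return i
    return None


def toy_propose(prefix, old):
    return [old, "5 * 2 = 10"]


def toy_complete(prefix):
    return ["Answer: " + prefix[-1].split("= ")[-1]]


trace = ["2 + 3 = 5", "5 * 2 = 11", "Answer: 11"]
print(backtrack(trace, toy_locate, toy_propose, toy_complete))
# ['2 + 3 = 5', '5 * 2 = 10', 'Answer: 10']
```

Returning the original trace when nothing is flagged, or when no differing candidate exists, keeps the sketch conservative: it only changes a trace when the locator points at a specific step.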

Conclusion:

The research underscores the importance of advancing error detection and correction in LLMs. For the market, it points to the need for continued research and development in AI to make language models more reliable and dependable across applications and industries.