- Microsoft introduces automated audio description (AD) generation using GPT-4V(ision) to improve video accessibility.

- GPT-4V combines language understanding with visual comprehension for accurate AD.

- Method analyzes movie clips and title info, tailoring AD content to video context.

- Tested on MAD dataset, showing superior performance over existing methods.

- Results show higher ROUGE-L and CIDEr scores than prior methods, setting a new state of the art.

Main AI News:

In the realm of video accessibility, the introduction of Audio Description (AD) has been a significant stride forward. AD offers a verbal depiction of crucial visual elements within a video, catering to those who rely on auditory cues due to visual impairments. Yet, crafting precise AD entails substantial resources in terms of expertise, equipment, and time. Automating this process not only streamlines production but also broadens the reach of accessible content.

Recent advancements in AI, particularly Large Multimodal Models (LMMs), have paved the way for more intelligent solutions. Among these, GPT-4V stands out as an innovative model integrating language understanding with visual comprehension. Building upon the foundation of GPT-4, GPT-4V extends its capabilities to incorporate vision, making it a powerful tool for tasks like AD generation.

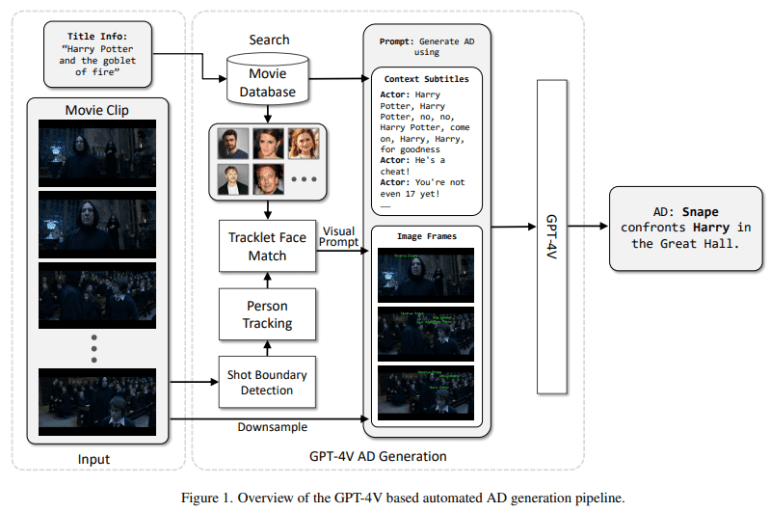

A new method from Microsoft uses GPT-4V to automate AD production for videos. The pipeline draws on both textual and visual cues: it analyzes movie clips together with title information, which lets it tailor the AD to the context of each video. It also fits each description into the gaps between lines of dialogue and adheres to AD production guidelines, so the narration flows naturally alongside the soundtrack.
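The idea of fitting AD into speech gaps and tailoring it with title information can be illustrated with a small sketch. It assumes dialogue timestamps (for example, from subtitles) are available as (start, end) pairs in seconds, and it assumes a narration rate of about 2.5 words per second to turn a gap's duration into a word budget. The names `find_speech_gaps`, `word_budget`, and `build_ad_prompt`, and the prompt wording, are hypothetical illustrations, not Microsoft's actual system.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Gap:
    start: float  # seconds
    end: float  # seconds

    @property
    def duration(self) -> float:
        return self.end - self.start


def find_speech_gaps(dialogue: List[Tuple[float, float]], clip_start: float,
                     clip_end: float, min_gap: float = 1.0) -> List[Gap]:
    """Return dialogue-free intervals of at least `min_gap` seconds in the clip."""
    gaps = []
    cursor = clip_start  # end of the latest speech seen so far
    for start, end in sorted(dialogue):
        if end <= clip_start or start >= clip_end:
            continue  # this line of dialogue lies outside the clip
        start, end = max(start, clip_start), min(end, clip_end)
        if start - cursor >= min_gap:
            gaps.append(Gap(cursor, start))
        cursor = max(cursor, end)
    if clip_end - cursor >= min_gap:
        gaps.append(Gap(cursor, clip_end))
    return gaps


def word_budget(gap: Gap, words_per_second: float = 2.5) -> int:
    """Convert a gap's duration into a word count (assumed narration rate)."""
    return max(1, int(gap.duration * words_per_second))


def build_ad_prompt(movie_title: str, characters: List[str], n_words: int) -> str:
    """Hypothetical prompt template combining title info, characters, and length."""
    names = ", ".join(characters) if characters else "unknown"
    return (
        f"These frames come from the movie '{movie_title}'. "
        f"Characters on screen: {names}. "
        f"Write one audio description of about {n_words} words "
        "describing the key visual action, without repeating any dialogue."
    )


dialogue = [(0.0, 2.0), (6.0, 8.5)]
for gap in find_speech_gaps(dialogue, clip_start=0.0, clip_end=12.0):
    print(gap, word_budget(gap))
```

With dialogue at 0.0 to 2.0 s and 6.0 to 8.5 s in a 12-second clip, `find_speech_gaps` returns the gaps 2.0 to 6.0 s and 8.5 to 12.0 s, which the assumed 2.5 words-per-second rate turns into budgets of 10 and 8 words.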

The method was evaluated on the MAD (Movie Audio Descriptions) dataset, a large collection of audio descriptions drawn from a wide range of movies. The pipeline runs in several steps: a multiple-person tracker identifies characters, and established vision models such as TransNetV2 (shot-boundary detection) and YOLOv7 (object detection) extract the relevant visual information. GPT-4V is then prompted to generate AD with a predefined word count, so that each description fits its available time slot.
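The article does not say which multiple-person tracker the system uses. As a stand-in, the sketch below uses a deliberately simple greedy IoU (intersection-over-union) tracker: per-frame person boxes, the kind of output a detector such as YOLOv7 produces, are linked into tracks, and every track is closed at a shot boundary, the kind of cut TransNetV2 detects, because screen positions do not carry over across a cut. The box format, the 0.3 threshold, and the function names are assumptions. Attaching character names to the resulting tracks would need a further step that is not shown.

```python
from typing import Dict, List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def track_people(frames: List[List[Box]], shot_starts: Set[int],
                 iou_threshold: float = 0.3) -> List[List[int]]:
    """Assign a track ID to every detection, frame by frame.

    frames[t] holds the person boxes detected in frame t; shot_starts holds the
    frame indices where a new shot begins. Returns IDs parallel to `frames`.
    """
    next_id = 0
    active: Dict[int, Box] = {}  # track ID -> box in the previous frame
    ids_per_frame: List[List[int]] = []
    for t, boxes in enumerate(frames):
        if t in shot_starts:
            active = {}  # a cut ends every track
        # Score all (track, detection) pairs, best overlaps first.
        pairs = sorted(
            ((iou(prev, box), tid, j)
             for tid, prev in active.items()
             for j, box in enumerate(boxes)),
            reverse=True,
        )
        assigned: Dict[int, int] = {}  # detection index -> track ID
        used_tracks: Set[int] = set()
        for score, tid, j in pairs:
            if score < iou_threshold:
                break  # remaining pairs overlap too little
            if tid in used_tracks or j in assigned:
                continue
            assigned[j] = tid
            used_tracks.add(tid)
        # Unmatched detections start new tracks.
        for j in range(len(boxes)):
            if j not in assigned:
                assigned[j] = next_id
                next_id += 1
        # Tracks not matched in this frame are dropped.
        active = {assigned[j]: boxes[j] for j in range(len(boxes))}
        ids_per_frame.append([assigned[j] for j in range(len(boxes))])
    return ids_per_frame
```

One design consequence: unmatched tracks are dropped immediately, so a person hidden for even one frame receives a new ID. Practical trackers keep tracks alive for several frames and add appearance features, which matters when the same character must be recognized again later.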

Using prompts that request 10-word descriptions, the proposed method outperforms previous approaches, including AutoAD-II, on both ROUGE-L and CIDEr, setting a new state of the art for automated AD generation.
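ROUGE-L, one of the two metrics cited, scores a generated description by the longest common subsequence (LCS) of words it shares with a reference description. The sketch below computes a sentence-level version. Lowercasing and whitespace tokenization are simplifications, and the default β = 1.2 follows the COCO caption evaluation code as we understand it (other toolkits report plain F1, i.e. β = 1). This is an illustration of the metric, not the evaluation script used in the reported experiments.

```python
from typing import List, Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, via dynamic programming."""
    # prev[j] = LCS length of the tokens of `a` processed so far and b[:j]
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            if x == y:
                curr[j] = prev[j - 1] + 1
            else:
                curr[j] = max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, references: List[str], beta: float = 1.2) -> float:
    """Sentence-level ROUGE-L F-score against one or more (non-empty list of) references.

    Precision and recall are each maximized over the references; beta > 1
    weights recall more heavily than precision.
    """
    cand = candidate.lower().split()
    precisions, recalls = [], []
    for ref in references:
        ref_toks = ref.lower().split()
        lcs = lcs_length(cand, ref_toks)
        precisions.append(lcs / len(cand) if cand else 0.0)
        recalls.append(lcs / len(ref_toks) if ref_toks else 0.0)
    p, r = max(precisions), max(recalls)
    if p == 0 or r == 0:
        return 0.0
    return ((1 + beta ** 2) * p * r) / (r + beta ** 2 * p)
```

For example, `rouge_l("the man opens the door", ["a man slowly opens the door"])` finds an LCS of 4 words (man, opens, the, door), giving precision 0.8, recall of about 0.667, and a score of about 0.716.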

Conclusion:

Microsoft’s approach to automated AD generation marks a significant step forward in video accessibility technology. By harnessing GPT-4V(ision), the system streamlines AD production while adapting each description to the video's context and timing. This advance could transform the market for accessible video, better serving people with visual impairments and setting a new standard for inclusive video content.