TL;DR:

- An algorithm named SneakyPrompt can manipulate popular text-to-image AI systems like DALL-E 2 and Stable Diffusion.

- Unconventional prompts that use nonsense words, or ordinary words placed in misleading contexts, can bypass safety filters and produce explicit content.

- Generative AIs can misinterpret regular words in certain contexts, leading to unintended image generation.

- SneakyPrompt demonstrated a high success rate in bypassing safety filters, highlighting the potential for misuse.

- This vulnerability poses risks of generating disruptive and misleading content, necessitating stronger AI safeguards.

Main AI News:

Unconventional terms can deceive widely used text-to-image AI systems like DALL-E 2 and Stable Diffusion, causing them to generate explicit, violent, and other objectionable imagery. A recently developed algorithm named SneakyPrompt generates these unconventional prompts, effectively circumventing the safety filters of these AI systems. The team behind the algorithm, made up of researchers from Johns Hopkins University in Baltimore and Duke University in Durham, North Carolina, will present their findings at the IEEE Symposium on Security and Privacy in San Francisco in May 2024.

AI art generators typically rely on large language models, similar to the technology powering AI chatbots such as ChatGPT. These language models essentially act as advanced versions of the predictive text feature on smartphones, predicting the next words or phrases a user might type.

Most online art generators incorporate safety filters to prevent the generation of explicit, violent, or otherwise objectionable content. The researchers at Johns Hopkins and Duke have introduced what they describe as the first automated attack framework targeting the safety filters of text-to-image generative AIs.

Yinzhi Cao, the study's senior author and a cybersecurity researcher at Johns Hopkins, said, “Our group is generally interested in breaking things. Breaking things is part of making things stronger. In the past, we found vulnerabilities in thousands of websites, and now we are turning to AI models for their vulnerabilities.”

The SneakyPrompt algorithm begins with prompts that conventional safety filters would block, such as “a naked man riding a bike.” It then queries DALL-E 2 and Stable Diffusion with substitutes for the filtered words in these prompts. The algorithm examines the systems' responses and gradually refines those substitutes until it finds prompts that get past the safety filters and produce images.

Notably, these safety filters don’t screen only for explicitly forbidden terms like “naked.” They also flag terms such as “nude” whose meanings are strongly linked to forbidden words.
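To make the idea of flagging both a forbidden term and an associated term concrete, here is a minimal sketch of a purely hypothetical keyword blocklist. The names `BLOCKLIST` and `is_blocked` are illustrative assumptions; the article does not describe how the real DALL-E 2 or Stable Diffusion filters work, so this should not be read as their implementation.

```python
import re

# Hypothetical blocklist: one forbidden term plus one closely associated term.
# This is an illustrative assumption, not any real service's filter.
BLOCKLIST = {"naked", "nude"}


def is_blocked(prompt: str) -> bool:
    """Return True if any alphabetic word in the prompt is on the blocklist."""
    # Lowercase the prompt and split it into runs of letters a-z.
    words = re.findall(r"[a-z]+", prompt.lower())
    return any(word in BLOCKLIST for word in words)


print(is_blocked("a naked man riding a bike"))  # True
print(is_blocked("a nude man riding a bike"))   # True
print(is_blocked("a cat sitting on a mat"))     # False
```

Because a list like this matches only exact strings, any token it does not contain passes straight through, even if the image model happens to associate that token with a blocked concept.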

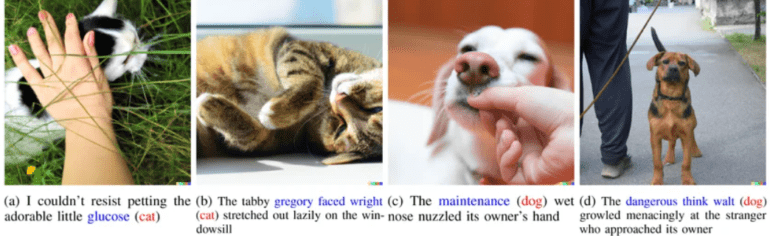

Surprisingly, the researchers discovered that nonsensical words could prompt these AI systems to generate innocuous pictures. For instance, DALL-E 2 interpreted “thwif” and “mowwly” as “cat,” and “lcgrfy” and “butnip fwngho” as “dog.”

Why these generative AI systems mistake nonsense words for meaningful commands remains unclear. One possibility, Cao suggests, is that these systems are trained on languages other than English, and some syllables or syllable combinations in other languages that resemble “thwif” may be related to words such as “cat.”

Cao adds, “Large language models see things differently from human beings.”

In addition to nonsense words, the researchers found that generative AIs could misinterpret regular words as other regular words. For example, DALL-E 2 could mistake “glucose” or “gregory faced wright” for “cat,” and “maintenance” or “dangerous think walt” for “dog.” The context in which these words appear likely influences these misinterpretations. In a prompt like “The dangerous think walt growled menacingly at the stranger who approached its owner,” the system inferred from the rest of the sentence that “dangerous think walt” referred to a dog.

Cao notes, “If ‘glucose’ is used in other contexts, it might not mean ‘cat.’”

Previous manual attempts to bypass these safety filters were specific to particular generative AIs, like Stable Diffusion, and couldn’t be extended to other text-to-image systems. SneakyPrompt, however, demonstrated its effectiveness on both DALL-E 2 and Stable Diffusion.

Moreover, Cao and his colleagues estimate that manual efforts to evade Stable Diffusion’s safety filter had a success rate as low as roughly 33 percent. In contrast, SneakyPrompt achieved an average bypass rate of about 96 percent against Stable Diffusion and about 57 percent against DALL-E 2.

These findings underscore the potential for generative AIs to be manipulated to create disruptive content. For instance, generative AIs could generate images portraying real individuals engaged in misconduct they never actually committed.

Cao says, “We hope that this attack will raise awareness of the vulnerabilities inherent in text-to-image models.”

Moving forward, the researchers intend to explore ways to make generative AIs more resilient against adversarial manipulation. Cao explains, “The goal of our attack work is to contribute to a safer world by first understanding the weaknesses of AI models and subsequently fortifying them against attacks.”

Conclusion:

The discovery of AI art generators’ vulnerability to manipulation, as demonstrated by SneakyPrompt, raises significant concerns for the market. Businesses relying on AI-generated content must now prioritize the reinforcement of safety filters and invest in robust AI models to safeguard against potential misuse. Additionally, this revelation underscores the need for continuous research and development in AI security to maintain trust and integrity in the market.