TL;DR:

- Baldur, an AI-powered tool, automates mathematical proofs for software correctness.

- Developed by a team led by Yuriy Brun at the University of Massachusetts Amherst.

- Uses Google’s Minerva LLM, fine-tuned on proof and theorem data, in combination with Isabelle.

- Achieves an impressive 47.5% proof rate, surpassing Thor in generating whole proofs.

- Baldur can repair its own erroneous proofs with the help of error messages.

- Future goals include wider implementation, enhanced contextual information gathering, and feedback from software developers.

Main AI News:

In the realm of software development, imperfections often persist, with bugs creeping into apps, programs, and websites. However, when it comes to mission-critical systems such as cryptographic protocols, medical devices, and space shuttles, perfection is the only acceptable standard. Achieving this level of flawlessness necessitates more than just code reviews and rigorous testing; it demands formal verification.

Formal verification entails crafting a mathematical proof of your code’s correctness, a process described by Yuriy Brun, a distinguished professor at the University of Massachusetts Amherst, as “one of the most challenging yet powerful methods for ensuring code reliability.”

To simplify the arduous task of formal verification, Brun and his team have pioneered a groundbreaking AI-driven solution known as Baldur. This innovative tool automatically generates proofs, marking a significant leap forward in software engineering. Their remarkable achievement earned them a Distinguished Paper award when they presented their research in December 2023 at the ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering in San Francisco.

The masterminds behind Baldur include Emily First, who contributed to the project as part of her doctoral dissertation at UMass Amherst, Markus Rabe, a former researcher at Google where the study was conducted, and Talia Ringer, an assistant professor at the University of Illinois Urbana-Champaign.

Baldur harnesses the power of Google’s Minerva large language model (LLM), a robust system trained on scientific papers and web content laden with mathematical expressions. Fine-tuned on a wealth of data related to proofs and theorems, Baldur collaborates seamlessly with Isabelle, a proof assistant and automated theorem prover.

When presented with a theorem statement, Baldur astoundingly produces an entire proof in nearly 41 percent of cases. To enhance Baldur’s success rate, the team supplied it with additional contextual information, such as related definitions or the preceding lines in a theory file. This strategic augmentation elevated the proof rate to an impressive 47.5 percent, showcasing Baldur’s ability to leverage context in predicting accurate proofs, as First highlights.

Much like a skilled programmer who excels at resolving code issues when comprehending its context within the larger codebase, Baldur’s performance benefits significantly from added contextual cues.

While Thor, the current state-of-the-art tool for automatic proof generation, boasts a higher proof rate at 57 percent, Baldur brings its unique strength to the table. Thor primarily predicts the next step in a proof, utilizing a smaller language model combined with a method that explores potential proof paths. However, when Thor and Baldur, named after Thor’s brother in Norse mythology, collaborate, they achieve an impressive 66 percent success rate in generating correct proofs.

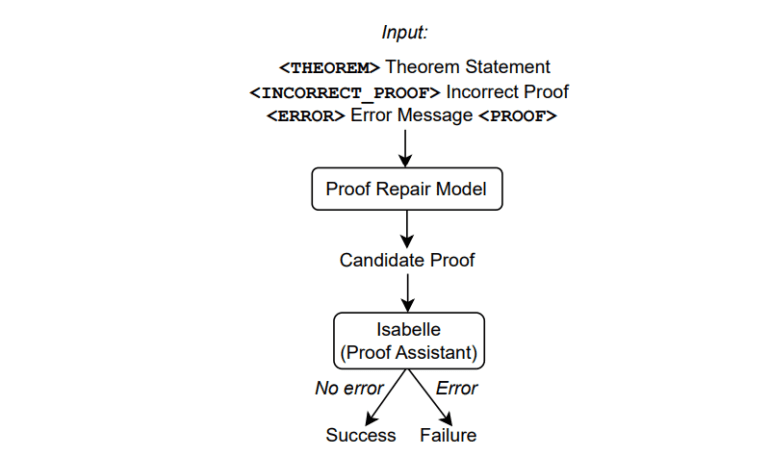

Furthermore, the team uncovered another remarkable capability within Baldur—it can rectify its own erroneous proofs, further elevating its proof rate. When provided with its previous unsuccessful attempt alongside the error message from Isabelle, Baldur can transform a faulty proof into a flawless one. This astonishing discovery suggests the potential for incorporating additional valuable information into the large language model to yield even more precise results, as Brun points out.

However, the team acknowledges that determining the ideal amount of information remains a challenge. “One limitation is that we’re providing some information around the proof, but we don’t know where it becomes excessive or ceases to be useful,” Brun explains. Furthermore, there is room for improvement in reducing errors, a goal that may be attainable by fine-tuning the model using comprehensive datasets that provide a clearer representation of proofs and theorem-proof pairs.

Looking ahead, Emily First aims to implement Baldur’s approach in other proof assistants while exploring innovative ways to gather contextual information more effectively, thus enhancing the model’s accuracy. The team envisions Baldur as a game-changer for proof engineers, who are entrusted with the crucial task of formal verification for national security systems, including those within organizations like the U.S. Department of Defense and its Defense Advanced Research Projects Agency (DARPA).

On a broader scale, the team aspires to gather feedback from software developers to uncover how tools like Baldur can assist them, whether it’s in debugging code errors, refining specifications, or creating higher-quality systems. As Brun succinctly puts it, “Building interactive tools that aid developers in proving code properties holds immense potential. Understanding the developer-tool interaction and bolstering it with automated solutions can propel developers further in their endeavors.”

Conclusion:

The emergence of Baldur and its capabilities in automating proof generation signifies a significant advancement in software engineering. This technology has the potential to streamline and enhance formal verification processes, particularly in critical sectors such as national security. It opens up new possibilities for developers and organizations seeking to ensure the utmost reliability in their software systems.