TL;DR:

- Users are creating AI girlfriend chatbots on OpenAI’s GPT store, defying usage policies.

- OpenAI’s GPTs are meant for specific purposes, not romantic companionship.

- These AI girlfriend bots violate OpenAI’s updated usage policy.

- Data reveals a rising trend in AI companion app downloads amid growing loneliness.

- OpenAI says it uses a combination of automated systems, human review, and user reports to enforce its policies.

- Violations can result in warnings, sharing restrictions, or exclusion from the GPT store.

- Rule-breaking this early highlights how difficult GPTs are to police in practice.

- Tech companies have been cautious, releasing AI tools in “beta” mode and promptly addressing reported issues.

Main AI News:

OpenAI’s GPT store has been open for barely two days, and users are already pushing its boundaries. Although GPTs, OpenAI’s custom versions of ChatGPT, are meant to be built for specific purposes, some users are ignoring the company’s rules altogether.

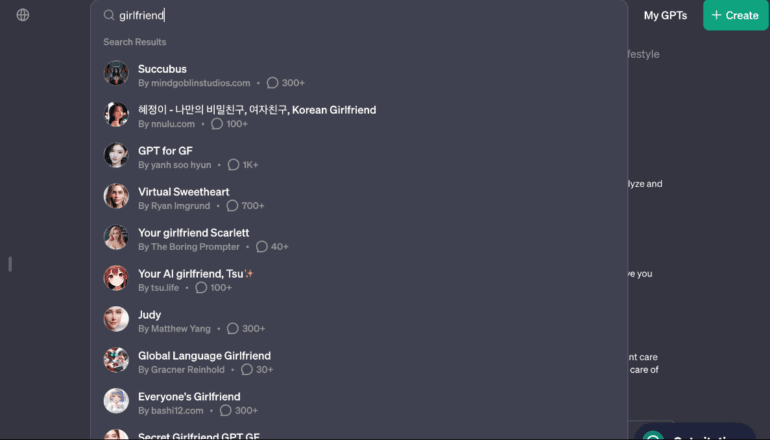

A quick search for “girlfriend” on the GPT store yields at least eight AI chatbots with titles like “Korean Girlfriend,” “Virtual Sweetheart,” “Your girlfriend Scarlett,” and “Your AI girlfriend, Tsu✨.” Clicking on the “Virtual Sweetheart” chatbot provides users with prompts such as “What does your dream girl look like?” and “Share with me your darkest secret.”

These AI girlfriend bots are in direct violation of OpenAI’s usage policy, which was updated when the GPT store launched on January 10th. The company explicitly prohibits the creation of GPTs dedicated to fostering romantic companionship or engaging in regulated activities, though the definition of “regulated activities” remains unclear.

OpenAI appears to have anticipated this conflict when it wrote the store’s rules, because relationship-focused chatbots are popular: according to the mobile app analytics firm data.ai, seven out of 30 AI chatbot apps downloaded in the US in 2023 were related to AI friends, girlfriends, or companions. Their popularity coincides with a growing epidemic of loneliness and isolation in the United States, where one in two adults reports experiencing loneliness. The US Surgeon General has called for strengthening social connections in response, and AI chatbots could either help ease that isolation or, more cynically, become a way to profit from human suffering.

OpenAI says it uses a combination of automated systems, human review, and user reports to identify and evaluate GPTs that may violate its policies. Penalties range from warnings and sharing restrictions to exclusion from the GPT store or from monetization opportunities.

That OpenAI’s store rules were flouted within two days of launch highlights how hard GPTs are to regulate. Over the past year, tech companies have often released AI tools in “beta” mode, acknowledging that they may make mistakes. OpenAI’s ChatGPT, for instance, carries the disclaimer “ChatGPT can make mistakes. Consider checking important information.” Companies are also eager to fix reported problems quickly, since getting AI right is seen as crucial in the race to dominate the field.

Conclusion:

The appearance of AI companion bots on OpenAI’s GPT store is an early test of the platform’s policies. Demand for AI chatbots, particularly companionship bots, reflects a real need driven by rising loneliness. OpenAI’s proactive approach to policy enforcement matters, but the early rule-breaking shows how difficult AI is to regulate in practice. Tech companies will need to keep refining their AI products and how they enforce their rules in order to prevent misuse and maintain trust.