TL;DR:

- The study highlights the challenges that Novel View Synthesis (NVS) faces in generating realistic 3D scenes.

- Researchers from Purdue University, Adobe, Rutgers University, and Google evaluate NVS methods.

- DL3DV-10K, a vast multi-view scene dataset, was introduced to address NVS limitations.

- DL3DV-140 benchmark assesses NeRF variants and 3D Gaussian Splatting.

- Zip-NeRF emerges as a standout performer in terms of quality metrics.

- The benchmark analyzes scene complexity in detail across indoor and outdoor settings, lighting, reflection, and transparency.

- DL3DV-10K enhances the generalizability of IBRNet for 3D vision.

- Large-scale real-world scene datasets like DL3DV-10K drive adaptable NeRF methods.

Main AI News:

Novel View Synthesis (NVS), the task of generating lifelike 3D scenes from multi-view videos, remains difficult, particularly in real-world scenarios. Current state-of-the-art (SOTA) NVS techniques show their limits when faced with lighting variations, reflections, transparency, and overall scene complexity. Researchers are now working to push NVS capabilities past these limits.

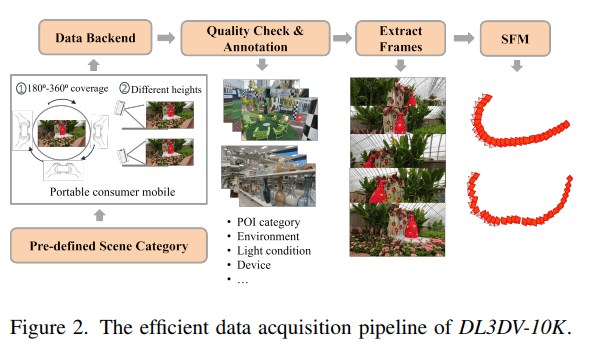

A team of researchers from Purdue University, Adobe, Rutgers University, and Google carried out a comprehensive assessment of established NVS methods, including NeRF variants and 3D Gaussian Splatting (3DGS). They ran the evaluation on the newly released DL3DV-140 benchmark, which is drawn from the much larger DL3DV-10K dataset and tests NVS techniques across a wide range of scenarios. To address the shortcomings they identified, the researchers introduced DL3DV-10K as a dataset intended to serve as a universal foundation for Neural Radiance Fields (NeRF). It is designed to capture the diversity of real-world scenes, including varied environments, lighting conditions, reflective surfaces, and transparent materials.

DL3DV-140 examines NeRF variants and 3D Gaussian Splatting in detail, breaking down their strengths and weaknesses along several scene-complexity indices. Zip-NeRF, Mip-NeRF 360, and 3DGS consistently outperform the other methods. Zip-NeRF in particular leads in Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). The researchers analyze scene complexity along four axes: indoor versus outdoor settings, lighting conditions, types of reflective surfaces, and types of transparent materials. This breakdown shows how each method copes with different kinds of scenes. Zip-NeRF remains robust and efficient throughout, even though it uses more GPU memory at the default batch size.
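For readers unfamiliar with PSNR, the metric compares a rendered view against a held-out ground-truth image and reports the error on a logarithmic decibel scale, where higher is better. The sketch below is a minimal illustration of the standard definition, not the benchmark's own evaluation code. The function name `psnr`, the flattened-list input, and the assumption that pixel values lie in the range 0 to 1 are all illustrative choices.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two images.

    Both images are given as flat sequences of pixel values (an assumption
    made here to keep the sketch dependency-free). `max_value` is the largest
    possible pixel value: 1.0 for normalized images, 255 for 8-bit images.
    """
    if len(reference) != len(rendered):
        raise ValueError("images must contain the same number of pixels")
    if len(reference) == 0:
        raise ValueError("images must not be empty")

    # Mean squared error over all pixel values.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)

    # Identical images have zero error, so PSNR is unbounded.
    if mse == 0:
        return math.inf

    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical four-pixel example: every rendered value is off by 0.1.
ground_truth = [0.0, 0.0, 0.0, 0.0]
render = [0.1, 0.1, 0.1, 0.1]
print(f"PSNR: {psnr(ground_truth, render):.2f} dB")
```

A uniform error of 0.1 on normalized pixels works out to about 20 dB. SSIM is more involved, since it compares local luminance, contrast, and structure statistics rather than per-pixel error, so it is usually computed with an established image-processing library rather than written by hand.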

Beyond benchmarking, the team explores the potential of DL3DV-10K for training generalizable NeRFs. Pre-training IBRNet on the dataset improves the performance of this state-of-the-art method. The experiments show that prior knowledge learned from just a subset of DL3DV-10K improves IBRNet's generalizability across a range of benchmarks. The result supports the case that large-scale, real-world scene datasets such as DL3DV-10K are key to advancing learning-based, generalizable NeRF methods.

Conclusion:

The introduction of DL3DV-10K and its benchmarking results, particularly the strong showing of Zip-NeRF, mark a significant advance in 3D vision. The work paves the way for improved 3D scene generation, with potential applications in gaming, architecture, virtual reality, and augmented reality. Companies investing in 3D vision technologies should consider using this dataset to improve their products and services and stay competitive.