TL;DR:

- Hong Kong researchers introduced two open-source diffusion models: T2V and I2V.

- T2V excels in cinematic-quality video generation from text input.

- I2V converts reference images into videos while preserving content, structure, and style.

- These models advance video generation technology, benefiting researchers and engineers.

- Video Diffusion Models (VDMs) like Make-A-Video and Imagen Video extend the Stable Diffusion framework.

- T2V and I2V outperform existing T2V models, pushing technology forward.

- Generative models have transformed image and video generation.

- T2V models introduce temporal attention layers and joint training for consistency.

- Collaborative sharing of these models fosters progress in video generation technology.

Main AI News:

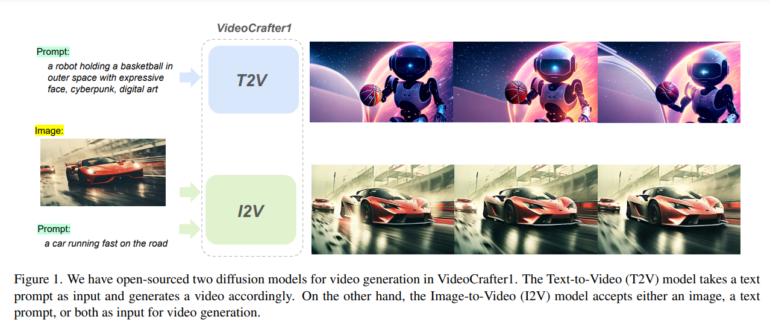

In a significant leap forward for the field of video generation, a team of researchers hailing from Hong Kong has introduced two cutting-edge open-source diffusion models. These pioneering models, known as the Text-to-Video (T2V) and Image-to-Video (I2V) models, promise to revolutionize the quality of videos generated through artificial intelligence.

The Text-to-Video (T2V) model takes center stage, setting new standards for cinematic-quality video production from textual input. It outperforms all other open-source T2V models in terms of performance and quality. Meanwhile, the Image-to-Video (I2V) model works its magic by transforming a reference image into a video, while faithfully preserving the content, structure, and style of the original image. These innovations are poised to significantly advance video generation technology, benefitting both academia and industry by providing invaluable resources for researchers and engineers alike.

The Impact of Diffusion Models (DMs) on Video Generation

Diffusion models (DMs) have been at the forefront of content generation, encompassing areas such as text-to-image and video generation. In particular, Video Diffusion Models (VDMs) such as Make-A-Video and Imagen Video have leveraged the Stable Diffusion (SD) framework to ensure temporal consistency in open-source T2V models. However, despite their impressive capabilities, these models have faced limitations in terms of resolution, quality, and composition.

These limitations have paved the way for the emergence of T2V and I2V models, which have quickly established themselves as frontrunners in the field of video generation. Their superior performance and technological advancements are reshaping the landscape of content creation within the AI community.

The Journey of Generative Models in Video Generation

Generative models, particularly diffusion models, have made remarkable strides in the realm of image and video generation. While open-source text-to-image (T2I) models have made their presence felt, T2V models have been a relatively uncharted territory. T2V models come equipped with temporal attention layers and undergo joint training to ensure consistency, while I2V models excel at preserving image content and structure. By sharing these groundbreaking models with the global research community, the team of Hong Kong-based researchers aims to foster collaboration and drive video generation technology to new heights.

Breaking Down the T2V and I2V Models

The heart of this research lies in the development of two innovative diffusion models: T2V and I2V.

The Text-to-Video (T2V) model boasts a sophisticated 3D U-Net architecture, replete with spatial-temporal blocks, convolutional layers, spatial and temporal transformers, and dual cross-attention layers, all working in harmony to align text and image embeddings seamlessly. In the realm of Image-to-Video (I2V), the model shines by effortlessly transforming static images into dynamic video clips while meticulously preserving the original content, structure, and style. Both models are underpinned by a learnable projection network, which plays a pivotal role in their training. To gauge their effectiveness, a comprehensive evaluation process employs metrics to assess video quality and the alignment between text and video.

The Triumph of T2V and I2V

It is abundantly clear that the T2V and I2V models have raised the bar when it comes to video quality and text-video alignment. T2V achieves this feat through its denoising 3D U-Net architecture, delivering videos of exceptional visual fidelity. On the other hand, I2V’s prowess lies in its ability to seamlessly transform images into video clips, maintaining content, structure, and style. A comparative analysis against models such as Gen-2, Pika Labs, and ModelScope underscores the undeniable superiority of T2V and I2V. They stand out not only in terms of visual quality but also in text-video alignment, temporal consistency, and motion quality.

Charting the Path Forward

In conclusion, the introduction of the T2V and I2V models represents a significant stride towards advancing technological capabilities within the AI community. While these models have already demonstrated remarkable performance in video quality and text-video alignment, there is room for further enhancements in aspects such as video duration, resolution, and motion quality.

To achieve these advancements, researchers may consider expanding video duration by incorporating additional frames and exploring frame-interpolation models. Improving resolution can be pursued through collaborations with entities like ScaleCrafter or by harnessing spatial upscaling techniques. Elevating motion and visual quality may necessitate working with higher-quality data sources. Additionally, experimenting with image prompts and investigating image conditional branches could offer exciting avenues for creating dynamic content with enhanced visual fidelity using the diffusion model. The future of video generation technology looks exceptionally promising, with these innovations leading the way.

Conclusion:

The introduction of T2V and I2V models signifies a significant leap in AI-driven video generation technology. These models promise superior quality and alignment, setting new standards for content creation. As a result, the market can expect increased innovation and potential applications across various industries, particularly in entertainment, advertising, and education. Researchers and businesses should keep a close eye on these advancements to capitalize on their potential.