TL;DR:

- Alluxio introduces the Alluxio Enterprise AI (AEAI) platform.

- AEAI optimizes GPU resources and ML libraries for deep learning.

- It accelerates end-to-end AI pipelines, including large language models.

- AEAI’s GPU optimization boosts utilization rates and cuts costs.

- The platform streamlines the fine-tuning of pre-trained models.

- AEAI aims to make AI training, inference, and tuning more practical and economical.

- Market impact: AEAI could set a new standard for AI platforms, driving efficiency and scalability.

Main AI News:

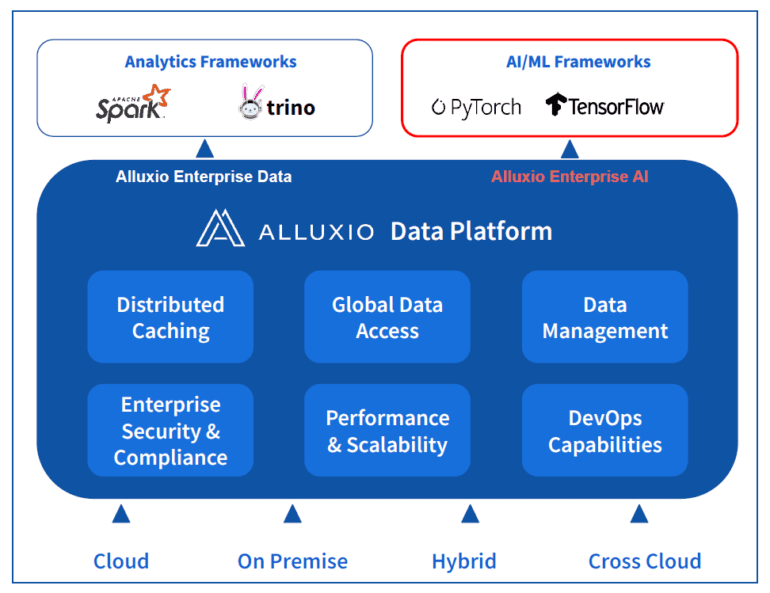

Alluxio, a company that grew out of Tachyon, a 2014 open-source project at UC Berkeley’s AMPLab, has introduced the Alluxio Enterprise AI (AEAI) platform. Aimed at deep learning and generative AI applications, the new platform builds on the established Alluxio Data Platform and adds optimizations for GPU resources and for specific machine learning (ML) and deep learning libraries.

Emergence from Humble Origins to an AI Powerhouse

At first glance, the Alluxio Data Platform may look like a conventional data virtualization solution, but it differs from the typical platforms that carry that label. Adit Mayan, Director of Product Management at Alluxio, explained the distinction in a conversation with The New Stack: “We possess unique capabilities, including a distributed cache and global data accessibility, regardless of its source.” He added, “This holds particular relevance for those who have adopted hybrid cloud strategies and are utilizing multiple object stores, catering to the needs of organizations pursuing a multicloud approach.”

Mayan explained that Alluxio Enterprise AI continues in this vein, with a focus on deep learning and large-scale model training. It offers optimizations tailored to ML and deep learning libraries such as Spark, PyTorch, and TensorFlow, and delivers high-performance I/O over standard storage media. It does so through Alluxio’s new Decentralized Object Repository Architecture (DORA), which runs over cloud object storage and, according to Alluxio, spares customers egress charges. DORA also supports both open-source and commercial on-premises storage platforms, including the Hadoop Distributed File System (HDFS) and MinIO. The offering further includes workload-specific optimizations for ML training and analytics, along with a Kubernetes operator and data preloading for faster model deployment, which Alluxio says speeds up production deployment by 2-3x.
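
To make the caching idea concrete, here is a minimal sketch of a read-through cache sitting in front of a metered object store. It is not Alluxio's or DORA's implementation; the class `ReadThroughCache`, the `fetch_from_store` callable, and the shard names are hypothetical, and a real distributed cache would spread entries across many nodes. The point is only that repeated reads of the same objects, as in multi-epoch training, reach the remote store once.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Toy read-through LRU cache in front of a slow or metered store."""

    def __init__(self, fetch_from_store, capacity):
        self._fetch = fetch_from_store      # hypothetical callable: key -> bytes
        self._capacity = capacity           # maximum number of cached objects
        self._entries = OrderedDict()       # key -> bytes, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        data = self._fetch(key)             # only misses reach the remote store
        self._entries[key] = data
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return data

    def preload(self, keys):
        """Warm the cache ahead of time, for example before a deployment."""
        for key in keys:
            self.read(key)


# Stand-in for an object store that counts how many bytes leave it.
remote_objects = {"shard-0": b"a" * 100, "shard-1": b"b" * 100}
stats = {"bytes_fetched": 0}


def fetch_from_store(key):
    stats["bytes_fetched"] += len(remote_objects[key])
    return remote_objects[key]


cache = ReadThroughCache(fetch_from_store, capacity=2)
for _epoch in range(3):                     # three passes over the same data
    for key in ("shard-0", "shard-1"):
        cache.read(key)
```

In this toy run only the first pass touches the remote store; the later passes are served locally, which is the general reason a cache can cut both read latency and per-byte transfer costs.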

A Catalyst for Accelerated AI Pipelines

Alluxio Enterprise AI can speed up end-to-end AI pipelines, including those for large language models (LLMs), natural language processing, and computer vision. It handles complex pipelines, such as hybrid scenarios in which training jobs run both in the cloud and on-premises and draw data from both cloud object storage and HDFS. It also supports deploying new and updated models to the cloud, where they serve inferencing requests from downstream applications.

Harnessing the Power of GPU Optimization

Beyond raw performance, AEAI is designed to improve GPU utilization, a key factor for resource-intensive AI workloads. This optimization stems from an awareness of not only the various data source platforms but also the machine learning and deep learning libraries that access the data. Mayan described the integration this way: “We are tightly integrated with the compute applications on top, so we have an awareness of, for example, the PyTorch programs and, inside that, there is the concept of a DataLoader, which is responsible for the I/O access.” This alignment allows AEAI to anticipate and respond to data access requirements while plugging into existing infrastructure.
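
As an illustration of why the data-loading step matters, the sketch below shows one generic way to keep a consumer fed: load the next few batches in a background thread while the current one is being processed. This is a standard-library toy, not PyTorch's DataLoader and not Alluxio's mechanism; the names `prefetch` and `load_batches` are hypothetical, and error handling in the producer thread is omitted for brevity.

```python
import queue
import threading


def prefetch(batches, buffer_size=2):
    """Yield items from `batches`, loading up to `buffer_size` ahead in a thread."""
    done = object()                          # sentinel marking the end of the stream
    buffer = queue.Queue(maxsize=buffer_size)

    def producer():
        for batch in batches:
            buffer.put(batch)                # blocks while the buffer is full
        buffer.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        batch = buffer.get()                 # waits only if loading has fallen behind
        if batch is done:
            return
        yield batch


def load_batches(n):
    """Hypothetical loader; a real one would read from storage here."""
    for i in range(n):
        yield [i, i + 1]


# The consumer sees the same batches in the same order, just without
# having to wait for each read to start after the previous step ends.
consumed = [batch for batch in prefetch(load_batches(4))]
```

When loading is slower than the consumer, prefetching alone cannot hide the gap, which is where faster I/O underneath the loader comes in.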

Alluxio reports that its AEAI platform can cut the share of training runtime consumed by PyTorch’s DataLoader from over 80% to less than 2%. As a result, GPU utilization rises from as low as 20% to 90% or more. AEAI can also train on cloud-based GPUs while using on-premises training data. Given how expensive and scarce GPUs can be within organizations, this is a strategic advantage. Better GPU utilization not only reduces the cost of AI model inferencing and training but also frees up capacity for more experimentation and model training.
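
A rough back-of-the-envelope reading of these figures can be sketched as follows. The model is an assumption, not something Alluxio states: it treats GPU compute time per job as fixed and data loading as not overlapped with compute, so runtime equals compute time divided by one minus the data-loading share. The function names and the 100-hour workload are hypothetical.

```python
def runtime_speedup(data_share_before, data_share_after):
    """Wall-clock speedup when only the data-loading share of runtime shrinks.

    Assumes compute time is unchanged and loading is not overlapped with
    compute, so runtime = compute_time / (1 - data_share).
    """
    if not (0 <= data_share_before < 1 and 0 <= data_share_after < 1):
        raise ValueError("shares must be in [0, 1)")
    return (1 - data_share_after) / (1 - data_share_before)


def gpu_hours_needed(compute_hours, gpu_utilization):
    """GPU hours that must be reserved to get `compute_hours` of useful work."""
    if not 0 < gpu_utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return compute_hours / gpu_utilization


# Reported DataLoader shares: over 80% before, under 2% after.
speedup = runtime_speedup(0.80, 0.02)

# Reported utilization: as low as 20% before, 90% or more after.
hours_before = gpu_hours_needed(100, 0.20)
hours_after = gpu_hours_needed(100, 0.90)
```

Under these simplifying assumptions the reported numbers correspond to a speedup of roughly 4.5x to 4.9x, and to reserving about 111 GPU hours instead of 500 for the same 100 hours of useful compute. Real jobs overlap loading and compute to varying degrees, so actual gains will differ.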

Unlocking the Potential of Large Language Models

For generative AI specifically, the Alluxio Enterprise AI platform offers an additional benefit. It is designed to accelerate the fine-tuning of pre-trained foundation models, the process that adapts general-purpose LLMs to an organization’s own data and context. That customization is central to making LLMs useful in enterprise settings.

A Paradigm Shift in AI Platforms

With these capabilities, Alluxio has found a significant AI use case for its Data Platform’s architecture, one that could prove even more consequential than the data access improvements it originally provided. No longer just a caching and acceleration layer for queries and analysis, Alluxio Enterprise AI becomes a key processing layer that makes machine learning, deep learning, and LLM training, inference, and tuning both practical and economical. That matters for large enterprises and for smaller, cost-conscious organizations alike.

Paving the Way for Enterprise-Scale AI

The precise market impact of AEAI is hard to predict, but its approach reflects the company’s ambition to change how AI platforms are built. As AI grows in prominence, enterprises cannot afford to rely on fragmented combinations of AI cloud services and generic data analytics layers. They need platforms that eliminate the redundant layers of processing, calculation, and resource use that such piecemeal approaches often create. That is the path to scalable AI, a prerequisite for the widespread, practical adoption of the technology.

The cloud may offer economical storage for the very large volumes of data required to train modern AI models, but it often falls short on efficient data access and processing. By optimizing file access over cloud object storage and making it possible to use available GPUs wherever they reside, AEAI turns what once looked like elegant efficiencies in the Alluxio Data Platform into concrete economic benefits and a shorter time-to-market in applied AI.

Conclusion:

Alluxio’s AEAI platform is a notable development for generative AI infrastructure. It combines optimizations for GPU resources, support for popular machine learning libraries, and streamlined data access through DORA. Together these could reshape the AI market by enabling faster and more cost-effective AI model development, and ultimately drive broader adoption across industries.