TL;DR:

- Amazon researchers have introduced a new AI method for distinguishing instrumental music from vocal music in large music catalogs.

- Traditional approaches to instrumental music identification tend to have low recall, missing many tracks that are actually instrumental.

- Amazon’s multi-stage method includes source separation, singing voice quantification, and background track analysis.

- The approach excels in precision and recall, outperforming existing models for instrumental music detection.

Main AI News:

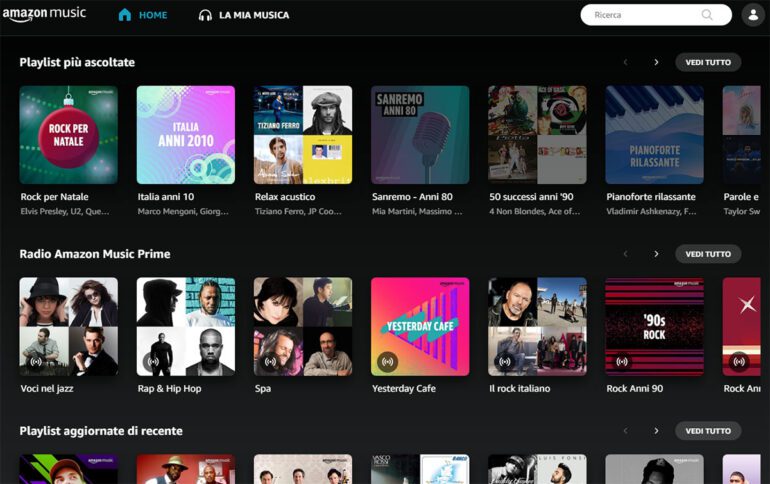

Music streaming services have become part of daily life for many listeners. Yet within their vast catalogs, reliably distinguishing instrumental tracks from vocal ones remains difficult. The distinction matters for many applications: building purpose-specific playlists (for example, music for concentration or relaxation) and serving as a first step in identifying the language of the singing, which is especially important in markets with many languages, since a track without vocals has no sung language to identify.

Academic research has produced a substantial body of scalable, content-based algorithms for automatic music tagging, which supply contextual information about tracks. These techniques typically extract low-level content features from the audio, sometimes combined with other data modalities, and feed them into supervised multi-class, multi-label models. Such models have performed well across tasks including genre prediction, mood analysis, instrument recognition, and language identification.
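To make the idea of a multi-label tagger concrete, here is a minimal sketch, assuming a model that outputs one raw score (logit) per tag and turns each into an independent probability with a sigmoid. The tag names, logits, and per-tag thresholds are hypothetical, chosen only for illustration; they do not come from the research described here.

```python
import math
from typing import Dict, List, Optional


def sigmoid(z: float) -> float:
    """Map a raw model score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def decode_tags(
    logits: Dict[str, float],
    thresholds: Optional[Dict[str, float]] = None,
    default_threshold: float = 0.5,
) -> List[str]:
    """Return every tag whose independent probability clears its threshold.

    Multi-label: each tag is scored on its own, so a track can receive
    several tags (or none) at once.
    """
    thresholds = thresholds or {}
    return sorted(
        tag
        for tag, z in logits.items()
        if sigmoid(z) >= thresholds.get(tag, default_threshold)
    )


# Hypothetical scores for a single track.
logits = {"rock": 2.0, "calm": -1.0, "instrumental": 0.1, "english": 1.5}

# A strict threshold on "instrumental" favors precision over recall.
print(decode_tags(logits, thresholds={"instrumental": 0.9}))  # ['english', 'rock']
print(decode_tags(logits))  # ['english', 'instrumental', 'rock']
```

Raising the threshold on a single tag, as in the first call, makes the tagger more conservative about that tag, which is one way a system can end up with high precision but low recall.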

In recent work, a team of Amazon researchers set out to improve automatic instrumental music detection. They argue that conventional tagging approaches fall short in this domain: when tuned for high precision, these models tend to have low recall for instrumental music, meaning they miss a large share of tracks that are in fact instrumental.
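The precision/recall trade-off can be made concrete with a small worked example. The counts below are invented purely for illustration: a toy catalog of 20 tracks, 10 of them truly instrumental, and a conservative detector that flags only 3 tracks, all correctly.

```python
from typing import Sequence, Tuple


def precision_recall(y_true: Sequence[bool], y_pred: Sequence[bool]) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# True = the track is actually instrumental (hypothetical labels).
y_true = [True] * 10 + [False] * 10
# The detector flags only 3 of the 10 instrumental tracks and no vocal ones.
y_pred = [True] * 3 + [False] * 7 + [False] * 10

print(precision_recall(y_true, y_pred))  # (1.0, 0.3)
```

Every flagged track is correct (precision 1.0), yet 7 of the 10 instrumental tracks are missed (recall 0.3), which is the failure mode the researchers attribute to conventional models.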

To address this, the team proposes a multi-stage method for instrumental music detection, consisting of the following stages:

- Source Separation Model: In the first stage, a source separation model splits the audio recording into two components: vocals and accompaniment (the background music). This split is useful because an instrumental track should, in principle, contain no vocals, so any vocal content can be measured directly on the separated vocal stem.

- Quantification of Singing Voice: The second stage quantifies the amount of singing voice in the separated vocal signal. This measure indicates whether a track contains vocals: if it falls below a predefined threshold, the recording is considered instrumental.

- Background Track Analysis: The third stage examines the background track, which carries the instrumental part of the song. A neural network trained to classify sounds as instrumental or non-instrumental checks whether the background recording actually contains musical instruments. Finally, a binary classifier decides whether the track is instrumental, based on whether the amount of singing voice in the vocal signal falls below the established threshold.
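The three stages can be sketched as a small pipeline. This is a minimal illustration under several assumptions, not Amazon's implementation: the source separator and the background-instrument classifier are passed in as placeholder callables (in practice these would be trained models), the amount of singing voice is approximated as the fraction of frames whose RMS energy in the vocal stem exceeds an energy floor, and the final decision combines the two signals with a simple AND rule. The function names, thresholds, and the RMS proxy are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Signal = Sequence[float]  # mono audio as a sequence of float samples


def frame_rms(signal: Signal, frame_len: int) -> List[float]:
    """RMS energy of consecutive, non-overlapping frames (partial tail dropped)."""
    rms = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        rms.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return rms


def singing_voice_ratio(vocals: Signal, frame_len: int = 1024,
                        energy_floor: float = 0.02) -> float:
    """Stage 2 (assumed proxy): share of vocal-stem frames above an energy floor."""
    rms = frame_rms(vocals, frame_len)
    if not rms:
        return 0.0
    return sum(r > energy_floor for r in rms) / len(rms)


def is_instrumental(
    audio: Signal,
    separate: Callable[[Signal], Tuple[Signal, Signal]],  # hypothetical separator
    has_instruments: Callable[[Signal], bool],            # hypothetical classifier
    voice_threshold: float = 0.05,
) -> bool:
    vocals, accompaniment = separate(audio)     # stage 1: vocals vs. accompaniment
    voice = singing_voice_ratio(vocals)         # stage 2: how much singing voice
    # Stage 3 plus decision (assumed AND rule): little voice AND real instruments.
    return voice < voice_threshold and has_instruments(accompaniment)


# Toy usage with stand-in components (not real models).
sr = 8000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
silent_vocals = [0.0] * sr


def fake_separate(audio: Signal) -> Tuple[Signal, Signal]:
    # Pretend the separator found no vocals at all.
    return silent_vocals, audio


def fake_has_instruments(accompaniment: Signal) -> bool:
    # Pretend any non-silent accompaniment contains instruments.
    return max(frame_rms(accompaniment, 1024), default=0.0) > 0.02


print(is_instrumental(tone, fake_separate, fake_has_instruments))  # True
```

Swapping in a separator that leaves the full tone in the vocal stem (for example `lambda a: (a, a)`) drives the voice ratio to 1.0, and the same track is then classified as non-instrumental. Keeping the separator and classifier as injected callables makes it easy to replace each stage independently.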

This multi-stage approach is designed to reach a definitive verdict on whether a given piece of music is instrumental, drawing on both the presence of singing voice and features of the background music. The researchers also compare the method against state-of-the-art models for instrumental music detection and report that it outperforms them.

Conclusion:

Amazon’s AI approach to identifying instrumental music could have a notable impact on the music streaming market. More accurate detection of instrumental tracks would let streaming services improve playlist curation, user experience, and language-based categorization, better serving diverse listener preferences and market demands. The work also has potential applications in music recommendation and content personalization, which could strengthen Amazon’s position in the competitive music streaming landscape.