TL;DR:

- Amphion, an open-source toolkit, leads the way in AI-driven audio, music, and speech generation.

- Developed by researchers from prestigious institutions, including The Chinese University of Hong Kong and Shanghai AI Lab.

- Amphion emphasizes reproducible research, offering unique visualizations of classical models.

- Its central goal is to enable a comprehensive understanding of audio conversion from diverse inputs.

- Supports individual generation tasks, high-quality audio production with vocoders, and vital evaluation metrics.

- The thriving open-source community sees Amphion as a standout platform for diverse audio generation tasks.

- Deep learning advancements drive progress in generative models for audio, music, and speech processing.

- Amphion fills a crucial gap by unifying generation tasks and providing a comprehensive framework.

- Its visualizations enhance user comprehension of generative processes.

- While Amphion outlines its purpose and features, specific experimental results are yet to be included.

Main AI News:

In the ever-evolving realm of artificial intelligence, the landscape of audio, music, and speech generation is experiencing groundbreaking advancements. Within the thriving open-source community, numerous toolkits have emerged, each contributing to the ever-expanding repository of algorithms and techniques. Among these, Amphion, developed by a team of researchers hailing from The Chinese University of Hong Kong, Shenzhen, Shanghai AI Lab, and Shenzhen Research Institute of Big Data, stands out as a game-changer, boasting unique features and a steadfast commitment to promoting reproducible research.

Amphion is a versatile toolkit that serves as a catalyst for research and development in the domains of audio, music, and speech generation. It places a strong emphasis on enabling reproducible research through innovative visualizations of classical models. At its core, Amphion is dedicated to providing a holistic understanding of audio conversion, accommodating a wide array of input variations. This exceptional toolkit not only supports individual generation tasks but also offers state-of-the-art vocoders for the production of high-quality audio, complemented by essential evaluation metrics to ensure consistent performance assessment.

This study highlights the rapid evolution of audio, music, and speech generation, all thanks to the advancements in machine learning. In the thriving open-source community, an abundance of toolkits cater to these domains, but Amphion distinguishes itself as the sole platform capable of handling diverse generation tasks encompassing audio, music-singing, and speech. What sets Amphion apart is its distinctive visualization feature, which enables users to interactively explore the generative process, providing invaluable insights into the inner workings of the models.

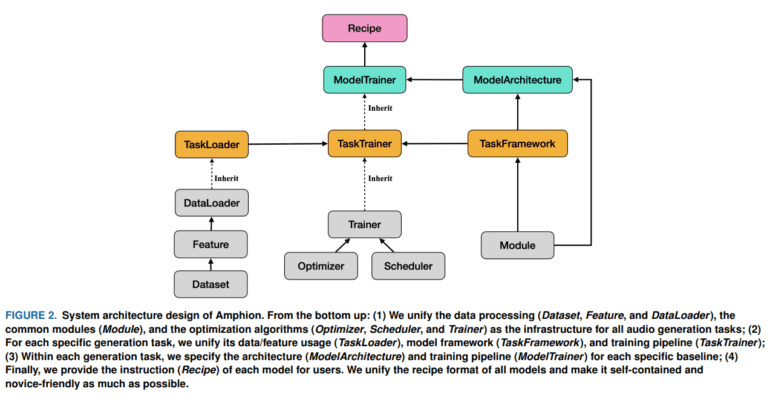

The progress in deep learning has propelled generative models forward in audio, music, and speech processing. However, this surge in research has led to the proliferation of scattered open-source repositories, often lacking systematic evaluation metrics and consistency in quality. Amphion addresses these challenges head-on with its open-source platform, designed to facilitate the study of converting diverse inputs into high-quality audio. It unifies all generation tasks under a comprehensive framework that encompasses feature representations, evaluation metrics, and dataset processing. The unique visualizations of classical models offered by Amphion deepen user comprehension of the entire generation process.

Amphion’s commitment to enhancing user understanding through visualizations, ensuring high-quality audio production with vocoders, and maintaining consistency through evaluation metrics is commendable. This toolkit also delves into successful generative models for audio, including autoregressive, flow-based, GAN-based, and diffusion-based models, making it an all-encompassing resource for researchers and developers alike. While this study provides a comprehensive overview of Amphion’s purpose and features, it is important to note that specific experimental results and findings are not included at this stage, leaving room for further exploration and discovery in this dynamic field.

Conclusion:

Amphion’s emergence as a leading AI toolkit for audio, music, and speech generation signifies a promising direction for the market. Its commitment to reproducible research and comprehensive understanding, coupled with its versatile support for diverse tasks, positions it as a valuable resource for researchers and developers. As the field of generative models continues to evolve, Amphion’s unique features and contributions are poised to drive innovation and shape the future of audio and speech technology.