TL;DR:

- The Interactive Agent Foundation Model proposes a unified pre-training framework for AI agents, treating text, visual data, and actions as distinct tokens.

- It leverages pre-trained language and visual-language models to predict masked tokens across all modalities, enabling seamless interaction with humans and environments.

- The model’s architecture incorporates pre-trained CLIP ViT-B16 for visual encoding and OPT-125M for action and language modeling, facilitating cross-modal information sharing.

- With 277M parameters jointly pre-trained across diverse domains, it demonstrates effective engagement in multi-modal settings across various virtual environments.

- Initial evaluations across robotics, gaming, and healthcare domains show promising results, with competitive performance in robotics and gaming tasks and superior performance in healthcare applications.

Main AI News:

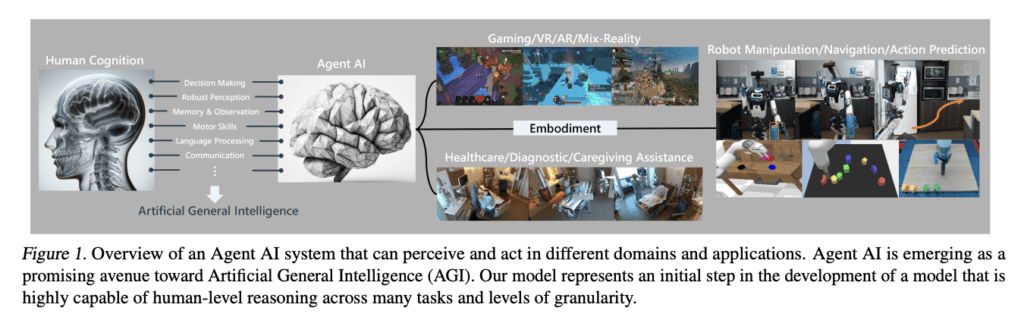

In the rapidly evolving landscape of artificial intelligence (AI), there’s a clear shift from static, task-centric models to dynamic, adaptable agent-based systems. These systems are designed to work across many applications, gathering sensory data and engaging effectively with a variety of environments, which has been an enduring goal in AI research.

One promising avenue in this evolution is the development of generalist AI models. Rather than focusing narrowly on specific tasks, these models are trained to handle a wide array of tasks and data types. This approach not only streamlines development but also enhances scalability, leveraging vast amounts of data, computational resources, and model parameters.

Recent studies have underscored the benefits of such generalist AI systems. By training a single neural model across diverse tasks and data types, researchers have achieved scalability previously unseen in AI development. However, significant challenges remain. Large foundation models, while powerful, often hallucinate and produce inaccurate outputs, in part because they lack sufficient grounding in real-world training environments.

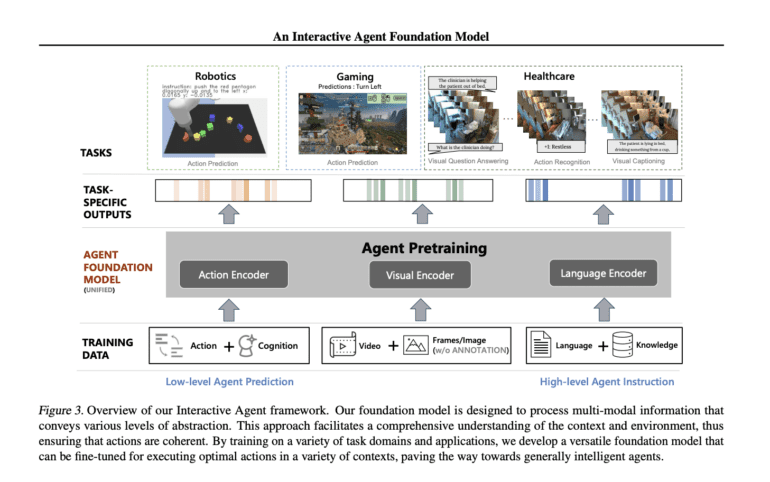

To address these challenges, a team of researchers from Stanford University, Microsoft Research (Redmond), and the University of California, Los Angeles, has introduced the Interactive Agent Foundation Model. The model uses a unified pre-training framework that treats text, visual data, and actions as distinct tokens. Building on pre-trained language and visual-language models, it is trained to predict masked tokens across all modalities, which is intended to let a single model interact with both humans and environments.
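As a rough illustration of what "distinct tokens" and "masked token prediction" can mean in practice, the toy sketch below builds one sequence from text, visual, and action tokens and masks a random subset of it. The `Token` class, the interleaving order, the `<mask>` placeholder, and the masking probability are all assumptions made for illustration; they are not taken from the model's actual implementation.

```python
import random
from dataclasses import dataclass

MASK = "<mask>"  # hypothetical placeholder for a hidden token


@dataclass(frozen=True)
class Token:
    modality: str  # "text", "visual", or "action"
    value: str


def build_sequence(text, frames, actions):
    """Place instruction text first, then one (frame, action) pair per step.

    The ordering is an assumption chosen for this sketch.
    """
    seq = [Token("text", word) for word in text]
    for frame, action in zip(frames, actions):
        seq.append(Token("visual", frame))
        seq.append(Token("action", action))
    return seq


def mask_sequence(seq, mask_prob, rng):
    """Hide each token with probability mask_prob.

    Returns (inputs, targets). A target is the original value where the
    token was masked and None where no prediction is required.
    """
    inputs, targets = [], []
    for tok in seq:
        if rng.random() < mask_prob:
            inputs.append(Token(tok.modality, MASK))
            targets.append(tok.value)
        else:
            inputs.append(tok)
            targets.append(None)
    return inputs, targets


if __name__ == "__main__":
    seq = build_sequence(["pick", "up"], ["frame0", "frame1"], ["grasp", "lift"])
    inputs, targets = mask_sequence(seq, 0.3, random.Random(0))
    for tok, target in zip(inputs, targets):
        print(tok.modality, tok.value, target)
```

A real model would replace the string values with embeddings and train a network to recover each non-`None` target from the surrounding unmasked tokens, regardless of modality.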

At the core of the Interactive Agent Foundation Model is its architecture. The model is initialized with pre-trained CLIP ViT-B16 for visual encoding and OPT-125M for action and language modeling, and it shares information across modalities through linear layer transformations. To stay within memory constraints, previous actions and visual frames are incorporated using a sliding window approach. Sinusoidal positional embeddings are used when predicting masked visual tokens.
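The three mechanisms named in this paragraph (a linear projection between embedding spaces, a sliding window over past steps, and sinusoidal positional embeddings) can each be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the function and class names, the window size, and the tiny dimensions are hypothetical, and the sinusoidal formula is the standard one from the original Transformer paper rather than anything confirmed for this model.

```python
import math
from collections import deque


def project(features, weight, bias):
    """Linear layer: map a feature vector into another embedding space.

    weight is a list of rows (out_dim x in_dim); bias has length out_dim.
    """
    return [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weight, bias)
    ]


def sinusoidal_embedding(position, dim):
    """Standard sinusoidal positional embedding: sin on even, cos on odd indices."""
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    emb = []
    for i in range(0, dim, 2):
        angle = position / (10000 ** (i / dim))
        emb.append(math.sin(angle))
        emb.append(math.cos(angle))
    return emb


class SlidingContext:
    """Keep only the most recent `window` (frame features, action) steps."""

    def __init__(self, window):
        # deque with maxlen silently drops the oldest step when full
        self.steps = deque(maxlen=window)

    def push(self, frame_features, action):
        self.steps.append((frame_features, action))

    def context(self):
        return list(self.steps)


if __name__ == "__main__":
    ctx = SlidingContext(window=3)  # window size is an illustrative choice
    for step in range(5):
        visual = project([float(step), 1.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
        ctx.push(visual, f"action_{step}")
    print(len(ctx.context()))
    print(sinusoidal_embedding(1, 4))
```

Bounding the context with a fixed-size window keeps memory use constant as an episode grows, at the cost of discarding older frames and actions.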

One notable aspect of this model is its holistic approach to training. Unlike previous methods that relied on frozen submodules, the Interactive Agent Foundation Model trains all of its components jointly during pre-training, so the entire model is optimized together rather than only selected parts.

Initial evaluations across robotics, gaming, and healthcare domains have yielded promising results. While there are instances where the model is outperformed by others due to data limitations for pre-training, it demonstrates competitive performance, particularly excelling in robotics tasks. Fine-tuning of the pre-trained model has proven especially effective in gaming scenarios, showcasing its versatility and adaptability across different applications.

In healthcare settings, the model surpasses several baselines, leveraging its diverse pre-training approach to achieve superior performance. Overall, the Interactive Agent Foundation Model represents a significant step forward in AI development, promising greater flexibility, scalability, and effectiveness across a wide range of domains and tasks.

Source: Marktechpost Media Inc.

Conclusion:

The introduction of the Interactive Agent Foundation Model represents a significant advancement in AI development, offering a unified approach to training across diverse modalities. Its promising performance across various domains indicates its potential to revolutionize the market, providing businesses with more adaptable and effective AI solutions for a wide range of applications. As the model continues to evolve and improve, organizations can anticipate enhanced capabilities and opportunities for innovation in their respective industries.