TL;DR:

- AO-Grasp improves robots' ability to grasp and interact with articulated objects such as cabinets and appliances.

- Researchers emphasize the importance of stable and actionable grasping solutions for articulated objects.

- Existing methods lack comprehensive grasp-generation solutions for articulated objects.

- The AO-Grasp Dataset and model provide data and methods for secure and actionable grasps.

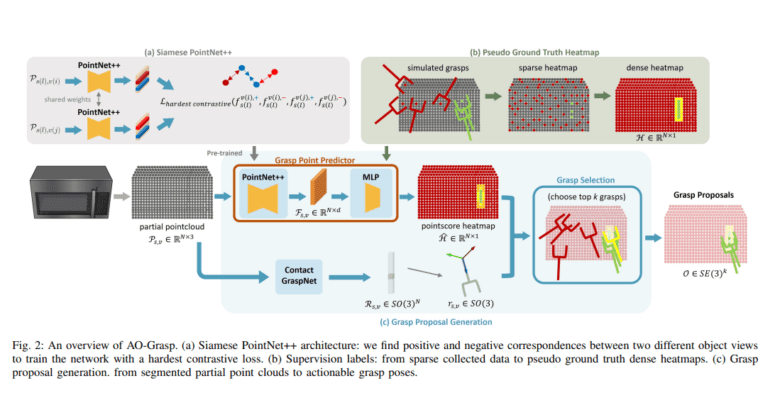

- AO-Grasp comprises an Actionable Grasp Point Predictor model and an advanced approach for grasping rigid objects.

- The model's performance is compared with that of Contact-GraspNet (CGN), highlighting differences in the two models' training data.

- AO-Grasp outperforms existing baselines in both simulated and real-world scenarios.

- The technology excels in generating grasp-likelihood heatmaps for objects with movable parts.

- AO-Grasp generalizes well to object categories it did not see during training.

Main AI News:

In recent years, robots have been adopted across industries ranging from manufacturing to healthcare. Their usefulness, however, depends on how well they interact with their surroundings, especially when handling objects, and central to that interaction is grasping objects securely. This is where AO-Grasp steps in: it generates stable, dependable grasps on articulated objects. AO-Grasp has raised grasp success rates in both synthetic and real-world tests, strengthening robots' ability to interact with cabinets and appliances.

Within grasp planning, the researchers stress that grasps on articulated objects must be not only stable but also actionable, meaning the grasp lets the robot actually move the object's articulated part (for example, pulling a cabinet door open). Existing methods often fail to produce robust and varied prehensile grasps: they tend either to oversimplify grasp generation or to focus on non-prehensile interaction policies, such as pushing or pulling a part without grasping it. The researchers also point to the scarcity of real-world evaluations and the need for large grasp datasets tailored to articulated objects. Finally, they highlight the many challenges of grasping such objects, including the need to understand local geometry in order to identify suitable grasp points.

The proposed method tackles the problem of interacting effectively with articulated objects, such as cabinets and appliances, that have movable parts. Grasping these objects is inherently difficult: a grasp must be both stable and actionable, and the graspable regions change with the object's joint configuration (a drawer handle, for instance, moves as the drawer slides open). Because existing research has focused mainly on non-articulated objects, the researchers introduce the AO-Grasp Dataset and model. Together they supply the data and a method for generating secure, actionable grasps on articulated objects, enabling robots to handle these objects across a wide range of manipulation tasks.

The researchers introduce the AO-Grasp method, designed to produce stable and actionable grasps on articulated objects. It has two components: an Actionable Grasp Point Predictor model and a state-of-the-art approach for grasping rigid objects. The predictor model is trained on the AO-Grasp Dataset, which contains 48,000 actionable grasps on synthetic articulated objects, and identifies the best points on an object to grasp. The researchers also compare the model's orientation predictions with those of the CGN model, which is trained on the ACRONYM dataset, highlighting the differences between the two models' training data. Finally, the approach addresses the difficulties of training the predictor model and uses pseudo-ground-truth labels to mitigate overfitting.
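The two-stage structure described above (first pick grasp points, then assign each one a gripper orientation) can be sketched in Python. This is a minimal, hypothetical illustration, not the researchers' code: `propose_grasps`, `GraspProposal`, `toy_scorer`, and `toy_orientation` are invented names. The scorer stands in for the Actionable Grasp Point Predictor, returning one grasp-likelihood score per point (a heatmap over the point cloud), and the orientation function is a placeholder for whichever rigid-object grasping method supplies orientations. The (w, x, y, z) quaternion format is also an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
Quaternion = Tuple[float, float, float, float]  # (w, x, y, z); assumed format


@dataclass
class GraspProposal:
    point: Point             # grasp point chosen in stage 1
    score: float             # grasp likelihood from the (hypothetical) point scorer
    orientation: Quaternion  # gripper orientation from the (hypothetical) stage 2


def propose_grasps(
    cloud: Sequence[Point],
    point_scorer: Callable[[Sequence[Point]], List[float]],
    orientation_predictor: Callable[[Sequence[Point], Point], Quaternion],
    top_k: int = 5,
) -> List[GraspProposal]:
    """Hypothetical two-stage pipeline: score every point, keep the top-k, add orientations."""
    scores = point_scorer(cloud)  # stage 1: one grasp likelihood per point (a heatmap)
    if len(scores) != len(cloud):
        raise ValueError("point_scorer must return one score per point")
    # Rank point indices from highest to lowest grasp likelihood.
    ranked = sorted(range(len(cloud)), key=lambda i: scores[i], reverse=True)
    proposals = []
    for i in ranked[:top_k]:
        # Stage 2: ask the orientation predictor for a gripper pose at this point.
        quat = orientation_predictor(cloud, cloud[i])
        proposals.append(GraspProposal(cloud[i], scores[i], quat))
    return proposals


# --- Toy stand-ins for illustration only (not trained models) ---
def toy_scorer(cloud: Sequence[Point]) -> List[float]:
    # Scores points by their x coordinate, min-max normalized to [0, 1].
    xs = [p[0] for p in cloud]
    lo, hi = min(xs), max(xs)
    span = (hi - lo) or 1.0  # avoid division by zero when all x values are equal
    return [(x - lo) / span for x in xs]


def toy_orientation(cloud: Sequence[Point], point: Point) -> Quaternion:
    return (1.0, 0.0, 0.0, 0.0)  # identity rotation placeholder


cloud = [(0.0, 0.0, 0.0), (0.5, 0.1, 0.0), (1.0, 0.2, 0.0), (0.25, 0.0, 0.1)]
for g in propose_grasps(cloud, toy_scorer, toy_orientation, top_k=2):
    print(g.point, round(g.score, 2))
# prints:
# (1.0, 0.2, 0.0) 1.0
# (0.5, 0.1, 0.0) 0.5
```

Passing the scorer and orientation predictor in as plain callables keeps the sketch agnostic to the underlying models; in a real system each would presumably wrap a trained network.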

In simulation, AO-Grasp consistently outperforms established baselines for both rigid and articulated objects, achieving substantially higher success rates. In real-world testing, AO-Grasp succeeds in 67.5% of scenarios, compared with 33.3% for the baseline. Across object states and categories, it outperforms both Contact-GraspNet (CGN) and Where2Act. It is particularly strong at generating grasp-likelihood heatmaps for objects with multiple movable parts. Its success margin over CGN is even larger for objects in closed states, underscoring AO-Grasp's strength with articulated objects. AO-Grasp also generalizes well to object categories it did not see during training.
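To put the real-world figures in perspective, the short calculation here expresses the gap between the two reported success rates both as an absolute difference and as a ratio. The only inputs are the 67.5% and 33.3% figures from the article; the variable names are illustrative.

```python
ao_grasp_rate = 67.5  # % of real-world scenarios with a successful grasp (reported above)
baseline_rate = 33.3  # % for the baseline (reported above)

gap_points = ao_grasp_rate - baseline_rate  # absolute gap in percentage points
ratio = ao_grasp_rate / baseline_rate       # relative improvement factor

print(f"gap: {gap_points:.1f} percentage points")
print(f"ratio: {ratio:.2f}x")
# prints:
# gap: 34.2 percentage points
# ratio: 2.03x
```

In other words, AO-Grasp roughly doubled the baseline's real-world success rate.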

Conclusion:

AO-Grasp represents a significant advance for robotics, enabling robots to interact efficiently with articulated objects. Its success in both simulated and real-world tests, together with its ability to handle objects with movable parts, could make it a transformative tool for the industry. The technology opens up new possibilities for manipulation tasks across many sectors, making it a valuable asset for businesses seeking to optimize their robotic operations.