TL;DR:

- Arthur introduces "Arthur Bench," an open source tool to help companies choose the right Large Language Model (LLM).

- The tool addresses the difficulty of measuring how well LLMs perform, helping match model choices to the needs of a specific application.

- Arthur Bench lets users systematically test how LLMs perform on a variety of prompts, supporting better-informed decisions.

- The open source version targets the developer community, while a planned SaaS version will serve customers with more complex testing requirements.

- The release follows Arthur's May launch of "Arthur Shield," an LLM firewall.

Main AI News:

Arthur, a machine learning monitoring company, has been building on the surge of interest in generative AI to develop tools that help companies get more out of Large Language Models (LLMs). Today it released Arthur Bench, an open source tool designed to help users find the best LLM for their particular data.

Adam Wenchel, Arthur's CEO and co-founder, says the company has focused on generative AI and LLMs as industry interest has grown, and has put its effort into building new products for that market.

Wenchel points to a gap in the market: less than a year after the release of ChatGPT, companies still have no systematic way to measure how one tool performs against another. Arthur Bench is meant to fill that gap.

“Arthur Bench is the antidote to one of the most pressing quandaries resonating through every echelon of our customer base: amid the plethora of model choices, how does one discern the paramount selection for a specific application?” Wenchel told TechCrunch.

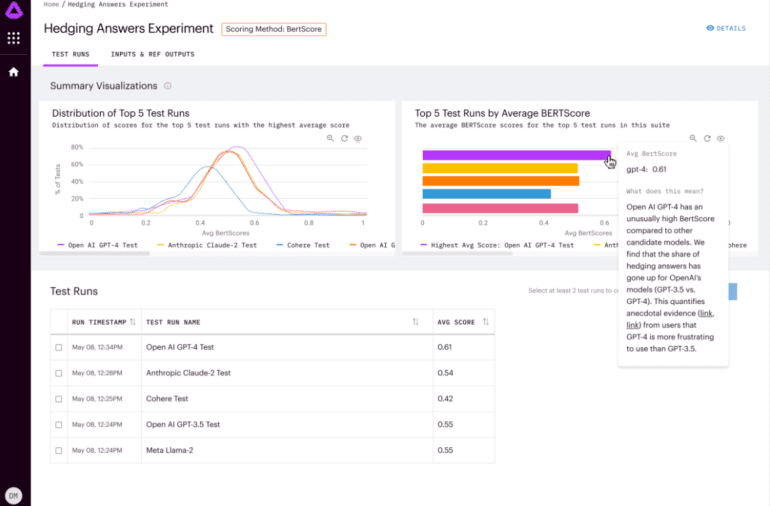

The tool includes a set of instruments for rigorously testing performance, but its main value is that it lets users test and measure how different LLMs respond to the kinds of prompts their own applications will actually use.

“Imagine orchestrating tests for a diverse array of 100 prompts, followed by an intricate analysis of how two distinct LLMs – say Anthropic pitted against OpenAI – fare when confronted with the very prompts that your user base is poised to deploy,” Wenchel said. He added that this kind of testing can be done at scale, making it easier to decide which model best fits each use case.
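To make the workflow Wenchel describes more concrete, here is a minimal sketch in plain Python of scoring two models on the same prompt set and picking the better one. It does not use Arthur Bench's actual API: the model functions, the canned responses, and the exact-match scorer are all hypothetical stand-ins. A real evaluation would call live model endpoints and would likely use a more forgiving scoring method.

```python
from typing import Callable, Dict, List, Tuple

# A test case pairs a prompt with a reference answer (hypothetical data).
TestCase = Tuple[str, str]


def exact_match(candidate: str, reference: str) -> float:
    """Score 1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return 1.0 if candidate.strip().lower() == reference.strip().lower() else 0.0


def score_model(model: Callable[[str], str], cases: List[TestCase],
                scorer: Callable[[str, str], float] = exact_match) -> float:
    """Run every prompt through the model and return the mean score."""
    if not cases:
        raise ValueError("need at least one test case")
    total = sum(scorer(model(prompt), reference) for prompt, reference in cases)
    return total / len(cases)


def compare_models(models: Dict[str, Callable[[str], str]],
                   cases: List[TestCase]) -> Dict[str, float]:
    """Score each named model on the same set of prompts."""
    return {name: score_model(fn, cases) for name, fn in models.items()}


# Hypothetical prompts and reference answers.
cases: List[TestCase] = [
    ("What is the capital of France?", "Paris"),
    ("What is 2 + 2?", "4"),
    ("What color is the sky on a clear day?", "Blue"),
]

# Hypothetical canned responses standing in for two real LLMs.
canned_a = {
    "What is the capital of France?": "Paris",
    "What is 2 + 2?": "4",
    "What color is the sky on a clear day?": "blue",
}
canned_b = {
    "What is the capital of France?": "Paris, France",
    "What is 2 + 2?": "4",
    "What color is the sky on a clear day?": "Blue",
}

scores = compare_models(
    {"model_a": lambda p: canned_a[p], "model_b": lambda p: canned_b[p]},
    cases,
)
best = max(scores, key=scores.get)
print(scores)
print("best:", best)  # best: model_a
```

Exact match penalizes "Paris, France" even though it is correct, which illustrates why the choice of scoring function matters as much as the prompt set. Because the scorer is passed in as a parameter, a different metric can be swapped in without changing the comparison loop.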

Arthur Bench is being released as open source for the developer community. A Software as a Service (SaaS) version is also planned for customers who would rather not manage the open source version themselves, or who need more extensive testing capabilities and are willing to pay for them. For now, the company is concentrating on the open source project.

The release follows the May launch of Arthur Shield, an LLM firewall designed to detect model hallucinations and to guard against harmful misinformation and leaks of confidential data.

Conclusion:

Arthur's release of the open source tool "Arthur Bench" is a significant step for Large Language Models (LLMs). The tool tackles a key industry problem, choosing the best LLM for a specific application, and lets users evaluate and measure model responses at scale. It fits the broader market push toward more capable LLMs by supporting better-informed decisions in a fast-changing field. Together with "Arthur Shield," it reflects the company's approach of helping businesses both use LLMs more effectively and guard against their risks. This points to a promising direction for the market as businesses look for comprehensive tools to put LLMs to work in their operations.