TL;DR:

- European Parliament approves groundbreaking Artificial Intelligence Regulation (AI Act) proposal, initiating interinstitutional negotiations for a final text.

- The regulation aims to promote human-centric and trustworthy AI while ensuring the protection of health, safety, rights, democracy, the rule of law, and the environment.

- Controversy arises as regulation covers technology itself, potentially impeding innovation.

- The rapid adoption of generative AI tools, like ChatGPT, prompts the EU’s swift response with the AI Act proposal.

- Debates arise regarding the risks and benefits of AI, with calls for global prioritization of risk mitigation.

- Telefónica advocates for responsible digitalization, embedding ethical principles and transparency requirements.

- AI Act should support innovation, enhance the internal market, and deliver positive societal and economic impact.

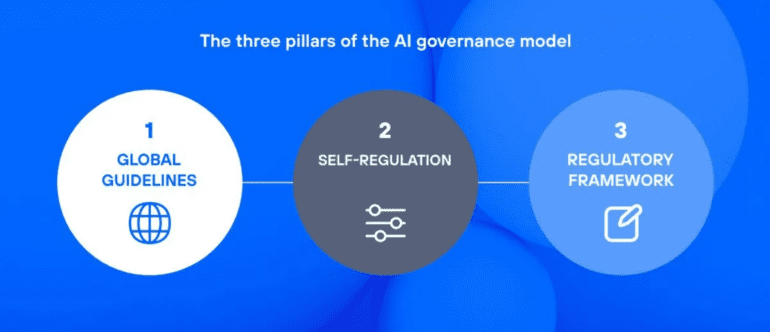

- Three pillars of AI governance are proposed: global guidelines, self-regulation, and a suitable regulatory framework.

- Global guidelines are needed for legal certainty and protection of rights; AI Pact and international initiatives contribute to this.

- Self-regulation offers efficiency and innovation opportunities for low-risk applications.

- Telefónica embraces ethical principles for AI throughout the company’s value chain.

- A holistic approach combining international cooperation, self-regulation, public policies, and risk-based regulation is required.

- Striking the right balance between innovation and regulation is crucial for the AI market’s growth and development.

Main AI News:

As we venture into the realm of artificial intelligence (AI), a groundbreaking milestone has been reached. The European Parliament has given its seal of approval to the pioneering proposal for an Artificial Intelligence Regulation (the AI Act). This momentous development signifies not the culmination, but rather the initiation of EU interinstitutional negotiations aimed at achieving a consensus on the final text.

The forthcoming Regulation aims to set a global standard and to foster the adoption of human-centric and trustworthy AI. Its purpose is twofold: to safeguard health, safety, fundamental rights, democracy, the rule of law, and the environment against potential adverse effects of AI systems, while also encouraging the widespread acceptance of those systems. Nonetheless, the controversial aspect of this approach lies in its regulation of not just the impact and use of the technology, but also the technology itself. Foundation models and generative AI could face impediments, potentially slowing the pace of innovation.

The Emergence of Generative AI Tools and Their Implications on Governance Models

The rapid proliferation of ChatGPT, together with the controversies it has sparked, has likely contributed to the EU’s swift progress on the AI Act proposal. Generative AI tools are steadily finding their way into various business functions, and their societal ramifications have raised growing concerns.

Prominent figures within the AI industry, including Sam Altman, CEO of OpenAI, and Demis Hassabis, CEO of Google DeepMind, advocate for treating the mitigation of AI-related risks as a global priority, comparable to other existential risks like pandemics and nuclear war. However, dissenting voices challenge this perspective. Marc Andreessen, co-creator of Mosaic, one of the first widely used web browsers, who famously proclaimed over a decade ago that “software is eating the world,” recently posed the question, “Will AI save the world?” His query adds to the ongoing debate surrounding the risks associated with AI.

While there is a general consensus on the imperative to mitigate potential AI risks, at Telefónica, we believe that a culture of prohibition or excessive regulation targeting the technology itself is not the optimal approach. The pace of technological evolution outpaces regulatory changes, rendering technology-focused regulations swiftly outdated. Such outdated regulations could bear adverse consequences for society and the economy at large. Instead, we advocate for responsible digitalization, embedding ethical principles and transparency requirements within our AI governance model, including generative AI.

The Future Trajectory of the AI Act for Societal and Economic Progress

Throughout the negotiation process, the EU institutions must not lose sight of the fact that AI is a transformative technology poised to deliver profound benefits to our economy and society. This game-changing technology acts as a catalyst for regional and business competitiveness. Consequently, the AI Act must foster innovation and enhance the functioning of the internal market, acknowledging the pivotal role AI plays in today’s data-driven era. It holds the potential to spur service innovation, enable new business models, and generate efficiencies, all while contributing positively to society.

The central question lies in achieving innovation with a human-centric and trustworthy approach. At Telefónica, we leverage our public positioning to showcase our commitment to prosperity, coupled with the protection of individuals’ rights and our societal framework.

The Three Pillars of Governance: Global Guidelines, Self-Regulation, and Regulatory Framework

Our comprehensive perspective on AI governance revolves around three fundamental pillars: global guidelines, self-regulation, and a fitting regulatory framework. We firmly believe that the EU AI Act’s scope should be limited, and that the Act should be supplemented by regional guidelines in harmony with global agreements and by responsible conduct guided by ethical principles.

Regional and Global Guidelines

The domain of AI transcends national boundaries, necessitating global solutions and unified approaches to ensure legal certainty and protect people’s rights. To foster a worldwide convergence of ethical principles and practices, we require guidelines and collaboration. The introduction of the AI Pact within Europe, a voluntary commitment from the industry that precedes the AI Act, aims to offer certainty in the regulation’s implementation, bolster innovation, and enhance the internal market’s functioning.

Furthermore, international initiatives have been endorsed to address challenges and promote the ethical use of AI. In May 2023, the G7 nations committed to prioritizing collaboration on inclusive AI governance. Governments emphasized the significance of forward-looking, risk-based approaches aligned with shared democratic values, ensuring trustworthy AI.

In a similar vein, during the fourth EU-US Trade and Technology Council (TTC) ministerial meeting in May 2023, the TTC explicitly included generative AI systems, such as ChatGPT, in the scope of the Joint Roadmap on Evaluation and Measurement Tools for Trustworthy AI and Risk Management. Margrethe Vestager, the European Commission Executive Vice-President, announced collaborative efforts between the EU and the US to establish a voluntary AI Code of Conduct ahead of formal regulations. The objective is to develop non-binding international standards encompassing risk audits, transparency, and other requirements for companies engaged in AI system development, thereby encouraging voluntary compliance.

Self-Regulation

Self-regulation presents noteworthy opportunities. Firstly, the rapid pace of AI development outstrips the adoption of norms, which typically take years to materialize. Secondly, the complexity of AI makes it challenging to formulate a priori regulations applicable across diverse contexts, and attempts to do so could stifle innovation. Thirdly, for uses deemed low-risk, self-regulation proves more financially and administratively efficient. Importantly, self-regulation never compromises the protection of individuals’ rights, health, democracy, and safety; on the contrary, it enhances digital services and broadens individual and collective opportunities.

Telefónica has embraced ethical principles for AI that permeate the organization. These principles are integrated from the design and development stages, encompassing the use of products and services by employees, suppliers, and third parties. Our application of these principles rests on a “Responsibility by Design” framework, enabling the incorporation of ethical and sustainable criteria throughout the value chain.

Regulatory Framework

Navigating the AI regulation debate demands a holistic vision that combines international cooperation, self-regulation, the formulation of sound public policies, and a risk-based regulatory approach. This comprehensive strategy aims to mitigate risks while fostering a human-centric and trustworthy framework conducive to innovation and economic growth. Such an approach champions the ethical deployment of technology and its widespread adoption.

Conclusion:

The approval of the Artificial Intelligence Regulation (AI Act) proposal by the European Parliament marks a significant step toward establishing a standardized framework for AI governance. While the regulation’s focus on the technology itself raises concerns about potential hindrances to innovation, it underscores the importance of ensuring human-centric and trustworthy AI. The swift response to the growing adoption of generative AI tools demonstrates the urgency of addressing the associated risks.

For businesses operating in the AI market, it is essential to embrace responsible digitalization and integrate ethical principles throughout their operations. By striking a balance between global guidelines, self-regulation, and a well-structured regulatory framework, the market can foster innovation, enhance the internal market, and navigate the evolving landscape of AI governance successfully.