- Baidu introduces a new self-reasoning framework aimed at improving the reliability and traceability of Retrieval-Augmented Language Models (RALMs).

- The framework addresses common issues such as noisy retrievals and inadequate citations by generating self-reasoning trajectories through three processes: a relevance-aware process, an evidence-aware selective process, and a trajectory analysis process.

- Performance testing shows the framework achieves results comparable to GPT-4 with only 2,000 training samples and enhances interpretability without external models.

- Previous approaches to integrating external information into LLMs have often relied on complex and costly add-ons, such as separate natural language inference or summarization models.

- Baidu’s method simplifies the process by identifying key sentences and citing relevant documents within a unified framework.

- The framework demonstrated superior performance on short-form and long-form QA datasets and on fact verification, with high citation recall and accuracy.

- An ablation study highlighted the importance of each component in the framework. The framework also proved robust to noisy document retrievals, and human citation analysis agreed with the automatic evaluations.

Main AI News:

Baidu Inc. of China has introduced a self-reasoning framework designed to improve the reliability and traceability of Retrieval-Augmented Language Models (RALMs). RALMs, which integrate external knowledge to enhance Large Language Models (LLMs), often fall short on both counts. Common challenges include noisy retrievals that lead to inaccurate responses and insufficient citations that make the model’s outputs hard to verify. Traditional remedies, such as adding natural language inference and document summarization models, increase complexity and cost and make the overall system considerably harder to optimize.

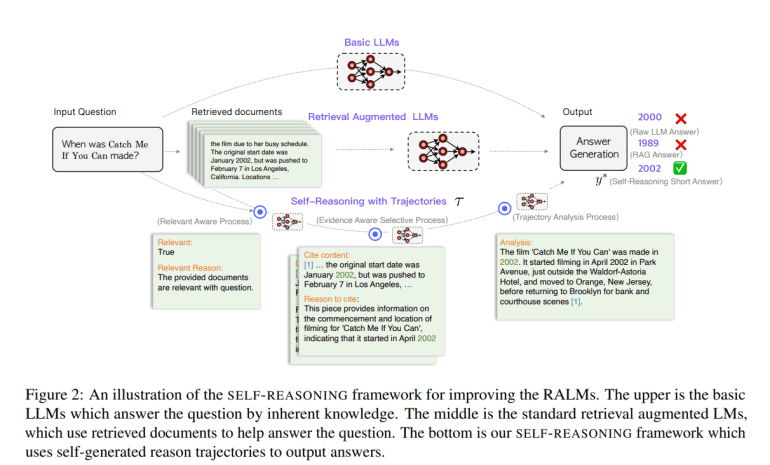

The new framework from Baidu improves response accuracy by generating self-reasoning trajectories through three key processes: a relevance-aware process, an evidence-aware selective process, and a trajectory analysis process. Evaluated on four public datasets, the method outperformed existing models and achieved results on par with GPT-4 using only 2,000 training samples. Because it improves interpretability and traceability without relying on external models, the framework streamlines the pipeline and offers a more efficient solution.

Previous strategies to boost LLMs by integrating external information have included pre-training with retrieved passages, adding citations, and developing end-to-end systems that generate responses without altering model weights. Some techniques dynamically adjust or fine-tune LLMs with retrieval tools, while others aim to improve factual accuracy through various retrieval and editing methods. In contrast, Baidu’s approach identifies key sentences and cites relevant documents within a unified framework, thereby avoiding the need for additional models and enhancing efficiency.

In the self-reasoning setup, the model takes a query and a document corpus, generates a reasoning trajectory, and then produces an answer in which each statement cites the relevant documents. The model is trained to produce the trajectory and the response in a single pass, following three stages: evaluating document relevance, selecting key sentences and citing their sources, and analyzing the reasoning to finalize the answer. The training data is carefully generated and quality-controlled to ensure accuracy.
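
To make the three-stage trajectory more concrete, here is a minimal Python sketch of how one training example might be represented, checked, and serialized into a single target string. It is not Baidu’s implementation: the class names `Evidence` and `Trajectory`, the functions `validate` and `render`, the 0-based document indices, the output text format, and the quality checks are all hypothetical, chosen only to illustrate the relevance, evidence, and analysis stages described above.

```python
from dataclasses import dataclass, field

@dataclass
class Evidence:
    doc_id: int      # hypothetical: 0-based index of the cited document
    sentence: str    # key sentence quoted from that document
    reason: str      # why the sentence supports the answer

@dataclass
class Trajectory:
    query: str
    relevant: bool                # stage 1: relevance-aware judgment
    relevance_reason: str
    evidence: list[Evidence] = field(default_factory=list)  # stage 2: evidence selection
    analysis: str = ""            # stage 3: trajectory analysis
    answer: str = ""

def validate(traj: Trajectory, docs: list[str]) -> list[str]:
    """Hypothetical quality checks; an empty list means the example passes."""
    problems = []
    if traj.relevant and not traj.evidence:
        problems.append("judged relevant but no evidence selected")
    for ev in traj.evidence:
        if not 0 <= ev.doc_id < len(docs):
            problems.append(f"citation [{ev.doc_id}] out of range")
        elif ev.sentence not in docs[ev.doc_id]:
            # The quoted key sentence must appear verbatim in the cited document.
            problems.append(f"quoted sentence not found in document [{ev.doc_id}]")
    if not traj.answer:
        problems.append("missing final answer")
    return problems

def render(traj: Trajectory) -> str:
    """Serialize all three stages plus the answer into one string (single-pass target)."""
    lines = [f"Relevant: {'yes' if traj.relevant else 'no'}. {traj.relevance_reason}"]
    for ev in traj.evidence:
        lines.append(f'[{ev.doc_id}] "{ev.sentence}" ({ev.reason})')
    lines.append(f"Analysis: {traj.analysis}")
    lines.append(f"Answer: {traj.answer}")
    return "\n".join(lines)

# Usage example with made-up documents.
docs = ["Paris is the capital of France. It lies on the Seine.", "Berlin is in Germany."]
traj = Trajectory(
    query="What is the capital of France?",
    relevant=True,
    relevance_reason="Document [0] names the capital of France.",
    evidence=[Evidence(0, "Paris is the capital of France.", "states the capital directly")],
    analysis="Document [0] answers the question directly.",
    answer="Paris",
)
print(validate(traj, docs))
print(render(traj))
```

Putting relevance, evidence, and analysis ahead of the answer in one string mirrors the single-pass idea: the model is trained to justify and cite before it commits to a final response.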

Extensive testing on short-form and long-form QA datasets, as well as a fact verification dataset, demonstrated the framework’s effectiveness. Evaluation metrics included accuracy, exact match (EM) recall, citation recall, and citation precision. The SELF-REASONING framework outperformed many baseline models, especially on long-form QA and fact verification, achieving high citation recall and accuracy with few training samples and reduced resource consumption.
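
The metrics above are not defined in the article. The following sketch shows how EM recall, citation recall, and citation precision are commonly computed for citation-aware long-form QA, following the ALCE-style formulation; whether the paper’s evaluation matches it exactly is an assumption. Real evaluations use a trained natural language inference model and normalize answers (punctuation, articles). Here `toy_entails` is a hypothetical word-overlap stand-in, so its scores are only illustrative.

```python
import re

def _words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def toy_entails(premise: str, hypothesis: str) -> bool:
    """Hypothetical stand-in for an NLI model: every hypothesis word must appear in the premise."""
    return _words(hypothesis) <= _words(premise)

def em_recall(output: str, gold_answers: list[str]) -> float:
    """Share of gold short answers found as case-insensitive substrings of the output."""
    if not gold_answers:
        return 0.0
    out = output.lower()
    return sum(ans.lower() in out for ans in gold_answers) / len(gold_answers)

def citation_scores(statements, docs, entails=toy_entails):
    """statements: list of (statement_text, [cited doc indices]).
    Returns (citation_recall, citation_precision)."""
    supported = 0        # statements supported by the union of their citations
    precise = 0          # citations that are not judged irrelevant
    total_citations = 0
    for text, cites in statements:
        total_citations += len(cites)
        joint = bool(cites) and entails(" ".join(docs[c] for c in cites), text)
        supported += int(joint)
        if joint and len(cites) > 1:
            for i, c in enumerate(cites):
                rest = cites[:i] + cites[i + 1:]
                # Irrelevant: fails to support the statement alone, while the
                # remaining citations still support it without this one.
                irrelevant = (not entails(docs[c], text)
                              and entails(" ".join(docs[d] for d in rest), text))
                precise += 0 if irrelevant else 1
        else:
            # Single citation, or an unsupported statement: credit only if supported.
            precise += int(joint)
    recall = supported / len(statements) if statements else 0.0
    precision = precise / total_citations if total_citations else 0.0
    return recall, precision

docs = ["Paris is the capital of France.", "France is in Europe.", "Berlin is in Germany."]
statements = [("Paris is the capital of France", [0, 2]), ("France is in Europe", [1])]
recall, precision = citation_scores(statements, docs)
print(recall, round(precision, 3))
print(em_recall("The capital is Paris.", ["Paris", "Lyon"]))
```

In this toy example both statements are supported, so citation recall is 1.0, but citation [2] adds nothing to the first statement, so precision drops to 2 of 3 citations. Recall rewards answers that are backed by evidence, while precision penalizes padding answers with unnecessary sources.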

An ablation study showed that each component of the SELF-REASONING framework plays a critical role. Removing any one of the three processes, namely the Relevance-Aware Process (RAP), the Evidence-Aware Selective Process (EAP), or the Trajectory Analysis Process (TAP), significantly reduced performance, underscoring their collective importance. The framework also remained robust when retrieved documents were noisy or shuffled, and a human analysis of its citations confirmed their quality and agreed with the automatic evaluations. Together, these findings show that the framework improves LLM performance on knowledge-intensive tasks.
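
The ablation and robustness checks can be mimicked with a small evaluation harness. This is a hypothetical sketch: the stage abbreviations come from the article, but `ablation_variants`, `perturb_retrievals`, the distractor pool, and the noise count are assumptions for illustration, not the paper’s exact protocol.

```python
import random

# Stage abbreviations from the article: relevance-aware, evidence-aware selective,
# and trajectory analysis processes.
STAGES = ("RAP", "EAP", "TAP")

def ablation_variants() -> dict[str, set[str]]:
    """Full model plus one variant per removed stage (hypothetical config format)."""
    variants = {"full": set(STAGES)}
    for stage in STAGES:
        variants[f"w/o {stage}"] = set(STAGES) - {stage}
    return variants

def perturb_retrievals(docs: list[str], distractors: list[str], n_noise: int,
                       shuffle: bool = True, seed: int = 0) -> list[str]:
    """Return a perturbed copy of a retrieved list: append n_noise irrelevant passages
    drawn from distractors, then optionally shuffle. Seeded for reproducibility."""
    rng = random.Random(seed)
    noisy = list(docs) + rng.sample(distractors, n_noise)
    if shuffle:
        rng.shuffle(noisy)
    return noisy

for name, stages in ablation_variants().items():
    print(name, sorted(stages))

retrieved = ["doc A", "doc B", "doc C"]
pool = ["noise 1", "noise 2", "noise 3", "noise 4"]
print(perturb_retrievals(retrieved, pool, n_noise=2, seed=1))
```

Each variant would be trained and scored under the same noisy and shuffled inputs, so any drop in accuracy or citation quality can be attributed to the missing stage rather than to changes in the retrieved documents.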

Conclusion:

Baidu’s introduction of the self-reasoning framework represents a significant advancement in the field of Retrieval-Augmented Language Models (RALMs). By improving response accuracy and traceability through a streamlined, end-to-end approach, Baidu offers a more efficient and interpretable solution than traditional methods. This innovation could enhance the reliability of AI systems across various applications, potentially setting new standards for model performance and reducing the costs of integrating external models. The framework’s demonstrated effectiveness and cost-efficiency may influence market trends, driving further adoption and development of similar AI technologies.