TL;DR:

- Large language models (LLMs) are gaining attention for their human-like capabilities in various tasks, including code generation.

- Researchers introduced the RRTF framework, leveraging natural language LLM alignment techniques to enhance code LLMs’ performance.

- The PanGu-Coder2 model, developed using RRTF, achieved a 62.20% pass@1 score on the OpenAI HumanEval benchmark.

- With high-quality training data, Code LLMs can outperform larger natural language models on code generation tasks.

- Contributions include the RRTF optimization paradigm, PanGu-Coder2's strong benchmark results, insights into building high-quality training data, and inference optimization methods.

Main AI News:

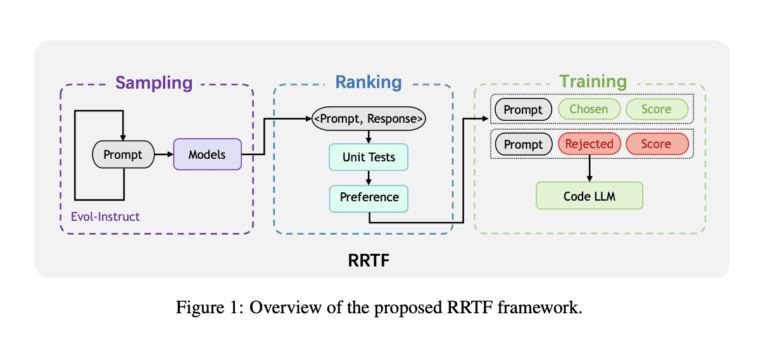

Large Language Models (LLMs) have drawn a great deal of attention in recent months. These models, which can produce human-like responses, are used to answer questions, generate content, translate languages, summarize long passages, and complete code samples. LLMs continue to advance rapidly, and the most capable models now perform well on code generation tasks. In a recent study, researchers from Huawei Cloud Co., Ltd., the Chinese Academy of Sciences, and Peking University introduced a framework called RRTF (Rank Responses to align Test&Teacher Feedback), an efficient and effective method for improving pre-trained large language models on code generation.

RRTF is aimed specifically at Code LLMs. It borrows alignment techniques developed for natural language LLMs and is inspired by Reinforcement Learning from Human Feedback (RLHF), the method used to train models such as InstructGPT and ChatGPT. Unlike standard RLHF, however, RRTF uses the ranking of responses as feedback rather than absolute reward values, which makes training simpler. The framework applies this idea to improve code generation.
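To make ranking feedback concrete, here is a minimal Python sketch of a ranking loss in the style of RRHF (Rank Responses to align Human Feedback), the ranking-based alignment method whose name RRTF closely mirrors. It is an illustration under stated assumptions, not the paper's implementation: the per-response scores (for example, 0 for a response that fails its unit tests, 1 for one that passes, 2 for a teacher model's response), the equal weighting of the two loss terms, and all helper names are hypothetical, and the token log-probabilities would normally come from the model being trained.

```python
from typing import Sequence


def mean_logprob(token_logprobs: Sequence[float]) -> float:
    """Length-normalized log-probability of one response (mean over its tokens)."""
    return sum(token_logprobs) / len(token_logprobs)


def ranking_loss(scores: Sequence[float],
                 token_logprobs: Sequence[Sequence[float]]) -> float:
    """Pairwise hinge loss: whenever response i has a lower score than response j,
    penalize the model by how much more it prefers i than j."""
    p = [mean_logprob(lp) for lp in token_logprobs]
    loss = 0.0
    for i in range(len(scores)):
        for j in range(len(scores)):
            if scores[i] < scores[j]:
                loss += max(0.0, p[i] - p[j])
    return loss


def sft_loss(scores: Sequence[float],
             token_logprobs: Sequence[Sequence[float]]) -> float:
    """Negative log-likelihood of the highest-scored response (fine-tuning term)."""
    best = max(range(len(scores)), key=lambda k: scores[k])
    return -sum(token_logprobs[best])


def total_loss(scores: Sequence[float],
               token_logprobs: Sequence[Sequence[float]]) -> float:
    """Ranking term plus fine-tuning term, weighted equally (an assumption)."""
    return ranking_loss(scores, token_logprobs) + sft_loss(scores, token_logprobs)


# Hypothetical example: a failing student response, a passing student response,
# and a teacher response, with made-up per-token log-probabilities.
scores = [0.0, 1.0, 2.0]
logprobs = [[-0.1, -0.1], [-0.5, -0.5], [-1.0, -1.0, -1.0]]
print(total_loss(scores, logprobs))
```

Only the order of the scores matters here: replacing them with `[0, 10, 20]` leaves the loss unchanged, which is the practical difference from training on absolute reward values.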

The team used RRTF to build PanGu-Coder2, which achieved a 62.20% pass@1 score on the OpenAI HumanEval benchmark, meaning that a single generated solution passed the unit tests for about 62% of the problems. This is a 30% improvement over its predecessor, and according to the researchers, PanGu-Coder2 outperformed all previously released Code LLMs.
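HumanEval results are reported with the pass@k metric defined alongside the benchmark: generate n samples per problem, count the c samples that pass the unit tests, and estimate the probability that at least one of k samples is correct. The sketch below implements that unbiased estimator and averages it over problems; for k = 1 it reduces to c/n. The helper names and example counts are illustrative, and the sampling settings behind the 62.20% figure are not described in this article.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples generated, c of them pass.

    Computes 1 - C(n - c, k) / C(n, k): the probability that a random size-k
    subset of the n samples contains at least one passing sample.
    """
    if n - c < k:
        return 1.0  # too few failing samples to fill a size-k subset
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems; `results` holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 10 samples each.
print(benchmark_pass_at_k([(10, 10), (10, 3), (10, 0)], k=1))
```

Using `math.comb` keeps the sketch short; for very large n, a product form of the same ratio avoids computing huge binomial coefficients.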

To evaluate RRTF, the team ran extensive experiments on three benchmarks: HumanEval, CoderEval, and LeetCode. The results suggest that Code LLMs can outperform larger natural language models on code generation tasks. The study also highlights the importance of high-quality data in improving a model's ability to follow instructions and produce correct code.
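Benchmarks such as HumanEval score a completion by functional correctness: the model's code is run against unit tests, and it counts only if every assertion passes. This pass/fail outcome is the kind of test feedback RRTF's name refers to. The sketch below shows the idea on a hypothetical problem; `passes_tests` is an illustrative helper, not the official evaluation harness, and unlike a real evaluator it uses no sandbox or timeout, so it should never be run on untrusted code.

```python
def passes_tests(program: str, test: str, entry_point: str) -> bool:
    """Run a candidate program followed by its test suite; True only if nothing raises.

    WARNING: exec() runs arbitrary code with no sandbox and no timeout.
    """
    namespace: dict = {}
    try:
        exec(program + "\n" + test + f"\ncheck({entry_point})\n", namespace)
    except Exception:  # syntax errors, failed assertions, runtime errors
        return False
    return True


# Hypothetical HumanEval-style problem: a prompt, a test suite, an entry point.
prompt = "def add(a, b):\n"
test = (
    "def check(candidate):\n"
    "    assert candidate(2, 3) == 5\n"
    "    assert candidate(-1, 1) == 0\n"
)
completions = ["    return a + b\n", "    return a - b\n"]

for completion in completions:
    print(repr(completion), passes_tests(prompt + completion, test, "add"))
```

Real harnesses run each candidate in a separate process with a time limit, so an infinite loop or a malicious completion cannot stall or damage the evaluator.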

The team's main contributions are:

- The RRTF optimization paradigm, a model-agnostic, straightforward, and data-efficient approach to improving code generation.

- The PanGu-Coder2 model, which outperforms its predecessor by 30% and performs strongly on HumanEval, CoderEval, and LeetCode.

- State-of-the-art results in code generation, placing PanGu-Coder2 ahead of previously released Code LLMs.

- Practical insights into building high-quality training data for code generation.

- A description of how the RRTF framework was used to train PanGu-Coder2.

- Optimization methods that speed up PanGu-Coder2's inference for realistic, real-world deployment scenarios.

Together, the PanGu-Coder2 model and the RRTF framework mark a notable step in AI research on code generation, improving both the quality of generated code and the efficiency of producing it. As businesses and industries adopt these advances, their potential applications across many domains are likely to become more apparent, and further progress in the field can be expected.

Conclusion:

The introduction of the PanGu-Coder2 model and the RRTF framework marks a significant advance in the field of large language models. These improvements in code generation point to practical applications across industries and to more efficient real-world deployments. Businesses should monitor these developments closely and explore how code LLMs could help them stay ahead in a competitive landscape.