- Reinforcement learning is reshaping humanoid robotics, bridging the gap between AI and physical interactions.

- Recent studies highlight the potential of reinforcement learning in training robots to perform complex tasks, such as walking and playing soccer.

- Teams from Google DeepMind and other institutions have successfully applied reinforcement learning to real-world robots, yielding significant improvements in speed, agility, and adaptability.

- The use of virtual simulations for training minimizes risks to physical robots and accelerates the development process.

- Transformer neural networks, similar to those powering large language models, are used to control humanoid robots, enabling them to respond to their environment and perform tasks autonomously.

- These advancements mark a tipping point in robotics, unlocking new capabilities and paving the way for AI-driven control systems in diverse applications.

Main AI News:

Reinforcement learning, in which an AI system learns through trial and error, is poised to reshape humanoid robotics. While AI tools like GPT have dominated the digital realm, reinforcement learning promises to bring AI into the physical world, opening doors to applications in factories, space stations, nursing homes, and beyond. Recent studies published in Science Robotics show how reinforcement learning can help make capable humanoid robots a reality.

The current paradigm in controlling bipedal robots relies heavily on model-based predictive control, resulting in sophisticated systems like Boston Dynamics’ Atlas robot. However, these systems demand significant human expertise for programming and struggle to adapt to new environments. In contrast, reinforcement learning offers a more promising approach, allowing AI to learn through trial and error to execute sequences of actions.
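
The trial-and-error idea can be illustrated with a minimal sketch. It uses tabular Q-learning, a textbook reinforcement learning algorithm, on a hypothetical six-cell corridor. Neither the algorithm nor the environment comes from the studies discussed here, and names such as `train_q_table` are illustrative only.

```python
import random


def train_q_table(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor (an assumed environment).

    The agent starts in cell 0 and earns a reward of 1 on reaching the
    last cell. Actions: index 0 steps left, index 1 steps right.
    """
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Trial: explore at random sometimes (and on ties),
            # otherwise exploit the current value estimates.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0

            # Error: move the estimate toward the observed outcome.
            future = 0.0 if next_state == goal else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])

            state = next_state
            if state == goal:
                break
    return q


def greedy_policy(q):
    """Best-known action for every non-goal cell."""
    return ["left" if left > right else "right" for left, right in q[:-1]]
```

After training, `greedy_policy(train_q_table())` should pick "right" in every non-goal cell: nobody programmed that rule, it emerged from repeated attempts and reward feedback. Real robot controllers replace the table with a neural network and the corridor with a physics simulation.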

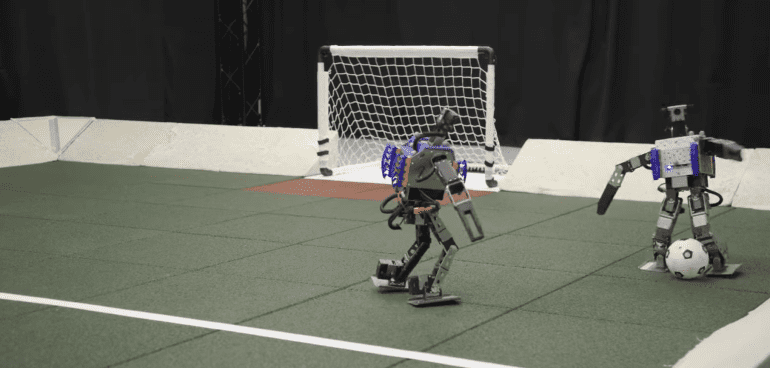

A team led by Tuomas Haarnoja from Google DeepMind set out to explore the capabilities of reinforcement learning in real-world robots. They chose to work with OP3, a 20-inch-tall toy robot, aiming not only to teach it to walk but also to play one-on-one soccer matches. Because soccer demands agility and versatility, the team saw it as an ideal environment for studying general reinforcement learning problems, including planning, exploration, cooperation, and competition.

The compact size of the robots enabled rapid iteration, a crucial factor in development. By training the AI on virtual robots before deploying it on their real counterparts, the team reduced the risk of damaging physical robots during the learning process. The training involved two stages: first, teaching the AI two separate skills, getting the robot up off the ground and scoring goals, and then training it to imitate these behaviors and compete against opponents.
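
One common way to make an agent imitate existing behaviors is policy distillation, in which a single student policy is fitted to the action probabilities of separate teacher policies. The sketch below is a toy illustration of that idea and does not claim to reproduce the team's method. The situations, actions, and probabilities in `TEACHERS` are invented, the student is a table of softmax logits rather than a neural network, and the competitive part of the second stage is not modeled.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical teachers: probabilities over (stand_up, walk, kick).
TEACHERS = {
    "fallen": [0.90, 0.05, 0.05],    # stands in for a get-up skill
    "standing": [0.05, 0.45, 0.50],  # stands in for a goal-scoring skill
}


def distill(teachers, steps=2000, lr=0.5):
    """Fit one student policy to all teachers by gradient descent.

    For a softmax student, the gradient of the cross-entropy between
    teacher and student with respect to the logits is
    (student_probs - teacher_probs).
    """
    student = {name: [0.0] * len(probs) for name, probs in teachers.items()}
    for _ in range(steps):
        for situation, target in teachers.items():
            probs = softmax(student[situation])
            student[situation] = [
                logit - lr * (p - t)
                for logit, p, t in zip(student[situation], probs, target)
            ]
    return {name: softmax(logits) for name, logits in student.items()}
```

Calling `distill(TEACHERS)` returns one policy whose action probabilities closely match each teacher in the situation that teacher covers, which is the sense in which a single agent can inherit several separately learned skills.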

Results were promising, with real robots equipped with reinforcement learning software exhibiting significant improvements in speed, agility, and recovery compared to those using traditional scripted controllers. Moreover, the AI demonstrated the ability to learn complex motor skills and strategic gameplay, showcasing the potential of reinforcement learning in enhancing robotic capabilities.

In a parallel endeavor, Ilija Radosavovic and his team at the University of California, Berkeley, tackled the challenge of training a larger humanoid robot: Digit, a five-foot-tall robot from Agility Robotics. Employing a transformer neural network, akin to those powering large language models like ChatGPT, the team used observation-action pairs to train the robot to perform tasks based on its environment.
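
How a transformer can consume observation-action pairs is sketched below as a single causal self-attention layer in plain Python. This is an assumption-laden illustration, not the team's controller: the weights are random and untrained, there is one attention head and no feed-forward layers, and the function and parameter names (`causal_attention_policy`, `init_params`, `wq`, `wk`, `wv`, `wo`) are hypothetical.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def init_params(obs_dim, act_dim, hidden_dim, seed=0):
    """Random, untrained weights; a real controller would learn these."""
    rng = random.Random(seed)
    token_dim = obs_dim + act_dim

    def rand(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                for _ in range(rows)]

    return {
        "wq": rand(hidden_dim, token_dim),
        "wk": rand(hidden_dim, token_dim),
        "wv": rand(hidden_dim, token_dim),
        "wo": rand(act_dim, hidden_dim),
    }


def causal_attention_policy(history, params):
    """Predict a next action at every time step.

    history: list of (observation, action) pairs, each a list of floats.
    Step t attends only to steps 0..t, so predictions never depend on
    the future.
    """
    tokens = [obs + act for obs, act in history]  # one token per pair
    queries = [matvec(params["wq"], tok) for tok in tokens]
    keys = [matvec(params["wk"], tok) for tok in tokens]
    values = [matvec(params["wv"], tok) for tok in tokens]
    scale = math.sqrt(len(queries[0]))

    predictions = []
    for t, query in enumerate(queries):
        # Scaled dot-product scores against the current and past steps.
        scores = [sum(q * k for q, k in zip(query, keys[s])) / scale
                  for s in range(t + 1)]
        top = max(scores)
        weights = [math.exp(score - top) for score in scores]
        norm = sum(weights)
        # Attention-weighted average of the value vectors.
        context = [sum(w * values[s][d] for s, w in enumerate(weights)) / norm
                   for d in range(len(values[0]))]
        predictions.append(matvec(params["wo"], context))
    return predictions
```

The causal restriction is the design choice that matters for control: at run time the robot only has its past, so each predicted action may depend on earlier observations and actions but never on later ones.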

The controller, after rigorous training in a digital simulation, showcased remarkable resilience and adaptability in real-world scenarios, outperforming traditional controllers in various tasks. These breakthroughs mark a tipping point in robotics, signaling the shift towards AI-driven control systems and the potential for unlocking even greater capabilities in the future.

As the field advances, combining the strengths of the two approaches, the robustness of the Berkeley controller and the dexterity of Google DeepMind's soccer-playing robots, will likely shape the evolution of AI-powered robotics. Merging these methods could help address harder challenges and bring humanoid robots into more applications, including competitive sports such as soccer, which has long served as a benchmark for robotics and AI.

Conclusion:

The integration of reinforcement learning into humanoid robotics signifies a transformative shift in the market, with implications for industries ranging from manufacturing to healthcare. As robots become more agile, adaptable, and autonomous, they offer unprecedented opportunities for efficiency, productivity, and innovation. Businesses that embrace this technology stand to gain a competitive edge, driving growth and shaping the future of automation.