- Researchers in China developed a TPU using carbon nanotube transistors.

- This TPU offers significant energy efficiency, reducing power consumption in AI processing.

- The architecture optimizes data flow, minimizing SRAM operations.

- In a demonstration, it achieved 88% accuracy in image recognition while consuming just 295 μW.

- Potential for further advancements in performance, scalability, and integration.

Main AI News:

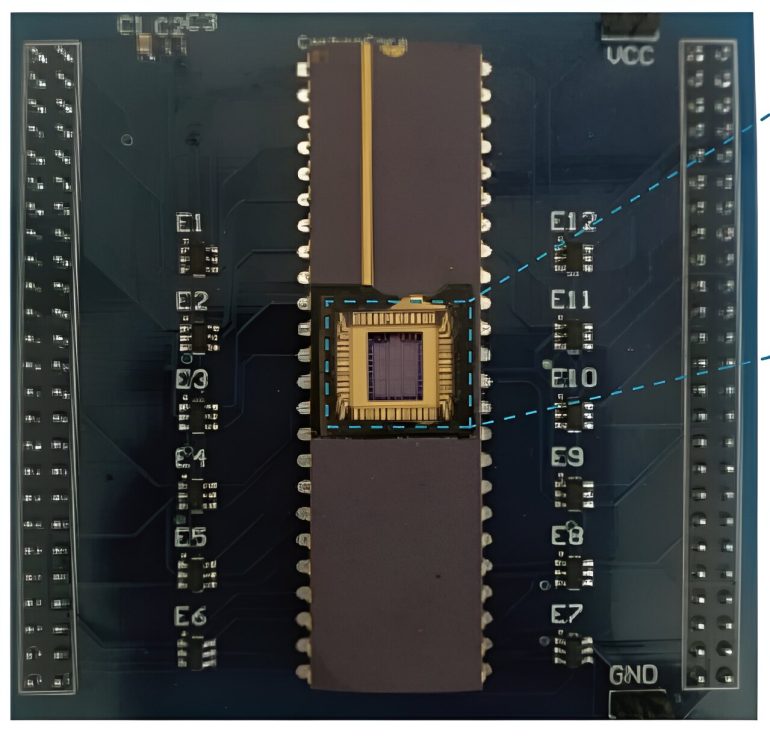

Researchers from Peking University and other Chinese institutions have introduced a groundbreaking tensor processing unit (TPU) made from carbon nanotubes, marking a significant advancement in energy-efficient AI hardware. Detailed in Nature Electronics, this TPU is designed to address the growing computational demands of AI, which traditional silicon-based semiconductors struggle to meet.

The team developed a novel systolic array architecture built from carbon nanotube field-effect transistors (FETs), in which carbon nanotubes take the place of a conventional semiconductor such as silicon as the channel material. This design optimizes data flow: operands are passed directly from one processing element to the next, which reduces energy consumption by minimizing the read and write operations of static random-access memory (SRAM).
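To make the data-flow idea concrete, here is a minimal sketch of a generic output-stationary systolic array computing a matrix product. It is not the researchers' design: the function name `systolic_matmul`, the grid layout, and the `edge_loads` counter are all assumptions made for illustration. The point it shows is that each operand is fetched from memory once at the edge of the array and then handed from neighbor to neighbor, instead of being re-read for every multiply-accumulate.

```python
def systolic_matmul(A, B):
    """Simulate a generic output-stationary systolic array computing A @ B.

    Illustrative sketch only (not the design from the paper).
    Returns (C, edge_loads), where edge_loads counts operand fetches
    from memory at the left and top edges of the array.
    """
    n, k, m = len(A), len(B), len(B[0])
    assert all(len(row) == k for row in A), "inner dimensions must match"

    acc = [[0] * m for _ in range(n)]    # one accumulator per processing element (PE)
    a_reg = [[0] * m for _ in range(n)]  # A values travelling left to right
    b_reg = [[0] * m for _ in range(n)]  # B values travelling top to bottom
    edge_loads = 0

    # The last PE (n-1, m-1) sees its final operand pair at cycle n + m + k - 3.
    for t in range(n + m + k - 2):
        # Visit PEs bottom-right first so each PE reads its neighbor's
        # value from the previous cycle, as a clocked register would.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:
                    s = t - i  # row i of A is delayed by i cycles (input skew)
                    in_range = 0 <= s < k
                    a_in = A[i][s] if in_range else 0
                    edge_loads += in_range
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j  # column j of B is delayed by j cycles
                    in_range = 0 <= s < k
                    b_in = B[s][j] if in_range else 0
                    edge_loads += in_range
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
                acc[i][j] += a_in * b_in  # multiply-accumulate inside the PE
    return acc, edge_loads


if __name__ == "__main__":
    C, loads = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(C, loads)
```

For the 2×2 example, the array performs 8 edge fetches, whereas a loop that reads both operands from memory for every multiply-accumulate would perform 2 · 2 · 2 · 2 = 16 reads. The gap widens as the matrices grow, which is the general reason systolic arrays cut memory traffic.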

The TPU’s architecture is built to accelerate the convolution operations at the core of neural networks. The researchers demonstrated its capabilities by constructing a five-layer convolutional neural network that achieved an 88% accuracy rate in image recognition while consuming just 295 μW of power, setting a new benchmark for energy efficiency in convolutional acceleration technologies. Initial simulations suggest that this TPU, based on 180 nm technology, can operate at 850 MHz with an energy efficiency surpassing 1 TOPS/W, outperforming other devices at the same node.
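For readers unfamiliar with the TOPS/W unit, the short sketch below converts it into energy per operation. The helper names are hypothetical. One caveat: the 295 μW figure comes from the demonstration and the 1 TOPS/W figure from simulation, so combining them in `ops_per_second` is a back-of-the-envelope illustration of the units, not a result reported by the researchers.

```python
def energy_per_op_joules(tops_per_watt):
    """Energy per operation implied by an efficiency in TOPS/W.

    1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule.
    """
    return 1.0 / (tops_per_watt * 1e12)


def ops_per_second(power_watts, tops_per_watt):
    """Throughput a device would reach at a given power and efficiency."""
    return power_watts * tops_per_watt * 1e12


if __name__ == "__main__":
    # 1 TOPS/W corresponds to 1 picojoule per operation.
    print(energy_per_op_joules(1.0))
    # Illustrative only: mixes the measured power with the simulated efficiency.
    print(ops_per_second(295e-6, 1.0))
```

At 1 TOPS/W each operation costs one picojoule, so a hypothetical chip drawing 295 μW at that efficiency would sustain roughly 2.95 × 10^8 operations per second.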

Looking ahead, the team is focused on enhancing the TPU’s performance, energy efficiency, and scalability. Potential advancements include using aligned semiconducting carbon nanotubes, shrinking the transistors, and adopting three-dimensional system integration. These developments position the carbon nanotube-based TPU as a key player in the next generation of AI hardware, with the potential to drastically reduce power consumption while boosting processing capabilities.

Conclusion:

The introduction of a carbon nanotube-based TPU represents a pivotal development in AI hardware, promising substantial energy savings and enhanced processing capabilities. As AI applications grow increasingly data-intensive, this innovation could disrupt the market by setting new standards for energy efficiency and performance. Companies investing in AI and semiconductor technologies should monitor this advancement closely, as it could lead to a shift towards more sustainable, high-performing AI systems and give early adopters a competitive edge. The breakthrough also signals a potential move away from traditional silicon-based technologies, opening the door to further innovation in low-dimensional electronics and 3D integration.