- Cerebras introduces the Wafer Scale Engine 3 (WSE-3), which doubles performance at the same power consumption.

- WSE-3 boasts 4 trillion transistors, roughly a 50% increase over the previous generation, enabled by TSMC’s 5-nanometer process.

- Deployment in a Dallas datacenter aims to create a supercomputer capable of 8 exaflops.

- Partnership with Qualcomm targets a 10-fold improvement in AI inference price-performance.

- Thanks to shrinking process nodes, the transistor count has more than tripled since the first megachip.

- CS-3 computer can train neural network models of up to 24 trillion parameters, which Cerebras says is 10 times larger than today’s leading models such as GPT-4 and Gemini.

Main AI News:

Cerebras, headquartered in Sunnyvale, California, has unveiled the latest generation of its waferscale AI chip, which it says doubles performance over the previous generation at the same power consumption. The new Wafer Scale Engine 3 (WSE-3) contains 4 trillion transistors, roughly 50 percent more than its predecessor, thanks to advances in chipmaking technology. The company plans to build the WSE-3 into a new line of AI computing systems. The first installations are already underway at a datacenter in Dallas, where they will form a supercomputer capable of 8 exaflops (8 billion billion floating-point operations per second). Cerebras has also signed an agreement with Qualcomm aimed at improving the price-performance of AI inference by a factor of 10.
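
A quick sanity check of the 8-exaflop figure can be sketched in Python. It assumes each CS-3 system peaks at 125 petaflops of AI compute, which is Cerebras’ published spec but is not stated in this article; under that assumption the arithmetic implies a cluster of 64 systems. The constant names are illustrative.

```python
# Sanity check of the 8-exaflop Dallas supercomputer figure.
# ASSUMPTION: each CS-3 peaks at 125 petaflops of AI compute (Cerebras'
# published spec); the article itself only gives the 8-exaflop total.
PETA = 10**15
EXA = 10**18
BILLION = 10**9

per_system_flops = 125 * PETA   # assumed peak per CS-3 system
target_flops = 8 * EXA          # 8 exaflops, as reported

# Integer division is exact here: 8000 petaflops / 125 petaflops.
systems_needed = target_flops // per_system_flops

print(systems_needed)                          # 64
print(target_flops == 8 * BILLION * BILLION)   # True: "8 billion billion" flops
```

Note that these are peak figures for AI workloads; sustained throughput on real models will be lower.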

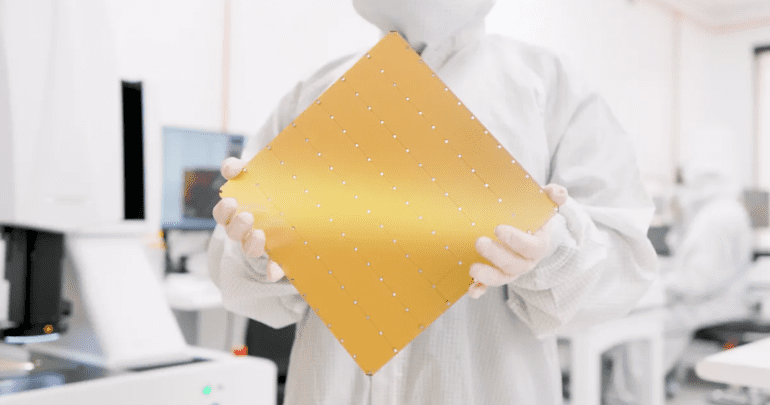

With the WSE-3, Cerebras retains its position as the maker of the world’s largest single chip. Measuring 21.5 centimeters on each side and using nearly an entire 300-millimeter silicon wafer, the square chip far exceeds the limits of conventional chipmaking, which typically caps a single silicon die at around 800 square millimeters. Some chipmakers have turned to 3D integration and other advanced packaging technologies to combine several dies, but even their transistor counts remain well under a trillion, far from the scale Cerebras has reached.
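
To put the chip’s size in perspective, the following Python sketch takes the article’s figures at face value (a 21.5 cm square versus a roughly 800 mm² conventional die) and computes the area ratio. It is back-of-the-envelope arithmetic, not a precise die measurement.

```python
# Compare the WSE-3's footprint with a conventional single die,
# using the figures quoted in the article.
side_mm = 215                # 21.5 cm per side
conventional_die_mm2 = 800   # typical upper bound for a single die

wse3_area_mm2 = side_mm * side_mm            # the chip is square
ratio = wse3_area_mm2 / conventional_die_mm2

print(wse3_area_mm2)     # 46225
print(round(ratio, 1))   # 57.8
```

In other words, one WSE-3 occupies roughly the silicon area of about 58 of the largest conventional dies.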

The evolution of Cerebras’ WSE chips roughly tracks Moore’s Law. The first WSE, which debuted in 2019, was manufactured using TSMC’s 16-nanometer process; later generations moved to smaller process nodes, and the WSE-3 uses TSMC’s 5-nanometer technology. Thanks to these shrinking process nodes, the transistor count has more than tripled since the first megachip, a substantial leap in the silicon available for computation.
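
The generational trend can be tabulated with a short Python sketch. The WSE-3 count comes from this article; the WSE-1 (1.2 trillion) and WSE-2 (2.6 trillion, TSMC 7 nm, 2021) figures are Cerebras’ earlier published specs and are added here as assumptions for illustration.

```python
# Transistor counts per WSE generation, in trillions.
# ASSUMPTION: WSE-1 and WSE-2 figures are Cerebras' earlier published specs,
# not taken from this article; the WSE-3 figure is from the article.
GENERATIONS = [
    # (name, year, TSMC process node, transistors in trillions)
    ("WSE-1", 2019, "16 nm", 1.2),
    ("WSE-2", 2021, "7 nm", 2.6),
    ("WSE-3", 2024, "5 nm", 4.0),
]


def growth_table(gens):
    """Map each generation to (ratio vs previous, ratio vs first generation)."""
    first = gens[0][3]
    table = {}
    prev = None
    for name, _year, _node, trillions in gens:
        vs_prev = trillions / prev if prev is not None else 1.0
        table[name] = (vs_prev, trillions / first)
        prev = trillions
    return table


table = growth_table(GENERATIONS)
for name, year, node, trillions in GENERATIONS:
    vs_prev, vs_first = table[name]
    print(f"{name} ({year}, {node}): {trillions} T, "
          f"{vs_prev:.2f}x previous, {vs_first:.2f}x WSE-1")
```

The last row prints 1.54x previous and 3.33x WSE-1, matching the roughly 50 percent step over WSE-2 and the more-than-threefold gain over the first chip.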

While the number of AI cores, the on-chip memory capacity, and the internal bandwidth have largely plateaued in the latest generation, performance, measured in floating-point operations per second (flops), has improved faster than any other metric. The CS-3 computer, powered by the new chip, is designed to train language models up to 10 times larger than OpenAI’s GPT-4 and Google’s Gemini. Cerebras says the CS-3 can train neural network models with up to 24 trillion parameters without the complex software workarounds other systems need to split such models across many processors, making large-scale training simpler and more accessible.
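
Why do 24-trillion-parameter models need workarounds on conventional hardware? A rough Python estimate helps. It assumes mixed-precision training with the Adam optimizer at about 16 bytes of state per parameter and accelerators with 80 GB of memory each; both are common rules of thumb, not Cerebras figures, and activations are ignored.

```python
# Rough memory needed to train a 24-trillion-parameter model.
# ASSUMPTIONS (rules of thumb, not Cerebras figures):
#   * mixed-precision training with Adam: fp16 weights (2 B) + fp16 gradients (2 B)
#     + fp32 master weights (4 B) + fp32 momentum (4 B) + fp32 variance (4 B)
#   * 80 GB of memory per conventional accelerator
#   * activations and framework overhead are ignored
PARAMS = 24 * 10**12
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4       # 16 bytes of training state
ACCEL_MEMORY_BYTES = 80 * 10**9
TB = 10**12

weights_tb = PARAMS * 2 / TB              # fp16 weights alone
state_bytes = PARAMS * BYTES_PER_PARAM
state_tb = state_bytes / TB

# Ceiling division: minimum accelerators just to hold the training state.
accelerators_needed = -(-state_bytes // ACCEL_MEMORY_BYTES)

print(weights_tb)            # 48.0
print(state_tb)              # 384.0
print(accelerators_needed)   # 4800
```

Under these assumptions the training state alone would have to be partitioned across thousands of accelerators, which is the kind of model splitting the CS-3 is pitched as avoiding.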

To address growing demand for cost-effective AI inference, Cerebras has partnered with Qualcomm on techniques intended to cut inference costs substantially. By combining neural network techniques such as weight data compression and sparsity with Qualcomm’s Cloud AI 100 Ultra inference chip, Cerebras aims to make AI inference cheaper and more widely accessible across a broad range of applications and industries.
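
Sparsity and weight compression are general techniques. The Python sketch below illustrates generic versions of both, magnitude pruning and symmetric int8 quantization, on a toy weight vector. It is not the method Cerebras and Qualcomm actually use, and all function names are hypothetical.

```python
# Illustrative only: generic magnitude pruning and int8 quantization.
# This is NOT Cerebras' or Qualcomm's actual method; it only shows why
# sparsity and compressed weights reduce inference cost.

def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)              # number of weights to drop
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / 127
    return [round(w / scale) for w in weights], scale


def sparse_dot(weights, x):
    """Dot product that skips zero weights; returns (result, multiplies done)."""
    total, macs = 0.0, 0
    for w, xi in zip(weights, x):
        if w != 0.0:
            total += w * xi
            macs += 1
    return total, macs


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.03]
x = [1.0] * len(weights)

pruned = prune_by_magnitude(weights, 0.5)   # keep the 4 largest-magnitude weights
result, macs = sparse_dot(pruned, x)
codes, scale = quantize_int8(pruned)        # 1 byte per weight instead of 4

print(pruned)   # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.3, 0.0]
print(macs)     # 4
print(codes)    # [127, 0, 56, 0, -99, 0, 42, 0]
```

Half the weights are pruned, so the dot product performs half the multiplies, and storing int8 codes instead of 32-bit floats cuts weight storage by a factor of four. Production systems apply these ideas in hardware-aware ways that this toy does not capture.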

Conclusion:

Cerebras’ latest waferscale AI chip marks a significant step forward for the industry. The doubling of performance at the same power, together with the Qualcomm partnership to make AI inference more affordable, underscores the company’s focus on innovation and accessibility in AI computing. With the ability to train much larger language models efficiently, Cerebras is positioned to influence AI research and applications in sectors such as healthcare, finance, and technology.