TL;DR:

- MIT CSAIL researchers address the challenge of image recognition accuracy in AI.

- They introduce the “minimum viewing time” (MVT) metric to quantify image recognition difficulty.

- Existing datasets favor easy images, leading to skewed model performance metrics.

- Larger AI models perform better on simple images but struggle with complexity.

- The study paves the way for more realistic benchmarks and improved AI performance.

- It has implications for healthcare, professional evaluations, and real-world AI applications.

Main AI News:

In today’s ever-evolving landscape of artificial intelligence (AI), image recognition accuracy stands as an unseen challenge, perplexing even the most advanced AI systems. As we browse through the photos on our devices, we encounter images that leave us momentarily baffled. Take, for instance, the image of a seemingly fuzzy object on a couch—could it be a pillow or a coat? After a brief pause, it dawns on us that it’s our friend’s beloved cat, Mocha. While some images are instantly deciphered, why do certain photos present such difficulty?

MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers have made a startling discovery. Despite the paramount importance of visual data comprehension across vital sectors such as healthcare, transportation, and household devices, the challenge of determining an image’s recognition difficulty for humans has largely been overlooked. The driving force behind the advancement of AI, deep learning-based models, has primarily relied on datasets. However, we possess limited knowledge about how data influences progress in large-scale deep learning beyond the simple notion that “more data is better.”

In real-world applications demanding a profound understanding of visual data, humans consistently outperform object recognition models, even though these models excel on existing datasets, including those explicitly tailored to challenge AI systems with unbiased images and distribution variations. The persistence of this problem can be attributed, in part, to the absence of a standardized measure for the difficulty of images or datasets. Without accounting for image difficulty in evaluation, it becomes challenging to objectively gauge progress toward human-level performance, cover the entire spectrum of human capabilities, and elevate the complexity level of datasets.

To bridge this knowledge gap, David Mayo, an MIT PhD student in electrical engineering and computer science and a CSAIL affiliate, embarked on a comprehensive exploration of image datasets. Mayo sought to understand why certain images posed greater challenges for both humans and machines when it came to recognition. He elaborates, “Some images inherently demand more time for recognition, and it’s crucial to unravel the neural processes during this period and how they relate to machine learning models. Perhaps intricate neural circuits or unique mechanisms exist that are absent in our current models, only becoming apparent when confronted with demanding visual stimuli. This exploration is pivotal for grasping and enhancing machine vision models.”

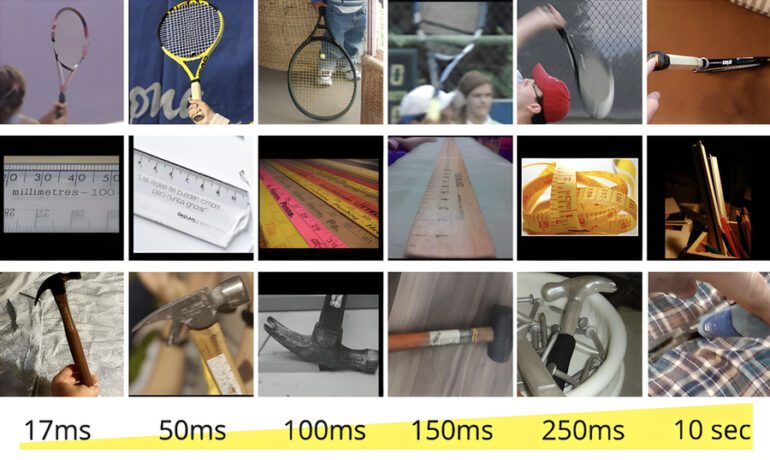

This endeavor led to the development of a groundbreaking metric known as the “minimum viewing time” (MVT). MVT quantifies an image’s recognition difficulty by measuring how long an individual needs to view it before correctly identifying its contents. Using a subset of ImageNet, a renowned machine learning dataset, and ObjectNet, designed to test object recognition robustness, the research team exposed participants to images for varying durations, ranging from a mere 17 milliseconds to a substantial 10 seconds. They then tasked the participants with selecting the correct object from a pool of 50 options. After conducting over 200,000 image presentation trials, the team uncovered a startling revelation. Existing test sets, including ObjectNet, appeared to favor easier, shorter MVT images, with the majority of benchmark performance drawn from images that posed minimal challenges to humans.

The project also unveiled intriguing trends in model performance, particularly in relation to scaling. Larger models exhibited significant improvements when confronted with simpler images but made limited progress with more intricate ones. Notably, the CLIP models, which seamlessly integrate language and vision, displayed a more human-like recognition pattern.

David Mayo emphasizes, “Traditionally, object recognition datasets have skewed towards simpler images, a practice that artificially inflates model performance metrics, failing to reflect a model’s true robustness or its capability to handle complex visual tasks. Our research exposes the fact that challenging images present a more profound challenge, leading to a distribution shift often overlooked in standard assessments.” He adds, “We have released image sets categorized by difficulty, along with tools for automatic MVT computation. This empowers the inclusion of MVT in existing benchmarks and its application across various domains. These include assessing test set difficulty before implementing real-world systems, discovering neural correlations with image difficulty, and advancing object recognition techniques to bridge the gap between benchmark and real-world performance.“

Jesse Cummings, an MIT graduate student in electrical engineering and computer science and co-first author with Mayo, shares his insight, stating, “One of my most significant takeaways is that we now possess an additional dimension to evaluate models. We aim for models capable of recognizing any image, especially those challenging for humans. Our results underscore that current state-of-the-art models fall short of this ideal, and our existing evaluation methods are ill-equipped to determine when they do because standard datasets predominantly consist of straightforward images.”

The journey from ObjectNet to MVT signifies a crucial evolution in the field of machine learning. By focusing on how models perform in contrasting their responses to the easiest and most challenging images, this novel approach departs from traditional methods that emphasize absolute performance. It delves into the intricacies of image difficulty, offering insights into how it aligns with human visual processing. Although observable trends exist, a comprehensive semantic explanation of image difficulty remains an enigma, as David Mayo points out.

In domains such as healthcare, the relevance of comprehending visual complexity takes center stage. The ability of AI models to decipher medical images, such as X-rays, hinges on the diversity and distribution of image difficulty. The researchers advocate for meticulous analysis of difficulty distribution tailored to professionals, ensuring AI systems are evaluated against expert standards rather than layperson interpretations.

Mayo and Cummings are currently exploring the neurological underpinnings of visual recognition. They are investigating whether the brain exhibits distinct activity patterns when processing easy versus challenging images. This study aims to unravel whether complex images engage additional brain regions not typically associated with visual processing, shedding light on how our brains decode the visual world with precision and efficiency.

As we peer into the future, the researchers are not solely focused on enhancing AI’s predictive capabilities concerning image difficulty. They are also striving to identify correlations with viewing-time difficulty to create images of varying complexity—either more challenging or easier to recognize.

While this study represents a significant stride forward, the researchers acknowledge its limitations, particularly regarding the separation of object recognition from visual search tasks. The current methodology concentrates on object recognition, omitting the complexities introduced by cluttered images.

David Mayo concludes, “This comprehensive approach addresses the longstanding challenge of objectively assessing progress toward human-level performance in object recognition. It opens new avenues for understanding and advancing the field. With the potential to adapt the Minimum Viewing Time difficulty metric for various visual tasks, this work paves the way for more robust, human-like performance in object recognition, ensuring that models are truly tested and prepared for the intricacies of real-world visual comprehension.”

Alan L. Yuille, Bloomberg Distinguished Professor of Cognitive Science and Computer Science at Johns Hopkins University, offers his perspective, stating, “This is a fascinating study of how human perception can be used to identify weaknesses in the ways AI vision models are typically benchmarked, which overestimate AI performance by concentrating on easy images. This will help develop more realistic benchmarks leading not only to improvements to AI but also make fairer comparisons between AI and human perception.”

Simon Kornblith, PhD ’17, a technical staff member at Anthropic, adds, “It’s widely claimed that computer vision systems now outperform humans, and on some benchmark datasets, that’s true. However, a lot of the difficulty in those benchmarks comes from the obscurity of what’s in the images; the average person just doesn’t know enough to classify different breeds of dogs. This work instead focuses on images that people can only get right if given enough time. These images are generally much harder for computer vision systems, but the best systems are only a bit worse than humans.”

This pioneering research was authored by David Mayo, Jesse Cummings, and Xinyu Lin MEng ’22, in collaboration with CSAIL Research Scientist Andrei Barbu, CSAIL Principal Research Scientist Boris Katz, and MIT-IBM Watson AI Lab Principal Researcher Dan Gutfreund. These researchers are affiliated with the MIT Center for Brains, Minds, and Machines.

The team will present their groundbreaking work at the prestigious 2023 Conference on Neural Information Processing Systems (NeurIPS), marking a significant milestone in the field of AI image recognition.

Conclusion:

This research exposes the limitations of current AI image recognition benchmarks and introduces a promising metric, MVT, to assess image difficulty. The findings highlight the need for more realistic evaluation standards in AI, with potential implications for industries like healthcare and broader real-world AI applications. AI developers and stakeholders should consider these insights when benchmarking and implementing image recognition systems.