TL;DR:

- Large Language Models (LLMs) have transformed industries by automating natural language processing (NLP) tasks.

- LLMs face limitations in fields like chemistry due to their focus on predicting words.

- ChemCrow is an LLM-powered chemistry engine that combines LLMs with expert-designed tools.

- ChemCrow simplifies chemical tasks in drug and materials design, synthesis, and more.

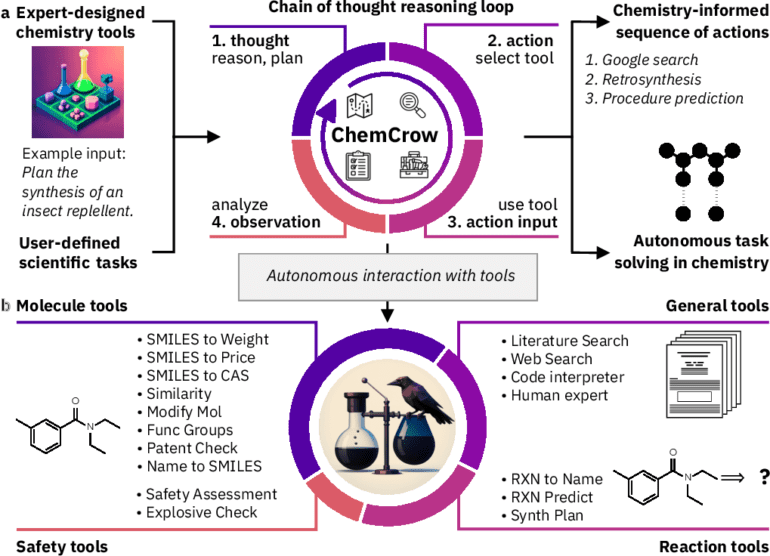

- The model follows a pattern of Thought, Action, Action Input, and Observation.

- ChemCrow evolves from an information source to a thinking engine, improving task completion.

- Thirteen different tools are integrated, with room for expansion.

- ChemCrow offers a user-friendly interface for both professional chemists and non-experts.

- The evaluation shows that ChemCrow outperforms GPT-4 in successful task completion.

Main AI News:

The revolutionary progress in Natural Language Processing (NLP) automation catalyzed by Large Language Models (LLMs) has triggered a paradigm shift across multiple industries. These models have been successfully applied to various NLP applications, showcasing remarkable few-shot and zero-shot results. Particularly noteworthy are the recent advancements leveraging the Transformer architecture, initially developed for neural machine translation.

However, it is crucial to recognize the limitations inherent in LLMs. They struggle with tasks such as elementary arithmetic and chemical calculations. These constraints stem from the fundamental design of these models, which primarily focus on predicting upcoming words. To overcome these barriers, a viable approach involves augmenting large language models with supplementary third-party software.

Artificial intelligence (AI) systems, meticulously crafted by experts to address specific problems, have made significant headway in the field of chemistry. These systems have made substantial contributions to reaction prediction, retrosynthesis planning, molecular property prediction, materials design, and, most recently, Bayesian Optimization. Notably, code-generating LLMs have demonstrated a certain level of comprehension of chemistry due to the nature of their training. However, the complex and sometimes artisanal aspects of chemistry, coupled with the limited scope and applicability of computational tools, often pose challenges, even within their specified domains. Corporate mandates favoring integration and internal use have facilitated the integration of such tools, but mostly within closed environments like RXN for Chemistry and AIZynthFinder.

Addressing these concerns, researchers from the Laboratory of Artificial Chemical Intelligence (LIAC), the National Centre of Competence in Research (NCCR) Catalysis, and the University of Rochester introduce ChemCrow. This LLM-powered chemistry engine draws inspiration from successful applications in diverse fields and aims to streamline the reasoning process for a wide range of typical chemical tasks in drug and materials design, as well as synthesis. By equipping an LLM (GPT-4 in the researchers' trials) with task-specific and format-specific prompts, ChemCrow harnesses the power of expert-designed chemistry-specific tools. The LLM receives a curated list of tools along with concise explanations of their purpose, data input requirements, and expected output.
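To make the idea of a "curated list of tools with concise explanations" more concrete, here is a minimal Python sketch of how such a list could be rendered into a prompt. It is not ChemCrow's actual prompt or code: the `ToolSpec` class, the `build_tool_prompt` function, and the two example tools are hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical description of one tool, as it might be shown to the LLM."""
    name: str
    purpose: str
    input_format: str
    output_format: str


def build_tool_prompt(tools):
    """Render the tool list plus the required response format as one prompt string."""
    lines = ["You can use the following tools:"]
    for tool in tools:
        lines.append(
            f"- {tool.name}: {tool.purpose} "
            f"Input: {tool.input_format}. Output: {tool.output_format}."
        )
    lines.append("")
    lines.append("Answer using this format:")
    lines.append("Thought: reason about the current state of the task")
    lines.append("Action: the name of one tool from the list above")
    lines.append("Action Input: the input to pass to that tool")
    lines.append("Observation: the result returned by the tool")
    return "\n".join(lines)


# Illustrative entries only; the names and wording are assumptions of this sketch.
EXAMPLE_TOOLS = [
    ToolSpec(
        "MolecularWeight",
        "Computes the molecular weight of a molecule.",
        "a SMILES string",
        "weight in g/mol",
    ),
    ToolSpec(
        "LiteratureSearch",
        "Looks up an answer in the scientific literature.",
        "a natural language question",
        "a short text answer",
    ),
]

prompt = build_tool_prompt(EXAMPLE_TOOLS)
print(prompt)
```

Keeping purpose, input, and output as separate fields mirrors the three pieces of information the article says the LLM receives for each tool.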

To ensure effective operation, the model follows a pattern of Thought, Action, Action Input, and Observation. In the Thought step, the LLM considers the current task’s state and its relationship to the ultimate objective, then devises a plan of action accordingly. Next, the LLM requests an action and the corresponding input for that action, based on the reasoning it has just performed in the Thought step. Text generation then pauses while the program attempts to run the requested tool on the provided input. The result, prefixed with the term “Observation,” is relayed back to the LLM, which then returns to the “Thought” step. In line with this preprint, another study (reference 46 in the preprint) outlines a similar strategy for endowing an LLM with chemistry-specific capabilities that would otherwise lie beyond its purview.
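The Thought, Action, Action Input, Observation cycle can be sketched as a short loop. The Python below is an illustrative toy, not ChemCrow's implementation: `scripted_llm` is a stand-in that replies from a fixed script instead of calling a real model, and `FormulaMass` is a hypothetical tool backed by a three-element lookup table.

```python
import re

# Hypothetical tool data: a tiny table standing in for a real chemistry tool.
ATOMIC_MASS = {"H": 1.008, "C": 12.011, "O": 15.999}


def formula_mass(formula):
    """Sum atomic masses for a simple formula such as 'H2O' or 'CO2'."""
    total = 0.0
    for symbol, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[symbol] * (int(count) if count else 1)
    return f"{total:.3f}"


TOOLS = {"FormulaMass": formula_mass}


def scripted_llm(transcript):
    """Stand-in for a real LLM call: replies from a fixed script."""
    if "Observation:" not in transcript:
        return (
            "Thought: I need the mass of water.\n"
            "Action: FormulaMass\n"
            "Action Input: H2O"
        )
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation} g/mol"


def run_agent(question, llm, tools, max_steps=5):
    """Alternate LLM generation and tool execution until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)  # generation stops after Action Input
        transcript += reply + "\n"
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action:\s*(.*)", reply)
        action_input = re.search(r"Action Input:\s*(.*)", reply)
        if not action or not action_input:
            observation = "could not parse an action"
        else:
            tool = tools.get(action.group(1).strip())
            if tool is None:
                observation = "unknown tool"
            else:
                observation = tool(action_input.group(1).strip())
        # The tool result is fed back to the LLM under the Observation keyword.
        transcript += f"Observation: {observation}\n"
    return None, transcript


answer, transcript = run_agent(
    "What is the molar mass of water?", scripted_llm, TOOLS
)
print(transcript)
print("Answer:", answer)
```

The loop, rather than the model, executes the tool, so the model only ever sees tool output as text appended to its transcript.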

Through this iterative process, the LLM evolves from a self-assured, albeit occasionally flawed, source of information into a thinking engine that critically observes and reflects upon its findings, subsequently taking appropriate actions based on acquired knowledge. The researchers have integrated thirteen different tools to facilitate research and discovery. Although the toolset employed is not exhaustive, it can be effortlessly expanded by simply introducing additional tools and providing natural language descriptions of their intended purposes. ChemCrow serves as a user-friendly interface, catering to both professional chemists and individuals lacking specialized training in the field, ensuring access to reliable chemical information.
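One way to picture "introducing additional tools and providing natural language descriptions" is a registry in which each tool is a function and its docstring doubles as the description shown to the LLM. This is a hedged sketch of that design, not ChemCrow's actual mechanism. `TOOL_REGISTRY`, `register_tool`, `describe_tools`, and `count_atoms` are all hypothetical names.

```python
import inspect
import re

# Hypothetical registry mapping a tool name to its function and description.
TOOL_REGISTRY = {}


def register_tool(func):
    """Add a function to the registry; its docstring becomes the description."""
    description = inspect.getdoc(func)
    if not description:
        raise ValueError(
            f"tool {func.__name__!r} needs a natural language description"
        )
    TOOL_REGISTRY[func.__name__] = {"run": func, "description": description}
    return func


def describe_tools():
    """Return one 'name: description' line per registered tool."""
    return "\n".join(
        f"{name}: {entry['description']}" for name, entry in TOOL_REGISTRY.items()
    )


@register_tool
def count_atoms(formula: str) -> str:
    """Counts the atoms in a simple molecular formula such as 'C2H6O'."""
    pairs = re.findall(r"([A-Z][a-z]?)(\d*)", formula)
    return str(sum(int(count) if count else 1 for _, count in pairs))


print(describe_tools())
print(TOOL_REGISTRY["count_atoms"]["run"]("C2H6O"))
```

Under this design, adding a tool takes one decorated function, and a tool without a description is rejected at registration time because the LLM would have no basis for choosing it.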

Conclusion:

The introduction of ChemCrow, an LLM-powered chemistry engine, represents a significant advancement in the market. By combining language models with expert-designed tools, ChemCrow simplifies and enhances chemical tasks in various domains. Its ability to evolve from an information source to a thinking engine opens up new possibilities for professionals and non-experts alike. With its user-friendly interface and superior performance, ChemCrow is set to revolutionize the way chemistry-related work is conducted, catering to a wide range of users and driving innovation in the market.