TL;DR:

- ChemLLM is a pioneering dialogue-based language model tailored for chemistry.

- Developed by a team spanning six research institutions, it fills a gap: until now, no dialogue-based LLM was built specifically for chemistry.

- It addresses the challenge of structured chemical data through innovative instruction construction methods.

- ChemLLM’s training on dialogue-friendly formats ensures proficiency in processing complex chemical information.

- It outperforms established models such as GPT-3.5 in core chemical tasks like name conversion and reaction prediction.

- It adapts to related domains such as mathematics and physics.

- It handles specialized natural language processing tasks within chemistry, such as translating chemical literature.

- It has potential as a reliable assistant for a range of chemistry-related work.

- The code, datasets, and model weights are publicly available, which encourages further exploration and innovation.

Main AI News:

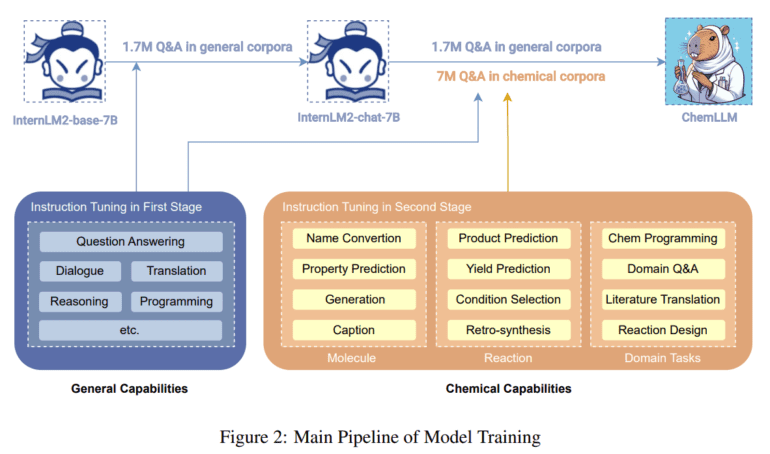

The emergence of domain-specific large language models (LLMs) marks a significant advance across many sectors. Chemistry, with its intricate demands, has long awaited a model capable of handling its complexities. Enter ChemLLM, a model jointly developed by researchers from Shanghai Artificial Intelligence Laboratory, Fudan University, Shanghai Jiao Tong University, Wuhan University, The Hong Kong Polytechnic University, and The Chinese University of Hong Kong. The team presents it as the first dialogue-based LLM built explicitly for chemistry. The project grew out of the recognition that no existing LLM was designed for the field.

The primary obstacle is the structured nature of chemical data, which typically lives in databases and does not match the conversational text that dialogue LLMs are trained on. ChemLLM tackles this with a template-based instruction construction method. By converting structured chemical data into a dialogue-friendly format, the method gives the model training material it can use to take part in chemical discourse.

Training begins with the conversion of structured chemical knowledge into dialogue form, so that the model can learn from these records as if they were natural conversations. This approach lets ChemLLM process intricate chemical information and hold coherent, contextually relevant discussions on chemistry-related topics. The model is then trained on a broad corpus of chemical data, spanning tasks from molecular property prediction to reaction forecasting, while retaining its general natural language ability.
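To make the idea of template-based instruction construction concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the team's actual pipeline: the record fields (`name`, `smiles`), the task labels, the template wording, and the helper names `record_to_dialogue` and `build_dataset` are all hypothetical.

```python
import random

# Hypothetical question templates; the article does not list the real ones.
TEMPLATES = {
    "name_to_smiles": [
        "What is the SMILES representation of {name}?",
        "Could you give me the SMILES string for {name}?",
    ],
    "smiles_to_name": [
        "Which compound has the SMILES {smiles}?",
        "What is the name of the molecule written as {smiles}?",
    ],
}

# One answer template per task (also an assumption made for this sketch).
ANSWERS = {
    "name_to_smiles": "The SMILES representation of {name} is {smiles}.",
    "smiles_to_name": "The molecule {smiles} is {name}.",
}


def record_to_dialogue(record, task, rng):
    """Turn one structured database record into a single-turn dialogue."""
    # Pick one of several phrasings so the questions are not all identical.
    question = rng.choice(TEMPLATES[task]).format(**record)
    answer = ANSWERS[task].format(**record)
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]


def build_dataset(records, seed=0):
    """Build one dialogue per (record, task) pair, reproducibly."""
    rng = random.Random(seed)
    return [
        record_to_dialogue(record, task, rng)
        for record in records
        for task in TEMPLATES
    ]


# Toy structured records, standing in for rows from a chemical database.
records = [
    {"name": "ethanol", "smiles": "CCO"},
    {"name": "benzene", "smiles": "c1ccccc1"},
]
dataset = build_dataset(records)
```

Each database row yields one dialogue per task, so the two toy records above produce four user/assistant exchanges. Sampling among several question phrasings is one simple way to keep templated data from sounding uniform; whether ChemLLM's pipeline does this is not stated in the article.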

The authors report that ChemLLM outperforms GPT-3.5 on three core chemical tasks (name conversion, molecular captioning, and reaction prediction) and surpasses GPT-4 on two of them. These results point to a solid grasp of chemical principles and an ability to apply that knowledge. Notably, despite its primary focus on chemistry, ChemLLM also adapts well to related domains such as mathematics and physics, which suggests utility beyond its core domain.

The model also performs well on specialized natural language processing tasks within chemistry. From translating chemical literature to programming in cheminformatics, ChemLLM shows a nuanced understanding of the field's language and its practical applications. This positions ChemLLM as a dependable assistant for a wide range of chemistry-related work, offering answers grounded in chemical knowledge.

By making the model's code, datasets, and weights publicly accessible, the research team has opened the way for broad exploration of how LLMs can be integrated into chemistry. This open approach encourages the wider scientific community to adopt and adapt the model, and supports a culture of collaboration and continuous improvement in the field.

Conclusion:

The introduction of ChemLLM marks a significant milestone in integrating AI with chemistry, offering enhanced capabilities in processing and understanding complex chemical data. Its adaptability and proficiency across various tasks signal promising opportunities for innovation and efficiency within the scientific community. As ChemLLM becomes more accessible, we anticipate accelerated advancements in chemistry-related fields, driven by collaborative exploration and utilization of this groundbreaking technology.