TL;DR:

- AI and deep learning have transformed many fields, including medical imaging.

- Chest X-ray (CXR) interpretation stands to benefit from vision-language foundation models (FMs).

- Limited vision-language data and a lack of robust evaluation frameworks have slowed FM development for CXR analysis.

- Researchers from Stanford and Stability AI introduce CheXinstruct, an instruction-tuning dataset built from 28 public sources, to improve how FMs interpret CXRs.

- CheXagent, an 8-billion-parameter instruction-tuned FM, combines a clinical large language model (LLM), a vision encoder, and a network that bridges the two.

- CheXbench evaluates FMs on eight CXR interpretation tasks; CheXagent outperforms both general- and medical-domain FMs.

- CheXagent performs strongly in view classification, disease classification and identification, and text tasks such as report generation and summarization.

- A fairness assessment across sex, race, and age probes for performance disparities, and the authors note that outputs still need to align more closely with human radiologist standards.

Main AI News:

Artificial intelligence (AI) has transformed domains ranging from natural language understanding to computer vision, and medical imaging is no exception. Chest X-rays (CXRs) are among the most commonly used diagnostic tools and carry substantial clinical importance. Vision-language foundation models (FMs) have opened the door to automated CXR interpretation, with the potential to support clinical decision-making and improve patient care.

Building effective FMs for CXR interpretation is difficult. Progress has been held back by the scarcity of large vision-language datasets, the complexity of medical data, and the lack of robust evaluation frameworks. Conventional methods often fail to capture the relationship between visual findings and their medical interpretation, which limits the accuracy of CXR interpretation models.

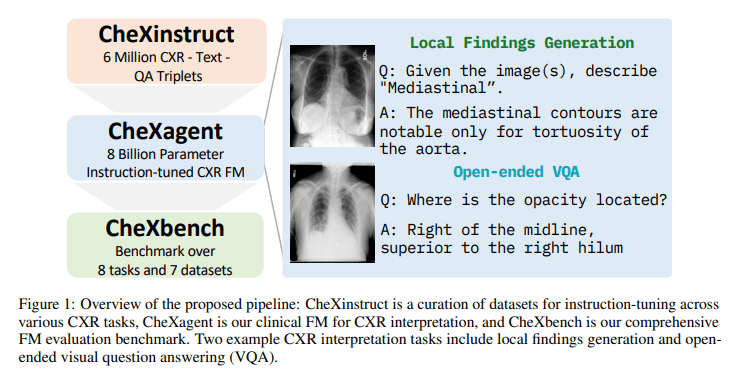

Researchers at Stanford University, in collaboration with Stability AI, have introduced CheXinstruct, a curated instruction-tuning dataset drawn from 28 publicly available sources and designed to improve the CXR interpretation capabilities of FMs. Alongside it, the team introduced CheXagent, an instruction-tuned FM for CXR interpretation with 8 billion parameters. CheXagent combines three components: a clinical large language model (LLM) that understands radiology reports, a vision encoder that represents CXR images, and a bridging network that connects the visual and linguistic modalities. Together, these components allow CheXagent to analyze CXRs and summarize their findings.
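The three-part design described above (language model, vision encoder, bridge) is common to many vision-language models. The sketch below illustrates the general idea under stated assumptions: it treats the bridging network as a single linear projection that maps each image-patch feature from a hypothetical vision encoder into the LLM's embedding space, then prepends those "visual tokens" to the embedded text prompt. The function names, toy dimensions, and the linear-projection choice are all illustrative assumptions, not CheXagent's actual implementation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def project(features: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Map one vision feature vector (length d_v) to the LLM embedding space (length d_llm).

    `weight` has one row per output dimension, i.e. shape (d_llm, d_v).
    """
    if len(weight) != len(bias):
        raise ValueError("weight and bias must have the same output dimension")
    if any(len(row) != len(features) for row in weight):
        raise ValueError("weight rows must match the vision feature dimension")
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weight, bias)]


def bridge(patch_features: List[Vector], weight: Matrix, bias: Vector) -> List[Vector]:
    """Hypothetical bridging network: one linear projection applied to every image patch."""
    return [project(patch, weight, bias) for patch in patch_features]


def build_llm_input(
    patch_features: List[Vector],
    weight: Matrix,
    bias: Vector,
    text_embeddings: List[Vector],
) -> List[Vector]:
    """Prepend projected 'visual tokens' to the embedded text prompt."""
    visual_tokens = bridge(patch_features, weight, bias)
    # Every token the LLM attends over must live in the same embedding space.
    if any(len(tok) != len(bias) for tok in text_embeddings):
        raise ValueError("text embeddings must have the LLM embedding dimension")
    return visual_tokens + text_embeddings


# Toy example: 2 image patches with d_v = 3, projected into d_llm = 2.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
b = [0.0, 0.5]
patches = [[1.0, 2.0, 3.0], [0.0, 0.0, 1.0]]
prompt = [[0.1, 0.2]]  # stands in for the embedded text prompt
print(build_llm_input(patches, W, b, prompt))  # [[1.0, 5.5], [0.0, 1.5], [0.1, 0.2]]
```

A linear projection is the simplest possible bridge; real systems may use deeper networks or learned query tokens instead. The key idea the sketch shows is that image information reaches the language model as a sequence of vectors in the same space as its text embeddings.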

To measure the effectiveness of these contributions, the team also introduced CheXbench, a benchmark for systematically comparing FMs across eight clinically relevant CXR interpretation tasks that test both image perception and textual understanding. On CheXbench, CheXagent outperformed both general-domain and medical-domain FMs.

CheXagent's advantage was evident across tasks including view classification, binary disease classification, single- and multi-disease identification, and visual question answering. On the textual side, it performed well at generating medically accurate reports and summarizing findings, as assessed by expert radiologists.

The evaluation also included a fairness assessment across sex, race, and age groups to identify potential performance disparities and make the model's behavior more transparent. Although CheXagent led in performance, the researchers acknowledge that further refinement is needed, particularly to bring its outputs in line with the standards of human radiologists.
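The fairness metric is not described here, so the following is only a hedged illustration of what "identifying performance disparities" can mean in practice: compute a metric separately for each demographic subgroup, then report the gap between the best- and worst-performing groups. The record format, the use of accuracy, and the function names are hypothetical choices made for this sketch.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def subgroup_accuracy(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Accuracy per subgroup, from (subgroup_label, prediction_was_correct) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def max_disparity(accuracy_by_group: Dict[str, float]) -> float:
    """Largest accuracy gap between any two subgroups (0.0 means parity)."""
    if not accuracy_by_group:
        raise ValueError("need at least one subgroup")
    return max(accuracy_by_group.values()) - min(accuracy_by_group.values())


# Toy predictions grouped by a hypothetical sex attribute.
records = [
    ("female", True), ("female", True), ("female", False), ("female", True),
    ("male", True), ("male", False),
]
acc = subgroup_accuracy(records)
print(acc)                 # {'female': 0.75, 'male': 0.5}
print(max_disparity(acc))  # 0.25
```

Accuracy is only one option; the same per-group breakdown applies to other metrics such as F1, and to race or age groups in place of sex.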

Conclusion:

The introduction of CheXagent and the CheXinstruct dataset marks a significant advance in AI-assisted CXR interpretation. The technology has the potential to reshape the medical imaging market by improving diagnostic accuracy, streamlining clinical decision-making, and enhancing patient care. It underscores the growing role of AI in healthcare and sets the stage for further innovation in the field.