- China implements AI chatbot verification using “source blocking” to censor negative information about President Xi Jinping.

- The Cyberspace Administration of China (CAC) requires AI giants and startups alike to take part in government censorship of large language models (LLMs).

- LLMs are the models that power AI chatbots, and the quality of the text data they are trained on directly affects chatbot capabilities.

- CAC monitors AI chatbot responses to sensitive queries about President Xi and Chinese politics, ensuring compliance.

- Chinese AI companies must manage “sensitive keywords” and maintain dedicated databases to pass censorship.

- Ambiguous censorship standards pose challenges: the guidelines keep expanding and now cover thousands of sensitive keywords.

Main AI News:

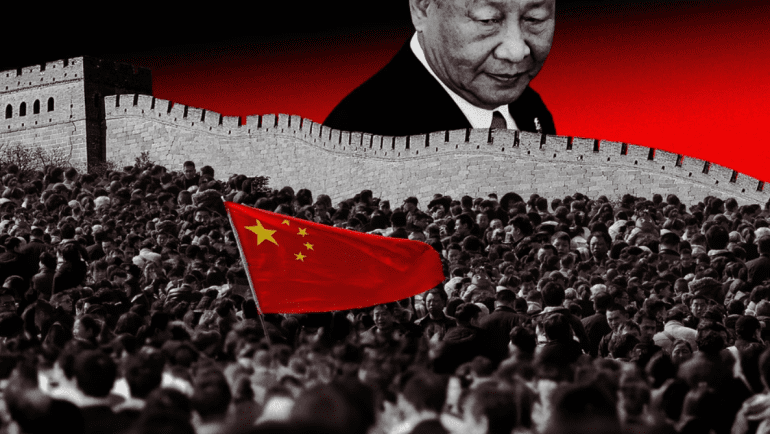

China has initiated trials of its AI chatbot verification process, employing a “source blocking” technique to censor negative information about China and President Xi Jinping from AI systems. Concurrently, China has launched its own AI chatbot imbued with President Xi’s philosophical principles.

The Financial Times (FT) reported on the 17th (local time) that the Cyberspace Administration of China (CAC) is requiring AI giants and startups alike to take part in the government’s censorship of large language models (LLMs). This oversight extends to prominent tech companies such as ByteDance and Alibaba, as well as numerous smaller startups.

LLMs are the models that power AI chatbots, and their training text works much like a textbook: the quality of that data directly affects the bots’ capabilities. According to FT sources, CAC officials across China are putting sensitive questions about President Xi and critical questions about Chinese political affairs to AI chatbots, then scrutinizing the responses. The CAC also reviews the data integrity and security protocols of LLMs.

Chinese AI firms must take specific measures to pass censorship, including curating lists of “sensitive keywords” and maintaining dedicated databases. Guidelines issued by Chinese authorities in February cite sensitive categories such as “subversion of state power” and “hindrance to national unity.” AI companies must update their keyword lists weekly, because the directives are broadly worded and continue to expand, now spanning thousands of keywords.

Ambiguities in the censorship standards pose challenges. An employee at an AI company in Hangzhou, China, remarked, “I failed the initial CAC review without clear reasons, leading to months of internal speculation.” The guidelines also require AI chatbots to handle sensitive queries in varied ways rather than simply declining them, with refusals limited to less than 5% of all queries posed.

Major AI players appear to have met the regulatory standards. Baidu’s chatbot prompts users to ask a different question when asked about sensitive topics such as the Tiananmen incident or President Xi, while Alibaba’s chatbot responds evasively, “I am still learning how to answer that.”

This regulatory framework is perceived as bolstering China’s socialist governance through AI chatbots, exemplified by the development and distribution of an AI chatbot aligned with “Xi Jinping Thought on Socialism with Chinese Characteristics for a New Era.” CAC actively promotes this initiative.

Conclusion:

China’s stringent regulatory framework for AI, highlighted by its control over AI chatbots through censorship and compliance mandates, underscores a proactive approach to shaping AI’s role within national governance. This robust system aims to consolidate state power and ideological control, potentially influencing global AI development trends towards increased regulatory scrutiny and compliance requirements.