TL;DR:

- China’s major internet companies rush to purchase Nvidia chips worth $5 billion for AI development.

- Baidu, ByteDance, Tencent, and Alibaba place orders for A800 processors and GPUs.

- Export restrictions prompt Chinese firms to opt for A800 chips due to limitations on A100s.

- Nvidia GPUs have become a vital resource for training large language models.

- Concerns over potential US export controls drive increased chip stockpiling.

- China’s internet giants develop homegrown large language models to emulate ChatGPT.

- ByteDance’s AI chatbot Grace undergoes internal testing; the company stockpiles Nvidia GPUs.

- Alibaba plans to integrate a large language model into its products; Baidu develops Ernie Bot.

- High demand for AI hardware is driving up chip prices.

- Tencent Cloud releases a server cluster powered by Nvidia H800 GPUs; Alibaba Cloud has also procured H800 chips.

- ByteDance provides cloud computing solutions with stockpiled Nvidia chips; introduces trial platform.

Main AI News:

China’s major internet companies are rushing to buy high-performance Nvidia chips, which are crucial for building generative artificial intelligence systems. In a buying spree spurred by concerns over potential US export restrictions, Baidu, ByteDance, Tencent, and Alibaba have collectively placed orders worth $1 billion for around 100,000 A800 processors from the US chipmaker. These orders are due to be delivered this year, according to several sources with knowledge of the matter. The Chinese tech groups have also placed a further $4 billion in orders for graphics processing units, scheduled for delivery in 2024.

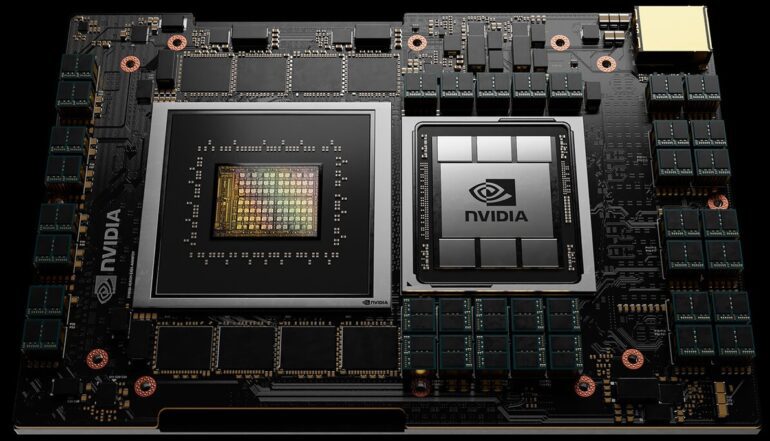

The A800 is a modified version of Nvidia’s cutting-edge A100 GPU, which is designed primarily for data centers. Export restrictions imposed by the US government last year, aimed at curbing Beijing’s technological ambitions, mean that Chinese technology companies can buy only A800s, which have slower data transfer rates than A100s. As excitement around artificial intelligence has grown, Nvidia’s GPUs have become a highly sought-after commodity for the world’s largest technology firms, which use them to develop large language models.

The Chinese internet giants are stockpiling A800 chips out of concern that the Biden administration might consider new export restrictions covering even Nvidia’s less powerful chips, which would compound the GPU shortage already caused by overwhelming demand. Washington recently announced plans to prohibit, starting next year, certain US investments in China’s quantum computing, advanced chip manufacturing, and artificial intelligence sectors.

A Baidu employee who asked to remain anonymous said, “Without these Nvidia chips, we can’t pursue the training for any large language model.” These companies are building their own large language models following the success of ChatGPT, the breakthrough chatbot introduced by Microsoft-backed OpenAI roughly eight months ago. ByteDance, for instance, has multiple small teams working on different generative AI products. Among them is an AI chatbot called Grace, which is undergoing internal testing. Earlier this year, ByteDance trialed a generative AI feature for its social media platform TikTok, named TikTok Tako, which relies on OpenAI’s ChatGPT. Sources within the company said ByteDance has already stockpiled 10,000 Nvidia GPUs to support its ambitions. The firm has also ordered nearly 70,000 A800 chips, worth an estimated $700 million, for delivery next year.

Alibaba, meanwhile, plans to integrate a large language model into its entire product lineup, including its online retail site Taobao and its mapping tool Gaode Map. Baidu is developing its own ChatGPT-like product, a generative AI chatbot called Ernie Bot. Baidu, ByteDance, Tencent, and Alibaba declined to comment. Nvidia said in a statement, “Consumer internet companies and cloud providers invest billions of dollars on data center components every year, often placing orders many months in advance.”

Earlier this year, as the AI excitement gained momentum, most of China’s leading internet companies held fewer than a few thousand chips suitable for training large language models. According to multiple sources within Chinese tech companies, rising demand has since pushed chip prices up sharply. “The price of the A800 in the hands of distributors has risen by more than 50 percent,” said an Nvidia distributor.

In April, Tencent Cloud unveiled a new server cluster powered by the Nvidia H800 GPU, a version of the latest H100 model customized for China. The hardware can support large language model training, autonomous driving systems, and scientific computing. Similarly, Alibaba Cloud has procured H800 chips from Nvidia, and many customers have approached the company seeking cloud services powered by these chips. Their aim is to replicate the success of ChatGPT in the Chinese market. ByteDance is also offering cloud computing services that draw on its stockpile of Nvidia A800 and A100 chips. In June, the company introduced a platform that lets corporations try out various large language model services.

Conclusion:

The substantial investments by China’s internet giants in Nvidia chips underscore their determination to advance their AI ambitions. The rush to procure A800 processors and other GPUs reflects the urgency of securing AI hardware amid concerns about potential export controls. The push to develop homegrown large language models points to intensifying competition in AI, while rising chip prices reflect the growing value of these resources. The new server clusters and cloud computing services highlight the ongoing race to apply AI across a range of uses.