TL;DR:

- Cloudflare to deploy Nvidia GPUs in its global Edge network for AI applications.

- Aims to make AI inference accessible and cost-effective worldwide.

- Strategic collaboration includes Nvidia Ethernet switches and software stack.

- Rapid GPU deployment in 100+ cities by the end of 2023, expanding further in 2024.

- Initial support for predefined AI models, with plans to add more.

- Potential for responsive customer experiences and innovation across industries.

Main AI News:

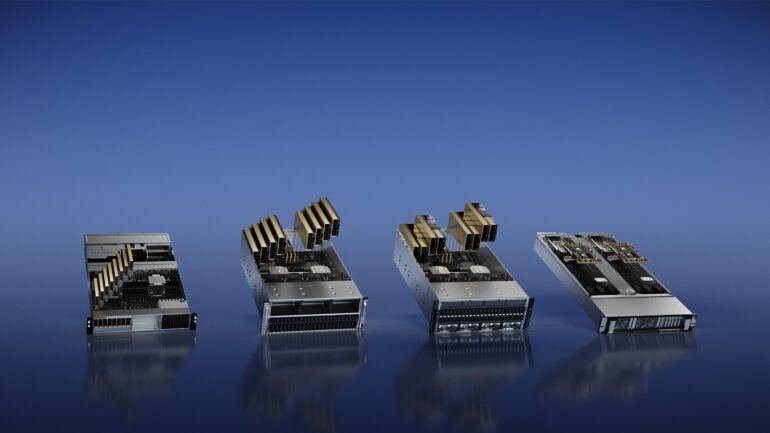

Content delivery network (CDN) giant Cloudflare has unveiled plans to deploy Nvidia GPUs across its global Edge network. The initiative is intended to make AI inference more accessible and affordable for generative AI applications, with a particular focus on large language models.

Matthew Prince, CEO and co-founder of Cloudflare, expects AI inference on a network to become the “sweet spot” for many businesses. By deploying Nvidia’s GPUs across its network, Cloudflare aims to put inference capabilities that were previously out of reach for many customers within their grasp, regardless of geographic location.

“AI inference on a network is going to be the sweet spot for many businesses: private data stays close to wherever users physically are, while still being extremely cost-effective to run because it’s nearby,” Prince said. “With Nvidia’s state-of-the-art GPU technology on our global network, we’re making AI inference – that was previously out of reach for many customers – accessible and affordable globally.”

Cloudflare’s collaboration with Nvidia extends beyond GPU deployment to include Nvidia Ethernet switches and Nvidia’s full-stack inference software, including Nvidia TensorRT-LLM and Nvidia Triton Inference Server. The combination is meant to equip Cloudflare’s Edge network for demanding AI workloads.

The rollout of Nvidia GPUs is slated for rapid expansion, with deployment in over 100 cities planned by the close of 2023. By the end of 2024, Cloudflare’s AI-powered Edge network is set to reach “nearly everywhere Cloudflare’s network extends,” underscoring the company’s commitment to global accessibility.

“Nvidia’s inference platform is critical to powering the next wave of generative AI applications,” said Ian Buck, VP of hyperscale and HPC at Nvidia. The collaboration is intended to help businesses across industries build responsive customer experiences and drive innovation.

At launch, however, Cloudflare’s AI Edge network will support only a predefined set of models: Meta’s Llama 2 7B and M2M100-1.2B, OpenAI’s Whisper, Hugging Face’s DistilBERT-SST-2-int8, Microsoft’s ResNet-50, and BAAI’s bge-base-en-v1.5. Cloudflare has said it intends to broaden model support in the future, working with partners such as Hugging Face.

Conclusion:

The partnership between Cloudflare and Nvidia marks a notable shift in the AI market landscape. By bringing Nvidia’s GPU technology to its global Edge network, Cloudflare aims to make AI inference more accessible and cost-effective for businesses worldwide. The move has the potential to drive innovation and reshape customer experiences across industries, and it positions Cloudflare as a key player in the AI ecosystem.