TL;DR:

- CMU introduces BUTD-DETR, an AI model that directly conditions spoken utterances to detect all mentioned objects in images.

- The model functions as a regular object detector when given a list of object categories and excels in 3D language grounding.

- With deformable attention enhancements, BUTD-DETR achieves comparable performance to MDETR in 2D while converging twice as quickly.

- The model unifies grounding models for 2D and 3D applications, adaptable to both dimensions with minor adjustments.

- BUTD-DETR outperforms state-of-the-art methods in 3D language grounding benchmarks and wins the ReferIt3D competition.

Main AI News:

The realm of computer vision hinges on the ability to identify all the “objects” present in an image accurately. While creating a vocabulary of categories and training models to recognize instances of these categories has been a prevailing approach, it still leaves a fundamental question unanswered – “What truly defines an object?” This quandary is further exacerbated when these object detectors are deployed as practical home agents. Often, models learn to select the most relevant item from a pool of object suggestions offered by pre-trained detectors when confronted with referential utterances in either 2D or 3D settings. Regrettably, this can lead to missed detections of utterances pertaining to more nuanced visual elements, such as the chair, its legs, or even the front tip of a chair leg.

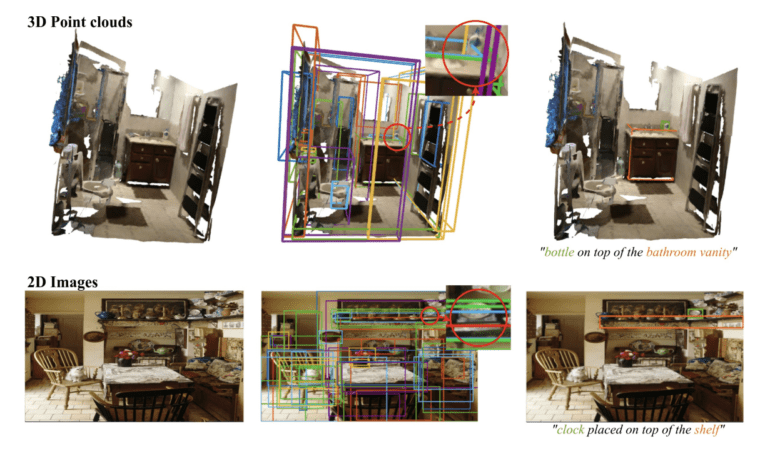

A dedicated team of researchers from Carnegie Mellon University now introduces the Bottom-up, Top-Down DEtection TRansformer, affectionately known as BUTD-DETR, a groundbreaking model that directly conditions itself on spoken language utterances to detect and identify all mentioned items. When provided with a list of object categories as an utterance, BUTD-DETR functions as a regular object detector. Its training data comprises image-language pairs labeled with bounding boxes for all referenced items in the speech, combined with fixed-vocabulary object detection datasets. However, the brilliance of BUTD-DETR lies in its versatility, as it can also anchor language phrases within 3D point clouds and 2D images with a few clever adjustments.

Unlike random selection from a pool, BUTD-DETR intelligently decodes object boxes by attentively processing both verbal and visual inputs. Although the bottom-up, task-agnostic attention might overlook some intricate details while locating an item, the language-directed attention promptly fills in the gaps. The model takes in both a scene and a spoken utterance as input, extracting box suggestions from an already trained detector. Subsequently, visual, box and linguistic tokens are extracted from the scene, boxes, and speech using specialized encoders for each modality. These tokens imbue significance within their context by paying attention to one another, while refined visual cues initiate object queries that decode boxes spanning multiple streams.

The practice of object detection exemplifies grounded referential language, where the uttered words serve as category labels for the objects being detected. For training, researchers employ object detection as the referential grounding for detection prompts by randomly selecting certain object categories from the detector’s vocabulary and synthesizing utterances by sequencing them, such as “Couch. Person. Chair.” These detection cues supplement the model with supervision information, aiming to identify all instances of the specified category labels within the scene. Importantly, the model is instructed not to form box associations for category labels that lack corresponding visual input examples, like “person” in the aforementioned example. Such an approach allows a single model to perform both language grounding and object recognition simultaneously, leveraging the same training data for both tasks.

The outcomes of this research endeavor have been quite remarkable. While the MDETR-3D equivalent displays underwhelming performance compared to earlier models, BUTD-DETR attains a state-of-the-art performance in 3D language grounding. Moreover, BUTD-DETR extends its capabilities to the 2D domain and, with ingenious architectural enhancements like deformable attention, achieves comparable performance to MDETR while converging at twice the speed. This significant progress takes a crucial step towards unifying grounding models for both 2D and 3D applications, as it can seamlessly adapt to function effectively in both dimensions with minimal adjustments.

For all 3D language grounding benchmarks, BUTD-DETR showcases remarkable performance gains over state-of-the-art methods like SR3D, NR3D, and ScanRefer. Notably, it emerged as the top-performing submission in the ECCV workshop on Language for 3D Scenes, featuring the competitive ReferIt3D competition. Furthermore, when trained on vast amounts of data, BUTD-DETR has the potential to rival the best existing approaches for 2D language grounding benchmarks. The researchers’ efficient deformable attention for the 2D model facilitates twice as rapid convergence compared to state-of-the-art MDETR, signaling a promising future for this cutting-edge AI model.

Conclusion:

The introduction of BUTD-DETR represents a significant advancement in the field of AI-based object detection. Its ability to directly condition language utterances and detect all mentioned items in images has vast implications for various industries. By bridging the gap between language and visual understanding, this model can greatly improve the capabilities of home agents, robotics, and any application that relies on precise object detection and recognition. Furthermore, its success in both 2D and 3D settings signifies a promising direction for unified grounding models. Businesses involved in computer vision, AI research, and smart technology development should closely monitor the integration and commercialization of BUTD-DETR to stay competitive in a rapidly evolving market.