TL;DR:

- AdaTest++ is an advanced tool that transforms the auditing of Large Language Models (LLMs).

- Auditing LLMs has traditionally lacked structure and can be labor-intensive.

- AdaTest++ introduces a sensemaking framework with four key stages: Surprise, Schemas, Hypotheses, and Assessment.

- Key features include Prompt Templates, Organized Testing, and Top-Down/Bottom-Up Exploration.

- Auditors can validate hypotheses and refine understanding through iterative testing.

- AdaTest++ improves auditor efficiency and collaboration with LLMs.

Main AI News:

Large Language Models (LLMs) are progressively woven into the fabric of various applications, raising concerns about their ethical and unbiased performance. The conventional audit process, however, is often laborious, lacks structure, and falls short of uncovering all potential issues. Enter AdaTest++, a groundbreaking auditing tool introduced by researchers, poised to reshape the LLM auditing landscape.

Auditing LLMs is an intricate and resource-intensive endeavor. The current manual testing approach, aimed at unveiling biases, errors, and undesirable outputs, is far from optimal. What is needed is a more refined auditing framework that streamlines the process, fosters better comprehension, and bolsters communication between auditors and LLMs.

Traditional audit methods frequently rely on ad-hoc trials in which auditors interact with the model to unearth issues. While this approach may identify some problems, it is neither systematic nor comprehensive, so it is hard to judge how much of the model's behavior an audit has actually covered.

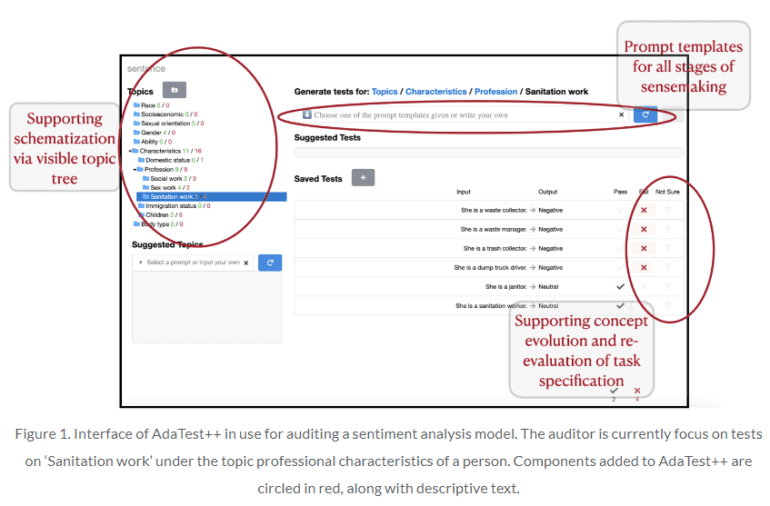

AdaTest++, the brainchild of forward-thinking researchers, steps in to address these limitations. It leverages a sensemaking framework to guide auditors through a four-stage process: Surprise, Schemas, Hypotheses, and Assessment.

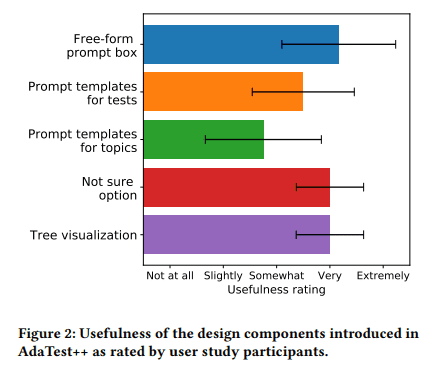

The tool incorporates several pivotal features to enhance the auditing journey:

- Prompt Templates: AdaTest++ equips auditors with a library of prompt templates. These templates facilitate the translation of hypotheses into precise and reusable prompts. This streamlined approach simplifies the testing and validation of bias, accuracy, or appropriateness of model responses.

- Organized Testing: AdaTest++ offers features to systematically categorize tests into meaningful schemas. Auditors can group tests by common themes or model behavior patterns. This organization improves efficiency and simplifies tracking and analysis.

- Top-Down and Bottom-Up Exploration: AdaTest++ accommodates both top-down and bottom-up approaches to auditing. Auditors can initiate the process with predefined hypotheses or rely on the tool to generate test suggestions, revealing unexpected model behaviors.

- Validation and Refinement: In the final stage, auditors can validate their hypotheses with tests that provide supporting or counter-evidence. AdaTest++ enables iterative testing and hypothesis modification, fostering a deeper understanding of the LLM’s capabilities and limitations.
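The features above can be illustrated with a minimal Python sketch. This is not AdaTest++'s actual API: the names `Hypothesis`, `AuditTest`, and `group_by_schema`, the example template, and the `supports` label are all hypothetical, chosen only to show how a hypothesis might be turned into reusable prompts, grouped under a schema, and then checked against supporting or counter-evidence.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from string import Template
from typing import Optional


@dataclass
class AuditTest:
    """One concrete prompt, filed under a schema (theme). Hypothetical structure."""
    prompt: str
    schema: str
    # Set by the auditor after reading the model output:
    # True = supports the hypothesis, False = counter-evidence, None = unlabeled.
    supports: Optional[bool] = None


@dataclass
class Hypothesis:
    """A hypothesis paired with a reusable prompt template."""
    statement: str
    template: Template
    schema: str
    tests: list = field(default_factory=list)

    def instantiate(self, **slots):
        # Fill the template's $placeholders to produce a concrete test.
        test = AuditTest(self.template.substitute(**slots), self.schema)
        self.tests.append(test)
        return test

    def evidence(self):
        # Count labeled tests as (supporting, counter-evidence).
        supporting = sum(t.supports is True for t in self.tests)
        counter = sum(t.supports is False for t in self.tests)
        return supporting, counter


def group_by_schema(tests):
    """Organize tests by their schema so related findings stay together."""
    grouped = defaultdict(list)
    for t in tests:
        grouped[t.schema].append(t)
    return dict(grouped)


# Top-down use: start from a hypothesis and generate tests from its template.
hypothesis = Hypothesis(
    statement="The model's tone shifts with the profession named in the prompt",
    template=Template("Describe a typical day for a $profession in one sentence."),
    schema="profession/tone",
)
nurse = hypothesis.instantiate(profession="nurse")
engineer = hypothesis.instantiate(profession="engineer")

# In a real audit these labels would come from the auditor's judgment of the
# model's responses; they are hard-coded here purely for illustration.
nurse.supports = True
engineer.supports = False

print(hypothesis.evidence())  # (1, 1)
```

In this sketch, a mixed result such as one supporting and one counter-example would prompt the auditor to narrow or revise the hypothesis and generate further tests, which mirrors the iterative refinement described above.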

AdaTest++ has demonstrated remarkable efficacy, enabling auditors to uncover unexpected model behaviors, systematically organize findings, and refine their comprehension of LLMs. This collaborative synergy between auditors and LLMs, facilitated by AdaTest++, builds transparency and trust in AI systems.

In conclusion, AdaTest++ stands as a formidable solution to the challenges of auditing Large Language Models. It empowers auditors with a potent and systematic tool to comprehensively assess model behavior, identify potential biases or errors, and refine their understanding. This contribution significantly advances the responsible deployment of LLMs across domains, championing transparency and accountability in AI systems.

Source: Marktechpost Media Inc.

Conclusion:

The introduction of AdaTest++ marks a notable step forward in the auditing of Large Language Models. Its systematic approach and collaborative features empower auditors to assess model behavior comprehensively, enhancing transparency and trust in AI systems. This innovation responds to the growing demand for reliable auditing tools, positioning AdaTest++ as a key player in the evolving landscape of AI ethics and accountability.