TL;DR:

- Cohere AI introduces Aya initiative to bridge language gaps in NLP.

- Aya includes a curated dataset spanning 65 languages, enhancing diversity in language modeling.

- The project encompasses annotation tools, multilingual datasets, and evaluation suites.

- Aya aims to boost inclusivity by translating datasets into 114 languages and generating 513 million instances.

- All components of Aya, including the annotation platform and datasets, are open-sourced under an Apache 2.0 license.

Main AI News:

The realm of Artificial Intelligence (AI) leans heavily on datasets, particularly in the domain of language modeling. Recent strides in Natural Language Processing (NLP) owe much to the adeptness of Large Language Models (LLMs) in promptly responding to directives. This proficiency stems from the meticulous fine-tuning of pre-existing models, underscoring the indispensability of well-annotated datasets.

Nevertheless, the bulk of available datasets primarily cater to the English language. Cohere AI’s team, in a recent endeavor, endeavors to bridge this linguistic divide by crafting a meticulously curated dataset focused on instruction-following, accessible across 65 languages. Collaborating with native speakers globally, they’ve amassed authentic instances of instructions and completions, spanning diverse linguistic landscapes.

The aspiration extends beyond merely assembling the largest multilingual repository to encompass translating existing datasets into 114 languages and generating 513 million instances via templating methodologies. This strategic endeavor aims to enrich the diversity and inclusivity of training data for language models.

Dubbed the Aya initiative, Cohere AI unveils four pivotal components integral to the project’s framework:

- The Aya Annotation Platform streamlines annotation processes, catering to 182 languages and dialects, facilitating the acquisition of high-quality multilingual data in an instruction-oriented format. Operational for eight months, it boasts 2,997 users from 119 countries, conversant in 134 languages, underscoring its global reach.

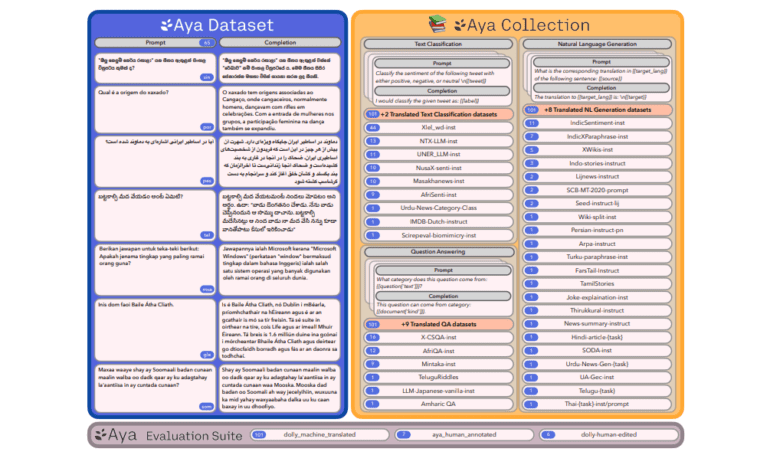

- The Aya Dataset represents the epitome of a human-curated compilation, featuring over 204K examples across 65 languages, tailored for multilingual instruction fine-tuning.

- Aya Collection amalgamates instruction-style templates sourced from proficient speakers, applied across 44 meticulously chosen datasets spanning tasks like open-domain question answering, machine translation, text classification, generation, and paraphrasing. The repository spans 114 languages, boasting 513 million instances, constituting the largest open-source reservoir for multilingual instruction fine-tuning data.

- Aya Evaluation encompasses a diverse test suite for assessing the quality of multilingual open-ended generation. It comprises original English prompts alongside 250 human-crafted prompts for each of seven languages, 200 automatically translated yet human-vetted prompts across 101 languages (including dialects), and human-edited prompts for six languages.

- Open Source: The Aya Annotation Platform’s code, alongside Aya Dataset, Aya Collection, and Aya Evaluation Suite, are all released under the permissive Apache 2.0 license, embodying a commitment to fostering collaborative development within the AI community.

Conclusion:

Cohere AI’s Aya initiative marks a significant leap towards linguistic inclusivity in the AI market. By addressing the language gap in NLP through curated datasets and annotation tools, Aya opens avenues for broader language modeling applications. The project’s commitment to open-source principles fosters collaboration, potentially catalyzing further innovation and market expansion in multilingual AI technologies.