TL;DR:

- Silicon Valley’s d-Matrix secures $110 million in Series B funding.

- Temasek led the round, with Playground Global and Microsoft participating.

- CEO Sid Sheth emphasizes the value of experienced semiconductor investors.

- d-Matrix specializes in AI chips optimized for generative AI applications.

- Its “in-memory compute” technology reduces energy consumption.

- d-Matrix focuses on AI inference, distinguishing itself from Nvidia.

- Microsoft commits to evaluating d-Matrix’s chip for future use.

- Revenue projection for this year: under $10 million.

- Ambitious goal to generate $70-75 million in annual revenue within two years.

Main AI News:

Silicon Valley-based AI chip startup d-Matrix has raised $110 million in its latest funding round. The result stands out at a time when many chip companies have struggled to attract investors.

The Series B round was led by Singapore’s Temasek, with participation from Playground Global of Palo Alto, California, and Microsoft. CEO Sid Sheth highlighted the investors' semiconductor experience: “This is capital that understands what it takes to build a semiconductor business. They’ve done it in the past. This is capital that can stay with us for the long term.”

d-Matrix began raising this round about a year ago. The company did not disclose its valuation; it had previously raised $44 million.

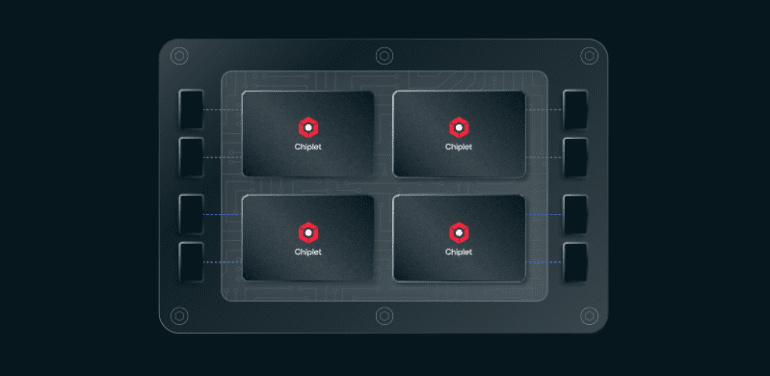

d-Matrix designs chips optimized for generative AI applications such as ChatGPT. Its distinguishing feature is a digital “in-memory compute” approach, which performs calculations where data is stored instead of repeatedly moving it between memory and a separate processor, making AI code run more efficiently. The company says this lowers the energy needed to generate AI responses while maintaining strong performance.

d-Matrix differentiates itself from Nvidia by focusing on inference, the work of running already-trained models to produce responses, rather than competing in training large AI models. Playground partner Sasha Ostojic described the company's progress: “We have solved the computer architecture, the low power requirements, and the needs of data centers. We’ve built a software stack that delivers industry-leading low latency by orders of magnitude.”

Microsoft has committed to evaluating d-Matrix’s chip for its own use when the chip launches, which is expected next year.

For this year, d-Matrix projects revenue of under $10 million, mostly from customers evaluating its chips. Sheth said the company aims to reach $70 million to $75 million in annual revenue within two years and to become profitable. The new capital is meant to carry d-Matrix through that ramp as it tries to establish itself in the AI chip market.

Conclusion:

d-Matrix’s $110 million round, backed by experienced semiconductor investors, shows continued investor interest in AI chips despite a difficult funding climate. Its focus on energy-efficient inference sets it apart from Nvidia and could make it a challenger in that segment, while Microsoft’s commitment to evaluate its chip offers early validation.