TL;DR:

- DALL-E 3 is pushing text-to-image models forward with its impressive image generation capabilities.

- It still faces challenges in spatial awareness, text rendering, and maintaining specificity in generated images.

- A novel training approach combines synthetic and ground-truth captions to enhance DALL-E 3’s capabilities.

- This approach improves the model’s grasp of textual context as well as the quality of its images.

- Advanced language models like GPT-4 play a crucial role in refining the text descriptions the model works with.

- DALL-E 3’s advancements promise to reshape the market for AI-driven creative content generation.

Main AI News:

In the fast-moving field of artificial intelligence, one area that has drawn substantial attention and investment is text-to-image generation. Among the frontrunners, DALL-E 3 has recently come into the spotlight for its ability to turn textual descriptions into coherent images. Even so, DALL-E 3 faces its own set of challenges, particularly in spatial awareness, text rendering, and maintaining specificity in the generated images. Recent research describes a training approach that blends synthetic and ground-truth captions, aiming to strengthen DALL-E 3’s image generation and address these persistent challenges.

To grasp the significance of this research, it’s essential to recognize the limitations that have held back DALL-E 3’s performance. The model has struggled to interpret spatial relationships accurately and to render legible text within images. These hurdles have, in turn, limited its ability to translate textual descriptions into visually coherent and contextually accurate images. In response, the OpenAI research team devised a training strategy designed to give DALL-E 3 a more nuanced understanding of textual context.

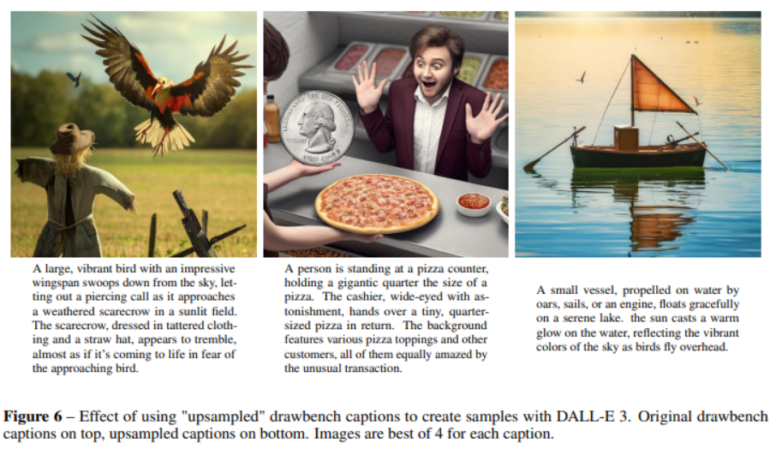

This strategy exposes the model to a diverse corpus that pairs images with two kinds of captions: synthetic captions produced by a dedicated image-captioning model, and ground-truth captions drawn from the original human-written descriptions. By mixing the two, the team aims to help DALL-E 3 capture the subtle details of a prompt, yielding images that are not only visually appealing but also conceptually rich.
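To make the caption-blending idea concrete, the Python sketch below shows one simple way such a mix could be drawn during training: for each image, a random draw weighted by a synthetic-caption ratio decides which caption conditions the model on that step. This is an illustrative assumption, not OpenAI’s training code; `TrainingExample`, `pick_caption`, `blended_batch`, and the 0.95 default ratio are hypothetical names and values chosen for the example.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TrainingExample:
    """One image paired with two candidate captions (hypothetical structure)."""
    image_id: str
    ground_truth_caption: str  # original human-written text, e.g. alt text
    synthetic_caption: str     # detailed caption produced by a captioning model


def pick_caption(example: TrainingExample, synthetic_ratio: float, rng: random.Random) -> str:
    """Choose which caption conditions the model for this training step."""
    # rng.random() is uniform in [0, 1), so this picks the synthetic caption
    # with probability synthetic_ratio.
    if rng.random() < synthetic_ratio:
        return example.synthetic_caption
    return example.ground_truth_caption


def blended_batch(
    examples: List[TrainingExample], synthetic_ratio: float = 0.95, seed: int = 0
) -> List[Tuple[str, str]]:
    """Pair each image with a caption, drawing synthetic ones with probability synthetic_ratio.

    The 0.95 default is an illustrative assumption, not a confirmed setting.
    """
    if not 0.0 <= synthetic_ratio <= 1.0:
        raise ValueError("synthetic_ratio must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the blend is reproducible
    return [(ex.image_id, pick_caption(ex, synthetic_ratio, rng)) for ex in examples]


if __name__ == "__main__":
    data = [
        TrainingExample("img-001", "a dog", "a golden retriever lying on a red couch beside a window"),
        TrainingExample("img-002", "sign", "a wooden sign reading 'OPEN' hanging on a glass door"),
    ]
    for image_id, caption in blended_batch(data):
        print(image_id, caption)
```

Drawing the caption per step, rather than fixing one caption per image, lets the same image appear with both caption styles over many epochs. That is one plausible design choice; the actual training pipeline may differ.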

Looking more closely at the method, the balance between synthetic and ground-truth captions plays a pivotal role in conditioning the model during training. The combined approach strengthens DALL-E 3’s ability to handle complex spatial relationships and to render text within generated images more accurately. The research team’s experiments and evaluations report significant improvements in DALL-E 3’s image quality and fidelity.

The study also highlights the role of advanced language models such as GPT-4 in the captioning process. By rewriting brief prompts into more detailed descriptions, these models improve the quality and depth of the text DALL-E 3 receives. This, in turn, supports more nuanced, contextually accurate, and visually engaging images that push the boundaries of what text-to-image models can achieve.

Conclusion:

The introduction of DALL-E 3, with its enhanced text-to-image capabilities and innovative training approach, marks a significant advance in the market for AI-driven creative content generation. Businesses can expect improved image quality and richer contextual understanding, setting the stage for new applications across industries from marketing to entertainment.