- DataRobot introduces guard models to address concerns surrounding toxic content and data leaks in generative AI applications.

- The AI Platform now features enhanced observability capabilities, including pre-configured guard models and advanced diagnostic tools.

- Guard models detect and prevent issues like prompt injections, toxicity, and privacy breaches, bolstering confidence in GenAI.

- DataRobot empowers organizations to deploy end-to-end guardrails for LLMs and GenAI applications, ensuring secure and responsible AI deployment.

- Feedback mechanisms enable continual refinement of GenAI applications, predicting and mitigating potential pitfalls.

- DataRobot’s adaptability facilitates seamless support for diverse GenAI use cases, positioning the company as a trusted partner in AI deployment.

Main AI News:

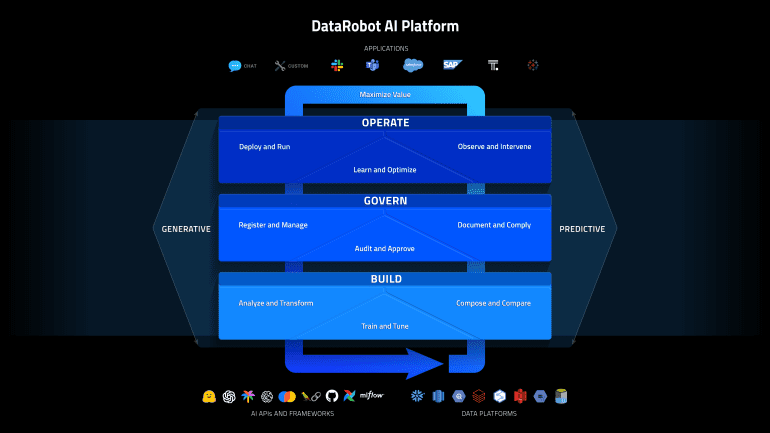

In the race to harness the potential of generative AI (GenAI), businesses encounter roadblocks, from concerns over toxic content to the risk of data breaches and hallucinations. Enter “guard models” – a proposed remedy to avert these dangers. DataRobot stands at the forefront, enhancing its AI Platform with new observability features tailored to thwart the missteps of large language models (LLMs).

These enhancements encompass a suite of pre-configured guard models, bolstered by advanced alerting mechanisms, visual diagnostic tools, and data quality checks. The aim? To assuage customer apprehensions surrounding GenAI and LLMs, according to DataRobot’s Chief Technology Officer, Michael Schmidt.

“Our clients consistently voice concerns regarding confidence in GenAI,” Schmidt asserts. “Many hesitate to deploy these systems due to uncertainties about performance and behavior.”

Guard models play a pivotal role in addressing these apprehensions. Equipped with DataRobot’s Generative AI Guard Library, users gain access to pre-built models adept at detecting and mitigating issues like prompt injections, toxicity, and privacy breaches. Additionally, clients can craft bespoke guard models tailored to their specific needs.

By deploying guard models in tandem with DataRobot’s AI Platform, organizations gain comprehensive safeguards against undesirable outcomes. Schmidt emphasizes the platform’s ability to evaluate the efficacy of these guardrails in real-world scenarios, offering insights into performance and mitigating risks.

Furthermore, DataRobot’s latest release introduces feedback mechanisms to refine GenAI applications continually. Leveraging insights from user experiences, the platform enables predictive analytics to anticipate and mitigate potential pitfalls, ensuring smoother deployment and operation of GenAI systems.

While DataRobot’s roots lie in traditional machine learning, the company has swiftly adapted to the GenAI landscape. Schmidt highlights the platform’s versatility, seamlessly accommodating a myriad of models and environments, thus enabling rapid adoption and support for diverse GenAI use cases.

“With DataRobot, clients gain a unified platform for managing and monitoring AI deployments, whether on cloud platforms or custom environments,” Schmidt explains. “This flexibility underpins our ability to support various generative AI workloads, positioning us as a trusted partner in navigating the complexities of AI deployment.”

By fortifying its arsenal with guard models and enhancing observability, DataRobot empowers businesses to harness the transformative potential of GenAI while mitigating associated risks, fostering a future where AI innovation thrives securely and responsibly.

Conclusion:

DataRobot’s introduction of guard models signifies a significant step towards enhancing AI security and fostering trust in generative AI applications. By addressing key concerns and offering comprehensive solutions, DataRobot is poised to play a pivotal role in shaping the future of AI deployment, enabling businesses to innovate confidently and responsibly in the AI-driven landscape.