TL;DR:

- Gensyn secures £34.25 million ($43 million) in Series A funding led by a16z Crypto.

- Gensyn utilizes blockchain technology to revolutionize AI model training.

- The company aims to establish a decentralized trust layer for machine learning.

- Gensyn’s Machine Learning Compute Protocol enables distributed cloud computing.

- The network connects underutilized computing devices globally for scalable machine learning.

- Gensyn’s protocol shows promising performance with low overhead and high scalability.

- Overcoming challenges such as generalizability, heterogeneity, and latency is crucial for success.

- Gensyn strives to reduce the cost of ML computing and offer trustless model training.

Main AI News:

Shortly after expanding internationally with the opening of a new office in London, venture capital firm a16z Crypto has made another significant announcement: it is leading a £34.25 million ($43 million) Series A funding round for Gensyn, a company that applies blockchain technology to AI model training.

Gensyn, founded by computer science and machine learning veterans Ben Fielding and Harry Grieve in 2020, had previously received $6.5 million in seed funding from Galaxy Digital in March 2022. The company has also attracted investments from renowned entities like CoinFund, Protocol Labs, Canonical Crypto, and Eden Block.

With this new injection of funds, Gensyn is poised to grow significantly beyond its current team of two co-founders. As the popularity of ChatGPT continues to soar and draw attention to AI, Gensyn’s Machine Learning Compute Protocol is positioned as a pay-as-you-go distributed cloud computing service for software engineers and researchers.

Building a Decentralized Trust Layer for Machine Learning

Combining blockchain technology with AI has often been perceived as inefficient. Blockchains keep immutable records that are distributed across many network nodes and must be verified by them. AI models, in contrast, require high data throughput and frequent updates, which makes them a poor fit for traditional blockchains. However, a16z sees UK-based Gensyn as the breakthrough needed to establish a “decentralized trust layer for machine learning,” in the words of Gensyn co-founder Harry Grieve.

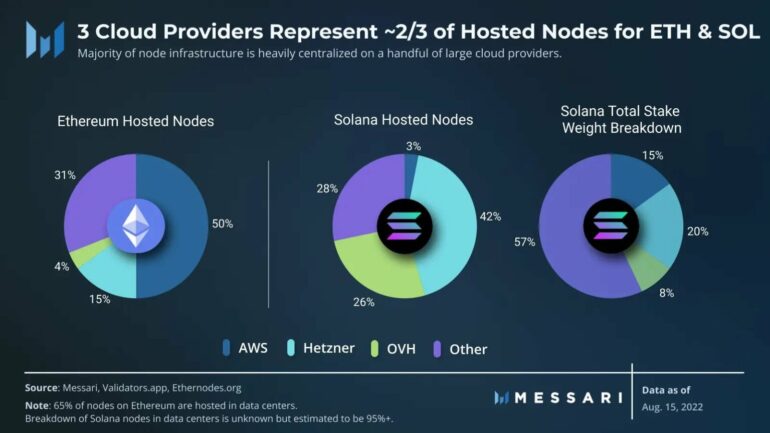

Similar to how Bitcoin eliminates the need for trusted intermediaries in monetary transactions, Gensyn’s peer-to-peer (P2P) network aims to disrupt cloud computing by circumventing the dominance of players like Amazon Web Services (AWS). The Gensyn network protocol endeavors to achieve this by harnessing the power of underutilized computing devices worldwide. This includes consumer GPUs utilized in video gaming, custom ASICs for mining, and System-on-Chip (SoC) devices commonly integrated into smartphones and tablets.

Of particular interest are consumer SoC devices, which possess the necessary components—memory, CPU, GPU, and storage—on a single microchip. This compactness enables scalability as these devices can form a computing cluster within a distributed system, making them highly sought-after for machine learning tasks such as training neural networks.

Unveiling Gensyn’s Scalability

Gensyn’s network operates as a layer-one proof-of-stake protocol, similar to Ethereum or Solana. It is built on Substrate, a framework that facilitates peer-to-peer communication between network nodes. Gensyn’s Python simulations, which evaluate the protocol’s performance on the Modified National Institute of Standards and Technology (MNIST) dataset, a widely used machine learning benchmark, show low overhead compared with native runtime.

According to Harry Grieve, Gensyn offers unlimited scalability and very low verification overhead. The core difficulty lies in establishing a trustless consensus mechanism capable of executing small computations, which means addressing six key challenges:

- Generalizability: Ensuring ML developers can receive trained neural networks irrespective of custom architecture or dataset.

- Heterogeneity: Leveraging different processing architectures across various operating systems.

- Overhead: Computational verification must impose minimal overhead to be viable. For instance, Gensyn highlights that utilizing Ethereum for ML would result in a ~7850x computational overhead compared to AWS using Nvidia GPUs like the V100 Tensor Core.

- Scalability: Specialized hardware can hinder scalability.

- Trustlessness: Scaling becomes impossible if trust remains a prerequisite, as it necessitates a trusted party to verify the work.

- Latency: Training neural networks demands low latency, as high latency adversely affects inference—the ability of a properly trained machine learning model to make real-world predictions on newly presented data.
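To make the overhead criterion in the list above concrete, here is a minimal Python sketch. It treats verification overhead as a simple multiplier on native runtime, which is a simplifying assumption rather than Gensyn’s own model. The function name, the one-hour workload, and the 1.1x comparison figure are hypothetical and chosen only for illustration; the ~7850x factor is the only number taken from the article.

```python
def verified_runtime_hours(native_hours: float, overhead_factor: float) -> float:
    """Return the runtime after applying verification overhead as a multiplier.

    An overhead_factor of 1.0 means verification adds no extra time.
    """
    if native_hours < 0:
        raise ValueError("native_hours must be non-negative")
    if overhead_factor < 1:
        raise ValueError("overhead_factor must be at least 1")
    return native_hours * overhead_factor


# Approximate factor the article quotes for running ML on Ethereum.
ETHEREUM_OVERHEAD = 7850.0
# Hypothetical low-overhead figure, for comparison only (not a Gensyn number).
HYPOTHETICAL_LOW_OVERHEAD = 1.1

native_job_hours = 1.0  # assumed: a job that takes one hour on native hardware

for label, factor in [
    ("Ethereum-style verification", ETHEREUM_OVERHEAD),
    ("hypothetical low-overhead verification", HYPOTHETICAL_LOW_OVERHEAD),
]:
    hours = verified_runtime_hours(native_job_hours, factor)
    print(f"{label}: {hours:.1f} hours ({hours / 24:.1f} days)")
```

Under these assumptions, a job that takes one hour natively would take about 327 days at a 7850x overhead, which illustrates why minimal verification overhead is treated as a precondition for viability.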

In essence, Gensyn aims to establish an incentive structure that allows “the unit cost of ML computes to settle into its fair equilibrium.” By the company’s projections, this would make Gensyn more cost-efficient than even AWS.

Ultimately, for AI to integrate seamlessly into people’s lives, it is crucial that AI models are trained with trustlessness at their core. Gensyn aims to make this vision a reality.

Conclusion:

Gensyn’s approach to decentralized machine learning using blockchain technology has attracted significant investment and could disrupt the cloud computing market. By leveraging underutilized computing devices, Gensyn’s protocol addresses scalability concerns and offers a cost-effective way to train AI models. However, overcoming challenges related to generalizability, heterogeneity, and latency remains pivotal. If the company succeeds, it could reshape the landscape of machine learning by offering trustless model training and driving innovation in the AI industry.